Interaction-Based Distributed Learning in Cyber-Physical and Social Networks

Jun 13, 2017

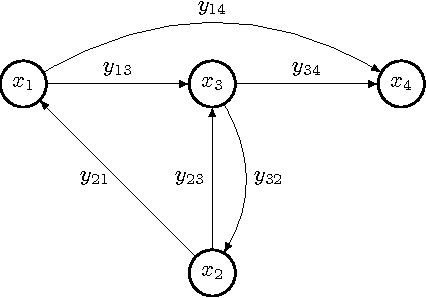

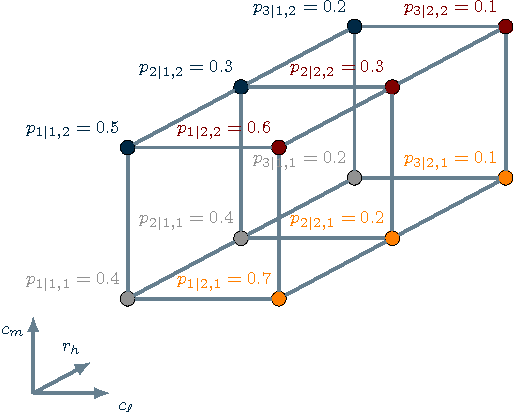

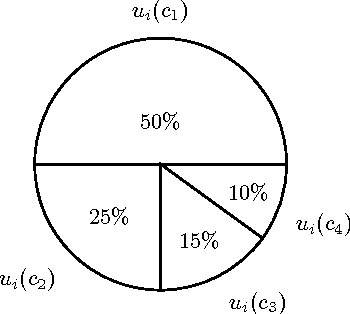

In this paper, we consider a network scenario in which agents can evaluate each other according to a score graph that models some physical or social interaction. The goal is to design a distributed protocol, run by the agents, that allows each of them to learn its unknown state among a finite set of possible values. We propose a Bayesian framework in which scores and states are associated with probabilistic events with unknown parameters and hyperparameters, respectively. We prove that each agent can learn its state by means of a local Bayesian classifier and a (centralized) Maximum-Likelihood (ML) estimator of the parameter-hyperparameter that combines plain ML and Empirical Bayes approaches. Using tools from graphical models, which give insight into the conditional dependencies of scores and states, we provide two relaxed probabilistic models that ultimately lead to ML parameter-hyperparameter estimators amenable to distributed computation. To highlight the appropriateness of the proposed relaxations, we demonstrate the distributed estimators on a machine-to-machine testing setup for anomaly detection and on a social interaction setup for user profiling.
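
As a rough illustration of the local Bayesian classifier mentioned above, the following minimal sketch computes a maximum a posteriori (MAP) state for one agent from the scores it has received. It is not the paper's model: it assumes, purely for illustration, that the scores are conditionally independent given the agent's state, and that the prior and likelihood table (standing in for the estimated hyperparameters and parameters) are already known and strictly positive. The names `posterior_over_states`, `classify`, `prior`, and `likelihood` are hypothetical.

```python
import math


def posterior_over_states(scores, prior, likelihood):
    """Posterior P(state = k | scores) under an assumed naive-independence model.

    scores      -- list of discrete score values received by the agent
    prior       -- prior[k] = P(state = k), assumed strictly positive
    likelihood  -- likelihood[k][s] = P(score = s | state = k), assumed strictly positive
    """
    log_post = []
    for k, p_k in enumerate(prior):
        # Accumulate log prior plus the log-likelihood of every received score.
        lp = math.log(p_k)
        for s in scores:
            lp += math.log(likelihood[k][s])
        log_post.append(lp)

    # Normalize in a numerically stable way (subtract the maximum before exp).
    m = max(log_post)
    weights = [math.exp(lp - m) for lp in log_post]
    total = sum(weights)
    return [w / total for w in weights]


def classify(scores, prior, likelihood):
    """Return the index of the MAP state."""
    post = posterior_over_states(scores, prior, likelihood)
    return max(range(len(post)), key=post.__getitem__)


# Illustrative numbers only: state 0 = "nominal", state 1 = "anomalous";
# score 0 = "pass", score 1 = "fail".
prior = [0.8, 0.2]
likelihood = [
    [0.9, 0.1],  # a nominal agent mostly receives "pass" scores
    [0.3, 0.7],  # an anomalous agent mostly receives "fail" scores
]
received_scores = [1, 1, 0, 1]
estimated_state = classify(received_scores, prior, likelihood)
```

In this toy setting the classifier only needs the scores available locally at the agent, which is the property that makes the classification step local; estimating the prior and likelihood values themselves is the part the abstract addresses with ML estimators and their distributed relaxations.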
