Inspect Transfer Learning Architecture with Dilated Convolution


Nov 20, 2019

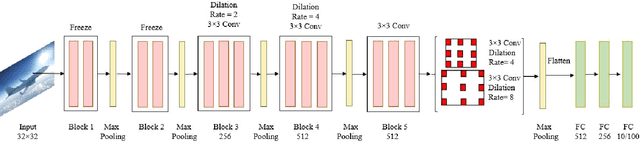

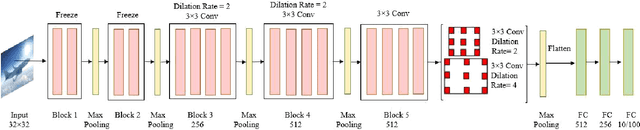

Many award-winning pre-trained Convolutional Neural Networks (CNNs) share a common trend: increasing depth in their convolutional layers. In this paper, I inspect the VGG network, one of the well-known models submitted to ILSVRC-2014, to show that a slight modification of the basic architecture can improve accuracy on the image classification task. I present two improved architectures of the pre-trained VGG-16 and VGG-19 networks that apply transfer learning when trained on a different dataset. I report a series of experimental results on various modifications of the primary VGG networks and achieve significantly better performance on the image classification task by (1) freezing the first two blocks of convolutional layers to prevent over-fitting and (2) applying different combinations of dilation rates in the last three blocks of convolutional layers to reduce image resolution for feature extraction. Both proposed architectures achieve competitive results on the CIFAR-10 and CIFAR-100 datasets.
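The two modifications named in the abstract can be illustrated with a small, framework-free sketch. It lays out the standard VGG-16 convolutional blocks, marks the first two as frozen, and assigns a dilation rate to each block. The dilation rates `(1, 1, 2, 2, 2)` and the names `ConvLayer`, `build_plan`, and `effective_kernel` are assumptions made for illustration only; the abstract does not state which rate combinations were used, and this is not the paper's code.

```python
from dataclasses import dataclass

# Standard VGG-16 convolutional blocks: (number of 3x3 conv layers, output channels).
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


@dataclass
class ConvLayer:
    block: int          # 1-based block index
    out_channels: int
    dilation: int       # dilation rate applied to the 3x3 kernel
    trainable: bool     # False means the pre-trained weights stay frozen


def effective_kernel(kernel_size: int, dilation: int) -> int:
    """Spatial extent covered by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def build_plan(blocks, frozen_blocks=2, dilation_rates=(1, 1, 2, 2, 2)):
    """Return one ConvLayer per convolution in the network.

    frozen_blocks: how many leading blocks keep their pre-trained weights.
    dilation_rates: one rate per block (hypothetical values, not from the paper).
    """
    if len(dilation_rates) != len(blocks):
        raise ValueError("need exactly one dilation rate per block")
    plan = []
    for index, (num_layers, channels) in enumerate(blocks, start=1):
        for _ in range(num_layers):
            plan.append(
                ConvLayer(
                    block=index,
                    out_channels=channels,
                    dilation=dilation_rates[index - 1],
                    trainable=index > frozen_blocks,
                )
            )
    return plan


plan = build_plan(VGG16_BLOCKS)
for layer in plan:
    status = "trainable" if layer.trainable else "frozen"
    span = effective_kernel(3, layer.dilation)
    print(f"block {layer.block}: {layer.out_channels:3d} ch, "
          f"dilation {layer.dilation}, covers {span}x{span}, {status}")
```

In a deep learning framework, the same plan would typically translate into disabling gradient updates for the frozen layers and passing the dilation rate to each convolution in the last three blocks. A 3x3 kernel with dilation 2 spans a 5x5 region while keeping the same nine weights, so the pre-trained kernels can still be loaded.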
