Inference Using Message Propagation and Topology Transformation in Vector Gaussian Continuous Networks

Feb 13, 2013

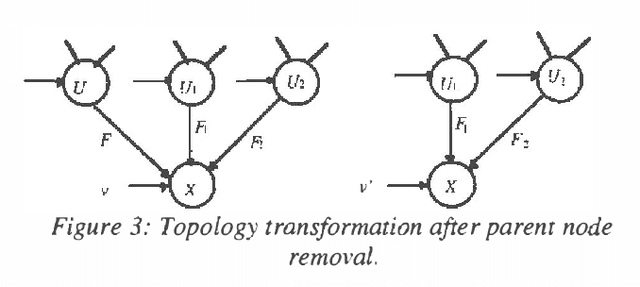

We extend Gaussian networks (directed acyclic graphs that encode probabilistic relationships between variables) to their vector form. Vector Gaussian continuous networks consist of composite nodes representing multivariate variables that take continuous values. Unlike conventional univariate nodes, these vector (composite) nodes can represent correlations between parents. We derive rules for inference in these networks based on two methods: message propagation and topology transformation. These two approaches lead to algorithms that can be implemented in either a centralized or a decentralized manner. These networks apply mainly to monitoring and estimation problems. This new representation, together with the inference rules developed here, can be used to derive existing Bayesian algorithms such as the Kalman filter, and it provides a rich foundation for developing new algorithms. We illustrate this process by deriving the decentralized form of the Kalman filter. This work unifies concepts from artificial intelligence and modern control theory.
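As a rough illustration of the link between vector Gaussian nodes and the Kalman filter, the sketch below treats the simplest two-node case. A vector parent node x ~ N(m, P) is first propagated through a linear-Gaussian transition x' = F x + w, then conditioned on a scalar child observation z = h·x + v. These are the textbook (centralized) Kalman predict and update equations, not the authors' message-propagation or topology-transformation rules. The function names `predict` and `update`, the scalar-measurement restriction, and the pure-Python matrix helpers are all illustrative assumptions.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b (hypothetical helper, no NumPy)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def predict(m: Vector, P: Matrix, F: Matrix, Q: Matrix) -> Tuple[Vector, Matrix]:
    """Propagate a vector Gaussian node x ~ N(m, P) through x' = F x + w, w ~ N(0, Q)."""
    m_new = [sum(F[i][j] * m[j] for j in range(len(m))) for i in range(len(F))]
    FPFt = matmul(matmul(F, P), transpose(F))
    P_new = [[FPFt[i][j] + Q[i][j] for j in range(len(Q))] for i in range(len(Q))]
    return m_new, P_new


def update(m: Vector, P: Matrix, h: Vector, r: float, z: float) -> Tuple[Vector, Matrix]:
    """Condition x ~ N(m, P) on an observed scalar child z = h.x + v, v ~ N(0, r)."""
    n = len(m)
    Ph = [sum(P[i][j] * h[j] for j in range(n)) for i in range(n)]  # P h^T
    s = sum(h[i] * Ph[i] for i in range(n)) + r  # innovation variance h P h^T + r
    k = [v / s for v in Ph]  # Kalman gain
    innovation = z - sum(h[i] * m[i] for i in range(n))
    m_new = [m[i] + k[i] * innovation for i in range(n)]
    # P - k (h P); P is symmetric, so h P equals (P h^T)^T = Ph.
    P_new = [[P[i][j] - k[i] * Ph[j] for j in range(n)] for i in range(n)]
    return m_new, P_new


if __name__ == "__main__":
    # Assumed constant-velocity model: state = (position, velocity), dt = 1, no process noise.
    m, P = predict([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]],
                   [[1.0, 1.0], [0.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]])
    # Observe position only (h = [1, 0]) with unit noise variance.
    m, P = update(m, P, [1.0, 0.0], 1.0, 3.0)
    print(m, P)
```

With the assumed numbers, the prediction step gives P = [[2, 1], [1, 1]]. Observing position 3 then moves the mean to roughly (2, 1) even though velocity was never measured directly, because the joint Gaussian encodes the correlation between the two components of the composite node.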
