Incremental Learning of Event Definitions with Inductive Logic Programming


Nov 22, 2014

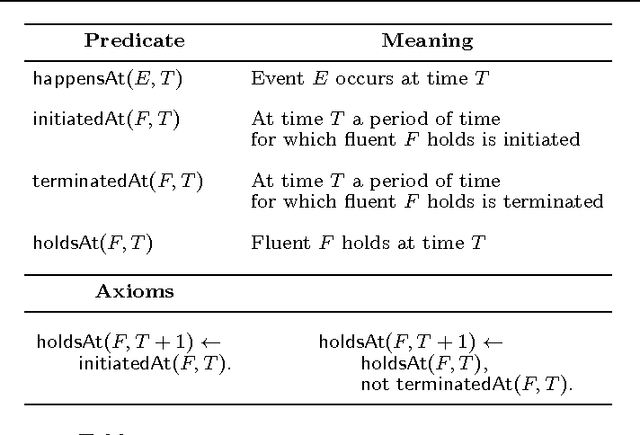

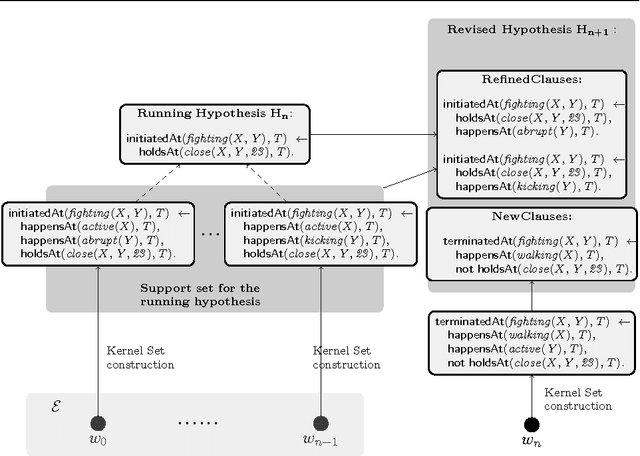

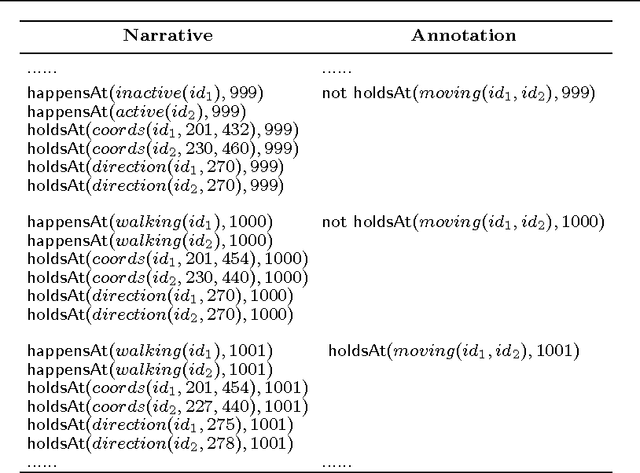

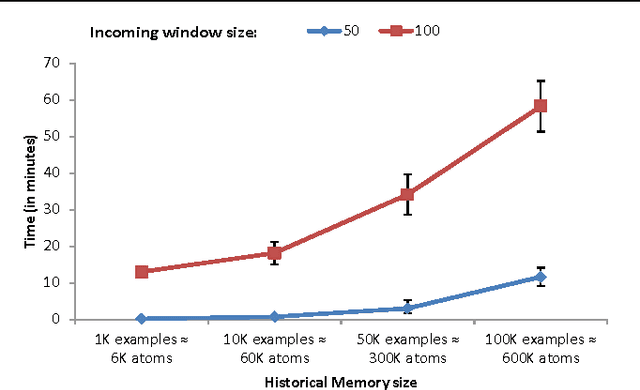

Event recognition systems rely on properly engineered knowledge bases of event definitions to infer occurrences of events in time. The manual development of such knowledge is a tedious and error-prone task; thus, event-based applications may benefit from automated knowledge construction techniques, such as Inductive Logic Programming (ILP), which combines machine learning with the declarative and formal semantics of First-Order Logic. However, learning the temporal logical formalisms typically used by logic-based Event Recognition systems is a challenging task that most ILP systems cannot fully undertake. In addition, event-based data is usually massive and collected at different times and under various circumstances. Ideally, systems that learn from temporal data should be able to operate in an incremental mode, that is, revise previously constructed knowledge in the face of new evidence. Most ILP systems are batch learners, in the sense that, in order to account for new evidence, they have no alternative but to forget past knowledge and learn from scratch. Given the high inherent complexity of ILP and the volumes of real-life temporal data, this results in algorithms that scale poorly. In this work we present an incremental method for learning and revising event-based knowledge in the form of Event Calculus programs. The proposed algorithm relies on abductive-inductive learning and comprises a scalable clause refinement methodology, based on a compressive summarization of clause coverage in a stream of examples. We present an empirical evaluation of our approach on real and synthetic data from activity recognition and city transport applications.
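For readers unfamiliar with the Event Calculus, the following Python sketch illustrates the kind of temporal reasoning such programs encode. It assumes a simplified discrete-time Event Calculus with a single fluent that is false at time 0. The fluent holds at t+1 if it is initiated at t, or if it already holds at t and is not terminated at t (the law of inertia). The `holds_at` function, the `walking`/`inactive` events and the hand-written rules are hypothetical illustrations, not the authors' code. In the learning setting described above, rules of this initiation/termination kind would presumably be what the ILP system is asked to induce and revise.

```python
from typing import Callable, Dict, List, Set

# Hypothetical narrative: the set of event names observed at each integer time point.
Narrative = Dict[int, Set[str]]
# Hypothetical rule: fires if the events happening at time t satisfy its conditions.
Rule = Callable[[Set[str]], bool]


def holds_at(initiated_rules: List[Rule], terminated_rules: List[Rule],
             narrative: Narrative, horizon: int) -> List[bool]:
    """Return, for t = 0..horizon, whether the fluent holds at t.

    Simplified discrete Event Calculus (an assumption, for illustration):
      holdsAt(F, t+1) <- initiatedAt(F, t)
      holdsAt(F, t+1) <- holdsAt(F, t), not terminatedAt(F, t)
    If a fluent is both initiated and terminated at t, initiation wins.
    """
    holds = [False] * (horizon + 1)  # the fluent is assumed false at time 0
    for t in range(horizon):
        events = narrative.get(t, set())
        initiated = any(rule(events) for rule in initiated_rules)
        terminated = any(rule(events) for rule in terminated_rules)
        # Inertia: keep the previous value unless a termination rule fires.
        holds[t + 1] = initiated or (holds[t] and not terminated)
    return holds


# Hypothetical "moving" fluent: initiated by walking, terminated by inactivity.
init_moving = [lambda ev: "walking" in ev]
term_moving = [lambda ev: "inactive" in ev]
narrative = {1: {"walking"}, 4: {"inactive"}}
print(holds_at(init_moving, term_moving, narrative, 6))
# → [False, False, True, True, True, False, False]
```

Note how the fluent persists at times 3 and 4 even though no event occurs at times 2 and 3: this persistence comes from inertia, not from any rule firing, which is part of what makes learning such definitions harder than learning plain classification rules.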
