Improving Graph Property Prediction with Generalized Readout Functions


Sep 21, 2020

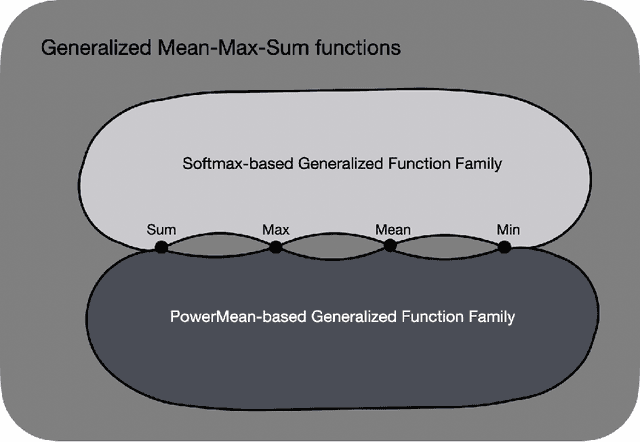

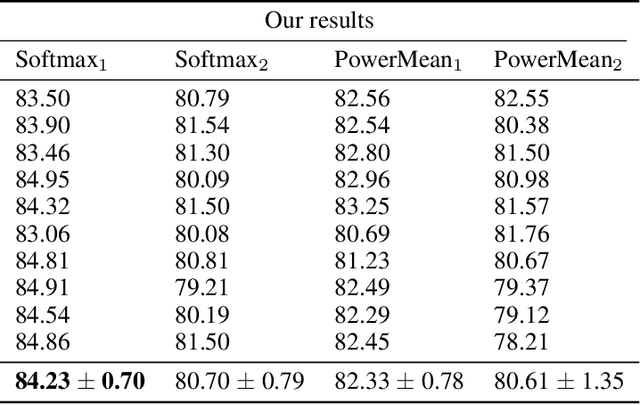

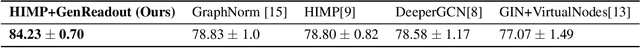

Graph property prediction has drawn increasing attention in recent years because graphs are among the most general data structures: they can contain an arbitrary number of nodes and connections between them. It is the backbone of many tasks, such as classification and regression on graph-structured data (networks, molecules, knowledge bases, ...). We introduce a novel generalized global pooling layer to mitigate the information loss that typically occurs at the Readout phase in Message-Passing Neural Networks. The layer is parametrized by two values ($\beta$ and $p$) that can optionally be learned, and under specific settings of these parameters its transformation reduces to several popular readout functions (mean, max and sum). To showcase the expressiveness and performance of this technique, we test it on a popular graph property prediction task by taking the current best-performing architecture and using our readout layer as a drop-in replacement, and we report new state-of-the-art results. The code to reproduce the experiments is available at https://github.com/EricAlcaide/generalized-readout-phase
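To make the idea of a two-parameter readout concrete, here is a minimal sketch of one parametrization that is consistent with the description above. It is an assumption for illustration and not necessarily the exact formula used in the paper or its repository: each feature is pooled as $\left(\frac{1}{N^{\beta}} \sum_{i=1}^{N} x_i^{p}\right)^{1/p}$, where $N$ is the number of nodes. Under this form, $\beta = 1, p = 1$ gives the mean, $\beta = 0, p = 1$ gives the sum, and large $p$ approaches the max. The function name `generalized_readout` is hypothetical, the parameters are fixed rather than learned, and node features are assumed to be non-negative (for example, the output of a ReLU).

```python
import math
from typing import List, Sequence


def generalized_readout(
    node_features: Sequence[Sequence[float]],
    beta: float = 1.0,
    p: float = 1.0,
) -> List[float]:
    """Pool per-node feature vectors into one graph-level vector.

    Illustrative form (an assumption, not the paper's confirmed formula):
        out_j = (sum_i x_ij ** p / N ** beta) ** (1 / p)
    Requires non-negative features and p > 0.
    """
    if not node_features:
        raise ValueError("graph must contain at least one node")
    if p <= 0:
        raise ValueError("p must be positive")

    num_nodes = len(node_features)
    pooled = []
    # zip(*rows) iterates over feature columns, i.e. one feature across all nodes.
    for column in zip(*node_features):
        if min(column) < 0:
            raise ValueError("this sketch assumes non-negative features")
        peak = max(column)
        if peak == 0.0:
            pooled.append(0.0)
            continue
        # Factor out the largest value so that large p does not overflow.
        power_sum = sum((value / peak) ** p for value in column)
        pooled.append(peak * (power_sum / num_nodes ** beta) ** (1.0 / p))
    return pooled


# Three nodes with two features each.
features = [[1.0, 2.0], [3.0, 4.0], [5.0, 0.0]]
mean_like = generalized_readout(features, beta=1.0, p=1.0)
sum_like = generalized_readout(features, beta=0.0, p=1.0)
max_like = generalized_readout(features, beta=0.0, p=200.0)
```

In a trainable layer, $\beta$ and $p$ would be parameters updated by gradient descent, so the network could interpolate between mean-, sum- and max-like pooling instead of committing to one of them in advance.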
