Improved symbolic drum style classification with grammar-based hierarchical representations

Jul 24, 2024

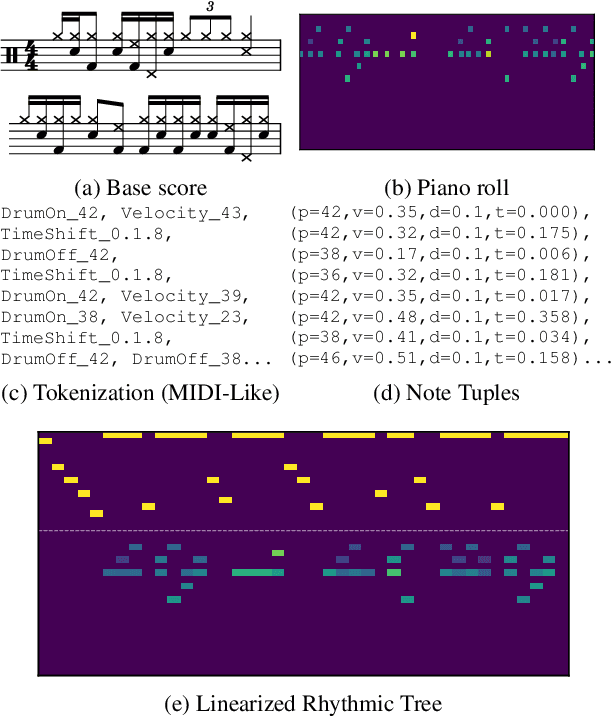

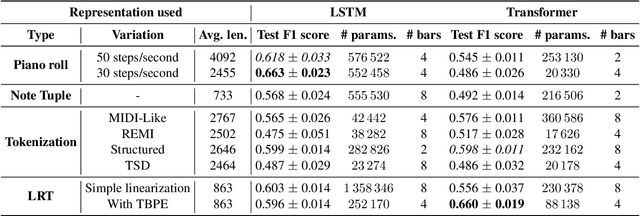

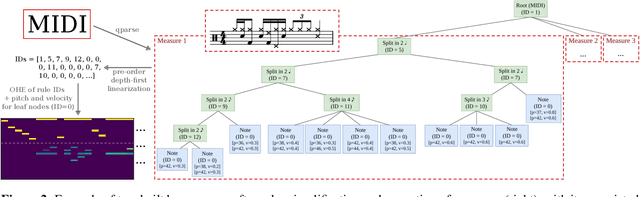

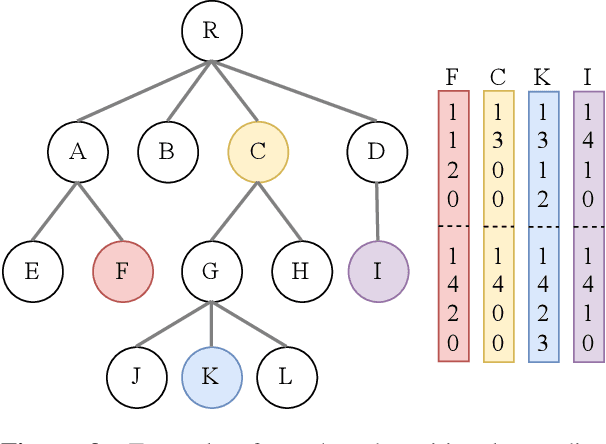

Deep learning models have become a critical tool for the analysis and classification of musical data. These models operate either on the audio signal, e.g. a waveform or spectrogram, or on a symbolic representation such as MIDI. In the latter case, musical information is often reduced to basic features, i.e. durations, pitches and velocities. Most existing works then rely on generic tokenization strategies borrowed from classical natural language processing, or on matrix representations such as the piano roll. In this work, we evaluate how enriched representations of symbolic data affect deep models, specifically Transformers and RNNs, for music style classification. In particular, we examine representations that make explicit the musical information that is only implicit in MIDI-like encodings, such as rhythmic organization, and show that they outperform generic tokenization strategies. We introduce a new tree-based representation of MIDI data built upon a context-free musical grammar. We show that this grammar representation accurately encodes high-level rhythmic information and outperforms existing encodings on the GrooveMIDI Dataset for drumming style classification, while being more compact and parameter-efficient.
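To illustrate what a hierarchical encoding of rhythm can look like, the following is a minimal sketch that turns the onsets of one bar into a subdivision tree and then into a bracketed token sequence. It is not the grammar used in the paper, which the abstract does not specify. The binary subdivision rule, the function names `build_tree` and `linearize`, the `hit`/`rest` labels and the `max_depth` cutoff are all assumptions made for illustration.

```python
from fractions import Fraction


def build_tree(onsets, start=Fraction(0), end=Fraction(1), depth=0, max_depth=4):
    """Recursively halve the span [start, end) of a bar.

    Hypothetical rule set (not the paper's grammar): a span becomes a leaf
    when it is empty ("rest") or holds a single onset exactly at its start
    ("hit"); otherwise it is split into two equal halves.
    """
    inside = sorted({t for t in onsets if start <= t < end})
    if not inside:
        return "rest"
    if inside == [start]:
        return "hit"
    if depth == max_depth:
        # Assumed quantisation: anything finer than the grid counts as one hit.
        return "hit"
    mid = (start + end) / 2
    return [
        build_tree(inside, start, mid, depth + 1, max_depth),
        build_tree(inside, mid, end, depth + 1, max_depth),
    ]


def linearize(tree):
    """Flatten the tree into a bracketed token list a sequence model could read."""
    if isinstance(tree, str):
        return [tree]
    tokens = ["("]
    for child in tree:
        tokens.extend(linearize(child))
    tokens.append(")")
    return tokens


# Onsets are positions within one bar, expressed as fractions of the bar.
syncopated = [Fraction(0), Fraction(3, 4)]
tree = build_tree(syncopated)
print(tree)             # ['hit', ['rest', 'hit']]
print(linearize(tree))  # ['(', 'hit', '(', 'rest', 'hit', ')', ')']
```

In this toy encoding the nesting depth of each `hit` reflects its metrical position, which a flat list of onset times and durations leaves implicit.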
