Improved Anomaly Detection through Conditional Latent Space VAE Ensembles

Paper and Code

Oct 16, 2024

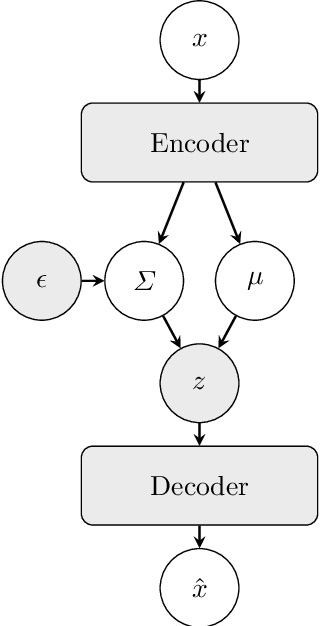

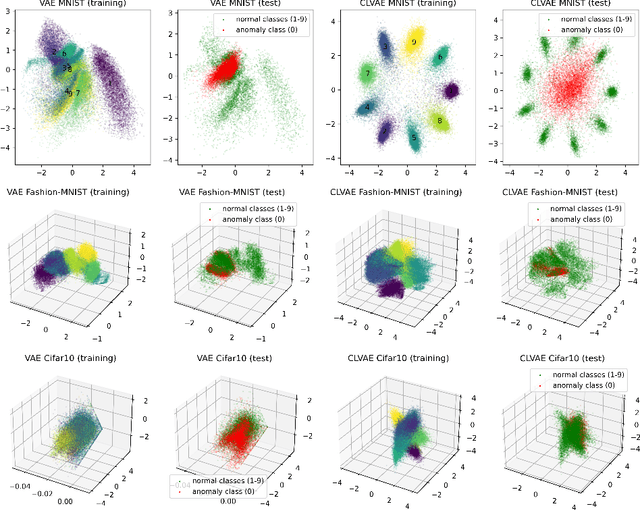

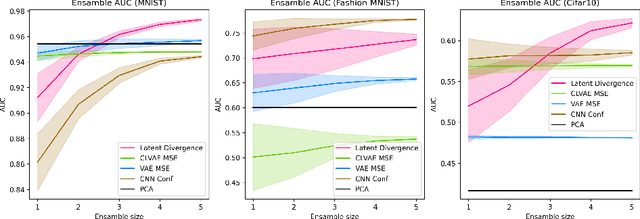

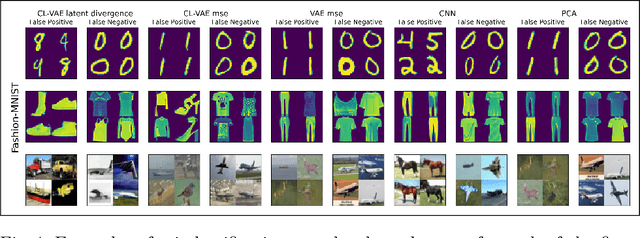

We propose a novel Conditional Latent space Variational Autoencoder (CL-VAE) to perform improved pre-processing for anomaly detection on data with known inlier classes and unknown outlier classes. The proposed variational autoencoder (VAE) improves latent space separation by conditioning on information within the data. The method fits a unique prior distribution to each class in the dataset, effectively expanding the classic VAE prior into a Gaussian mixture model. An ensemble of these VAEs is merged in the latent spaces to form a group consensus that greatly improves the accuracy of anomaly detection across datasets. Our approach is compared against a typical VAE, a CNN, and PCA in terms of AUC for anomaly detection. The proposed model shows increased accuracy in anomaly detection, achieving an AUC of 97.4% on the MNIST dataset compared to 95.7% for the second-best model. In addition, the CL-VAE shows increased benefits from ensembling, a more interpretable latent space, and an increased ability to learn patterns in complex data with limited model sizes.
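The abstract does not give implementation details, so the following is only a minimal NumPy sketch of the two ideas it describes: a per-class Gaussian prior in the KL term, and merging several models' latent spaces before scoring. Every specific here is an assumption made for illustration, not the authors' method: unit-variance class priors, the function names `kl_to_class_prior`, `anomaly_score`, and `merged_ensemble_score`, merging by concatenating latent vectors, and scoring by squared distance to the nearest class mean.

```python
import numpy as np


def kl_to_class_prior(mu, log_var, class_means, labels):
    """Per-sample KL( N(mu, diag(exp(log_var))) || N(class_means[label], I) ).

    Assumption: each class prior is a unit-variance Gaussian centred on its
    own mean, so the set of priors forms a Gaussian mixture over classes.
    """
    prior_mu = class_means[labels]  # shape (n_samples, latent_dim)
    return 0.5 * np.sum(
        np.exp(log_var) + (mu - prior_mu) ** 2 - 1.0 - log_var, axis=1
    )


def anomaly_score(z, class_means):
    """Squared distance from each latent vector to its nearest class mean.

    Assumption: a point far from every inlier class prior is treated as
    more anomalous (higher score).
    """
    # Broadcast to (n_samples, n_classes, latent_dim), then reduce.
    d2 = np.sum((z[:, None, :] - class_means[None, :, :]) ** 2, axis=2)
    return d2.min(axis=1)


def merged_ensemble_score(latents, class_means_per_model):
    """Score samples in the concatenated latent space of several models.

    Assumption: "merging" is modelled as concatenating each model's latent
    vectors and class means along the feature axis.
    """
    z_cat = np.concatenate(latents, axis=1)
    means_cat = np.concatenate(class_means_per_model, axis=1)
    return anomaly_score(z_cat, means_cat)
```

In this sketch, an encoder output whose mean coincides with its class prior mean and whose log-variance is zero has a KL term of zero, and the KL term grows quadratically as the mean drifts toward another class. At test time, where no label is available, only `anomaly_score` or `merged_ensemble_score` would be used, and the resulting scores could be passed to any standard AUC routine.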
