Hindsight Network Credit Assignment


Nov 24, 2020

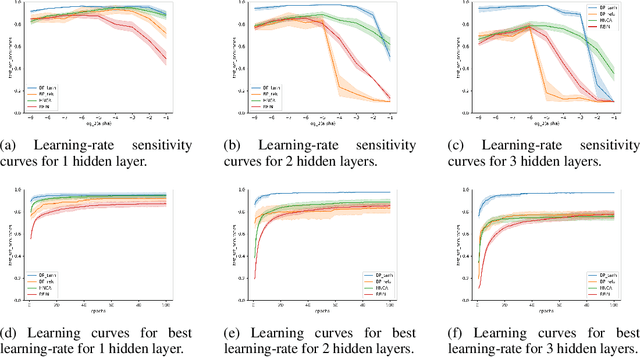

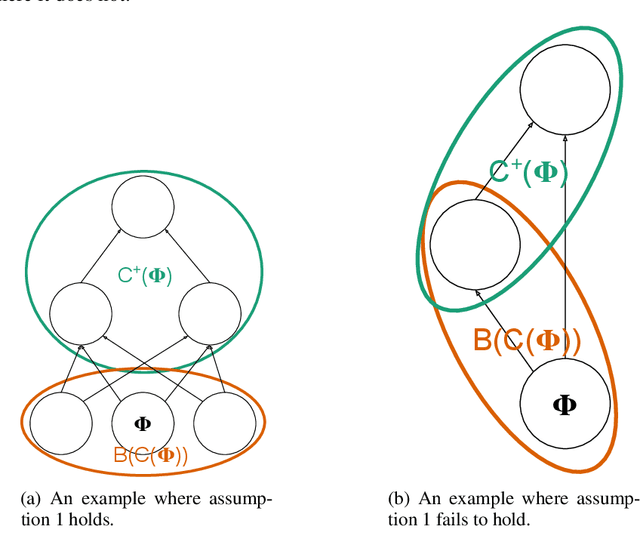

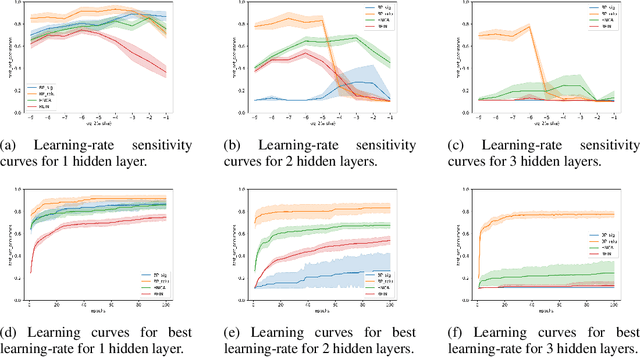

We present Hindsight Network Credit Assignment (HNCA), a novel learning method for stochastic neural networks, which works by assigning credit to each neuron's stochastic output based on how it influences the output of its immediate children in the network. We prove that HNCA provides unbiased gradient estimates while reducing variance compared to the REINFORCE estimator. We also experimentally demonstrate the advantage of HNCA over REINFORCE in a contextual bandit version of MNIST. The computational complexity of HNCA is similar to that of backpropagation. We believe that HNCA can help stimulate new ways of thinking about credit assignment in stochastic compute graphs.
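The unbiasedness and variance claims can be checked by hand on a toy case. The sketch below is not the paper's implementation. It assumes the smallest possible network: one Bernoulli hidden unit `h` with firing probability `sigmoid(theta)`, one Bernoulli child `y` whose firing probability `q[h]` depends on `h`, and a reward that depends only on `y`. The names `estimator_moments`, `q`, and `reward` are hypothetical. In this setting, a hindsight-style estimator replaces the REINFORCE score of the sampled `h` with the sensitivity of the child's observed output to `theta`, averaged over both values of `h`. Enumerating the four `(h, y)` outcomes gives the exact mean and variance of each estimator.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def estimator_moments(theta, q, reward):
    """Exact mean and variance of two estimators of dE[R]/dtheta.

    Assumed toy network (illustrative, not from the paper):
      h ~ Bernoulli(sigmoid(theta)), y ~ Bernoulli(q[h]), R = reward[y].
    """
    p = sigmoid(theta)
    pi = (1.0 - p, p)                        # P(h = 0), P(h = 1)
    dpi = (-p * (1.0 - p), p * (1.0 - p))    # dP(h)/dtheta

    def lik(y, h):
        # P(y | h) for the single child unit.
        return q[h] if y == 1 else 1.0 - q[h]

    # Ground truth: differentiate E[R] = sum_{h,y} P(h) P(y|h) R(y).
    true_grad = sum(dpi[h] * lik(y, h) * reward[y]
                    for h in (0, 1) for y in (0, 1))

    mean = {"reinforce": 0.0, "hindsight": 0.0}
    second = {"reinforce": 0.0, "hindsight": 0.0}
    for h in (0, 1):
        for y in (0, 1):
            joint = pi[h] * lik(y, h)                        # P(h, y)
            p_y = sum(pi[k] * lik(y, k) for k in (0, 1))     # P(y)
            dp_y = sum(dpi[k] * lik(y, k) for k in (0, 1))   # dP(y)/dtheta
            samples = {
                # REINFORCE: reward times the score of the sampled h.
                "reinforce": reward[y] * dpi[h] / pi[h],
                # Hindsight-style: credit h through its effect on the child y.
                "hindsight": reward[y] * dp_y / p_y,
            }
            for name, g in samples.items():
                mean[name] += joint * g
                second[name] += joint * g * g

    var = {name: second[name] - mean[name] ** 2 for name in mean}
    return true_grad, mean, var


true_grad, mean, var = estimator_moments(theta=0.3, q=(0.2, 0.9), reward=(0.0, 1.0))
print("true gradient:", true_grad)
print("means:", mean)
print("variances:", var)
```

In this one-child case the hindsight-style estimate equals the REINFORCE estimate averaged over `h` given the observed `y`, which is why both have the same mean while the former cannot have higher variance. Networks with several children per unit need the product of the children's likelihoods, which this sketch does not cover.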
