Hierarchical Approaches for Reinforcement Learning in Parameterized Action Space


Oct 23, 2018

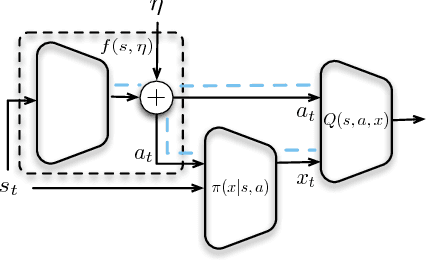

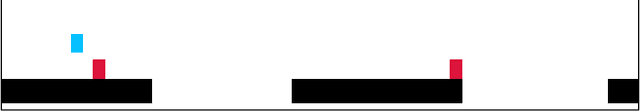

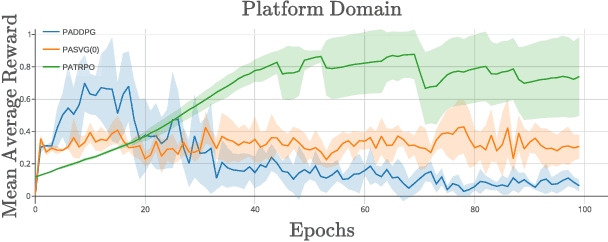

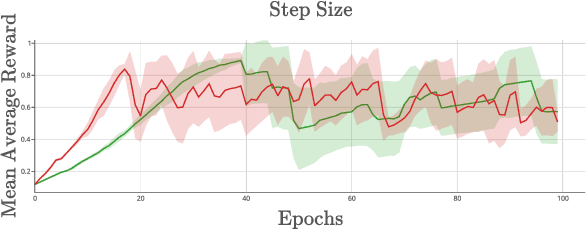

We explore deep reinforcement learning in parameterized action spaces, where each discrete action is accompanied by continuous parameters. Specifically, we investigate how to achieve sample-efficient end-to-end training in these tasks. We propose a new compact architecture for such tasks in which the parameter policy is conditioned on the output of the discrete action policy. We also propose two new methods, based on the state-of-the-art algorithms Trust Region Policy Optimization (TRPO) and Stochastic Value Gradients (SVG), to train this architecture. We demonstrate that these methods outperform the state-of-the-art method, Parameterized Action DDPG, on test domains.
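To make the architectural idea in the abstract concrete, here is a minimal NumPy sketch of a policy whose continuous-parameter head is conditioned on the discrete action head. It is an illustration under stated assumptions, not the authors' implementation: the class name `HierarchicalPolicy`, the linear layers, the one-hot conditioning on the sampled action, and the Gaussian parameter distribution are all hypothetical choices, and no TRPO or SVG training step is shown.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - logits.max()
    exp = np.exp(shifted)
    return exp / exp.sum()


class HierarchicalPolicy:
    """Hypothetical two-level policy: discrete action first, then its parameters."""

    def __init__(self, state_dim, n_actions, param_dim, seed=0):
        self.rng = np.random.default_rng(seed)
        self.n_actions = n_actions
        # Assumption: single linear layers stand in for the real networks.
        self.W_disc = self.rng.normal(scale=0.1, size=(n_actions, state_dim))
        self.W_param = self.rng.normal(
            scale=0.1, size=(param_dim, state_dim + n_actions)
        )
        self.log_std = np.zeros(param_dim)

    def act(self, state):
        # Level 1: categorical distribution over discrete actions.
        probs = softmax(self.W_disc @ state)
        action = self.rng.choice(self.n_actions, p=probs)

        # Level 2: the parameter head sees the state AND the discrete choice
        # (assumption: the choice is fed in as a one-hot vector).
        one_hot = np.eye(self.n_actions)[action]
        mean = self.W_param @ np.concatenate([state, one_hot])
        noise = self.rng.standard_normal(mean.shape)
        params = mean + np.exp(self.log_std) * noise
        return action, params, probs


policy = HierarchicalPolicy(state_dim=4, n_actions=3, param_dim=2)
action, params, probs = policy.act(np.ones(4))
```

Because the parameter head takes the discrete choice as input, a single shared head can produce parameters for every discrete action, which is one plausible reading of "compact" here. A sampled one-hot is not differentiable, so gradient-based training through the discrete choice would need something more than this sketch provides.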

* Accepted at the AAAI 2018 Spring Symposium
