Head Pose Estimation and 3D Neural Surface Reconstruction via Monocular Camera in situ for Navigation and Safe Insertion into Natural Openings

Jun 18, 2024

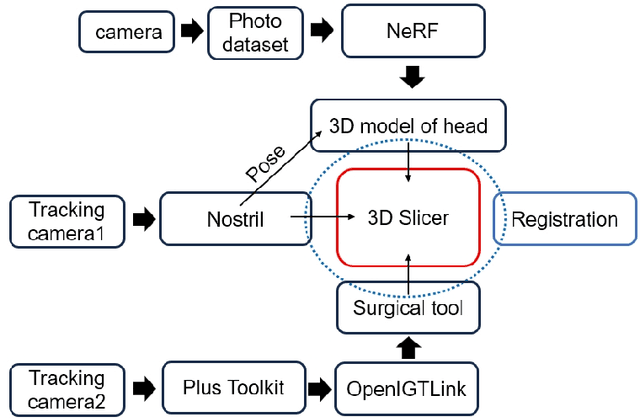

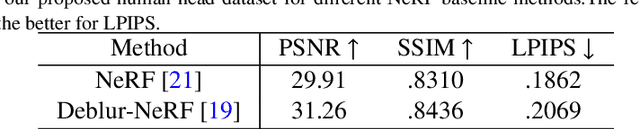

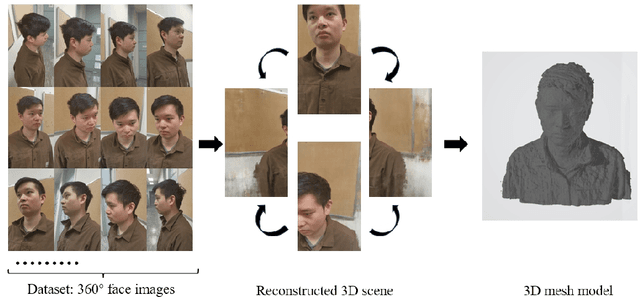

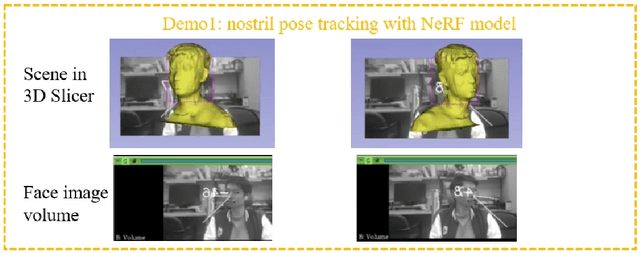

As simulation becomes more important in medical care and intervention, there is a growing need for a simple, low-cost platform that supports personalized diagnosis and treatment. 3D Slicer provides not only medical image analysis and visualization but also surgical navigation and surgical planning. In this paper, we use 3D Slicer as the base platform and monocular cameras as sensors. We then use the neural radiance fields (NeRF) algorithm to reconstruct a 3D model of the human head. We compared the accuracy of the NeRF algorithm in generating 3D human head scenes and used the Marching Cubes algorithm to generate the corresponding 3D mesh models. The individual's head pose, obtained through single-camera vision, is transmitted in real time to the scene created in 3D Slicer. The demonstrations presented in this paper include real-time synchronization between the detected head posture and the transformation of the human head model in the 3D Slicer scene. We also tested a scene in which a tool, marked with an ArUco marker and tracked by a single camera, points synchronously at the head as its posture is transformed in real time. These demos indicate that our methodology can provide a feasible real-time simulation platform for nasopharyngeal swab collection or intubation.
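To make the pose-synchronization idea concrete, the following is a minimal sketch of how a camera-frame head pose and a camera-frame tool pose could be turned into 4x4 homogeneous transforms, and how the tool pose could be expressed relative to the head. It is not the authors' implementation. It assumes each pose arrives as an axis-angle rotation vector plus a translation (the convention commonly used for marker and head pose estimates), and that translations are in millimetres; the function names are hypothetical.

```python
import math

def rotation_vector_to_matrix(rvec):
    """Axis-angle rotation vector -> 3x3 rotation matrix (Rodrigues' formula)."""
    rx, ry, rz = rvec
    theta = math.sqrt(rx * rx + ry * ry + rz * rz)
    if theta < 1e-12:  # no rotation
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]

def pose_to_homogeneous(rvec, tvec):
    """Build a 4x4 rigid transform from a rotation vector and a translation."""
    R = rotation_vector_to_matrix(rvec)
    return [R[i] + [float(tvec[i])] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def invert_rigid(T):
    """Invert a rigid transform: [R | t]^-1 = [R^T | -R^T t]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    ti = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [ti[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def matmul4(A, B):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def tool_in_head_frame(T_cam_head, T_cam_tool):
    """Express the tool pose relative to the head: T_head_tool = T_cam_head^-1 * T_cam_tool."""
    return matmul4(invert_rigid(T_cam_head), T_cam_tool)

# Hypothetical example: head 500 mm in front of the camera, turned 90 degrees
# about the camera z-axis; tool 10 mm to the side of the head along camera x.
T_cam_head = pose_to_homogeneous((0.0, 0.0, math.pi / 2), (0.0, 0.0, 500.0))
T_cam_tool = pose_to_homogeneous((0.0, 0.0, 0.0), (10.0, 0.0, 500.0))
T_head_tool = tool_in_head_frame(T_cam_head, T_cam_tool)
```

A matrix of this form is the kind of value a linear transform node in a scene graph typically holds, so updating it every frame is one plausible way to keep a head model and a tracked tool aligned with the camera observations.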
