Generative Adversarial Method Based on Neural Tangent Kernels


Apr 11, 2022

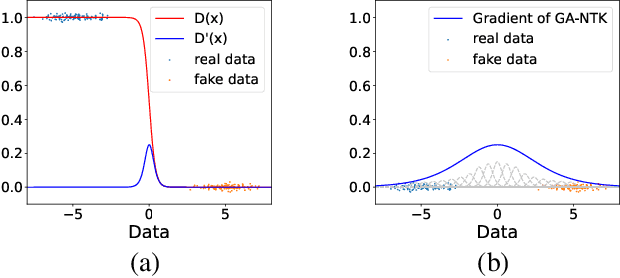

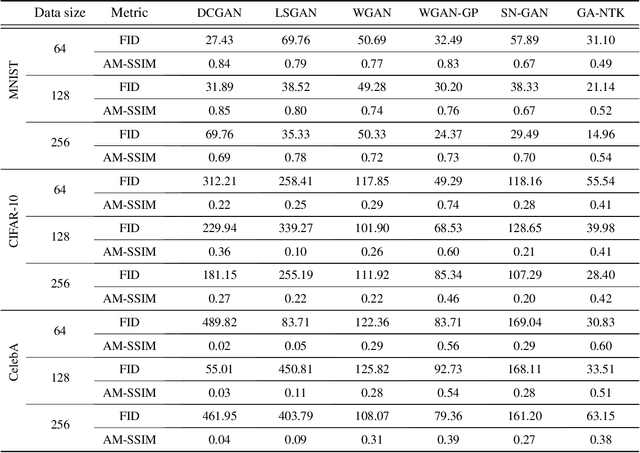

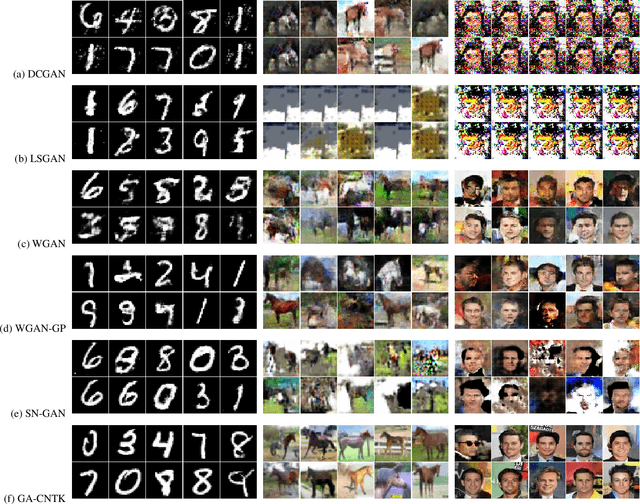

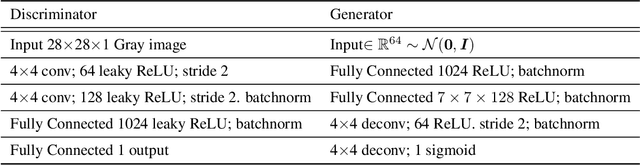

The recent development of generative adversarial networks (GANs) has driven many computer vision applications. Despite their high synthesis quality, GANs are often hard to train: common issues include non-convergence, mode collapse, and vanishing gradients. Several workarounds exist, for example, enforcing Lipschitz continuity through regularization and adopting the Wasserstein distance. Although these methods partially solve the problems, we argue that the problems result from modeling the discriminator with deep neural networks. In this paper, we build on a recently derived theory of deep neural networks, the Neural Tangent Kernel (NTK), and propose a new generative algorithm called generative adversarial NTK (GA-NTK). GA-NTK models the discriminator as a Gaussian process (GP). With the help of NTK theory, the training dynamics of GA-NTK can be described by a closed-form formula. Because data are synthesized using this closed-form formula, the objective simplifies to a single-level adversarial optimization problem. We conduct extensive experiments on real-world datasets, and the results show that GA-NTK can generate images comparable to those produced by GANs while being much easier to train under various conditions. We also study the current limitations of GA-NTK and propose some workarounds to make GA-NTK more practical.
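To make the idea of a closed-form discriminator concrete, the following is a minimal sketch, not the authors' implementation. It assumes a discriminator trained by gradient flow on a mean-squared-error loss, for which standard NTK results give the training-set outputs at time `t` as `(I - exp(-lr * t * K)) y`. The function names, the labels (1 for real, 0 for generated), the loss form, and the use of an RBF kernel in place of a true NTK are all illustrative assumptions.

```python
import numpy as np


def rbf_kernel(a, b, gamma=0.5):
    # ASSUMPTION: an RBF kernel stands in for the NTK purely to keep the
    # sketch dependency-free; the paper uses a neural tangent kernel.
    sq = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-gamma * np.maximum(sq, 0.0))


def discriminator_outputs(x_real, z_fake, t=10.0, lr=1.0):
    """Closed-form discriminator outputs on its own training set.

    Under gradient flow on an MSE loss, the outputs at time t are
    (I - exp(-lr * t * K)) y, where K is the kernel matrix of all points.
    """
    points = np.vstack([x_real, z_fake])
    # Hypothetical labelling: 1 for real samples, 0 for generated samples.
    y = np.concatenate([np.ones(len(x_real)), np.zeros(len(z_fake))])
    k = rbf_kernel(points, points)
    # The matrix exponential of a symmetric PSD matrix via eigendecomposition.
    evals, evecs = np.linalg.eigh(k)
    evals = np.clip(evals, 0.0, None)  # guard against tiny negative round-off
    decay = np.exp(-lr * t * evals)
    return evecs @ ((1.0 - decay) * (evecs.T @ y))


def generator_loss(x_real, z_fake, t=10.0, lr=1.0):
    # Single-level objective (assumed form): the generated points z_fake are
    # scored by how far the closed-form discriminator is from calling
    # everything "real". No inner training loop is needed.
    d = discriminator_outputs(x_real, z_fake, t, lr)
    return float(np.mean((1.0 - d) ** 2))


x_real = np.array([[0.0, 0.0], [1.0, 0.0]])
z_far = np.array([[5.0, 5.0], [6.0, 5.0]])   # easy for the discriminator to separate
z_near = x_real + 1e-3                        # almost indistinguishable from real data
loss_far = generator_loss(x_real, z_far)
loss_near = generator_loss(x_real, z_near)
```

In this toy setting, `loss_near` is smaller than `loss_far`, so minimizing `generator_loss` over `z_fake` alone (for example with any gradient-based optimizer) pushes the generated points toward the real data without alternating discriminator and generator updates.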
