Free-body Gesture Tracking and Augmented Reality Improvisation for Floor and Aerial Dance

Sep 15, 2015

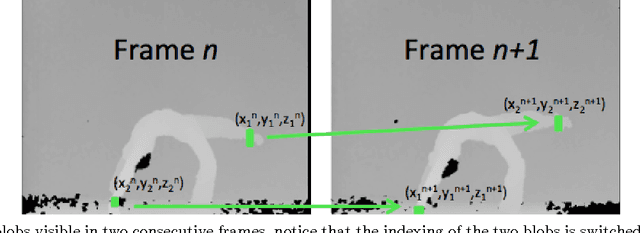

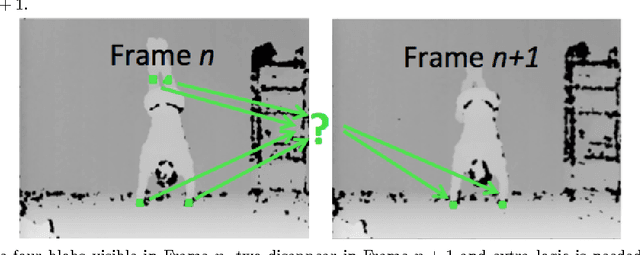

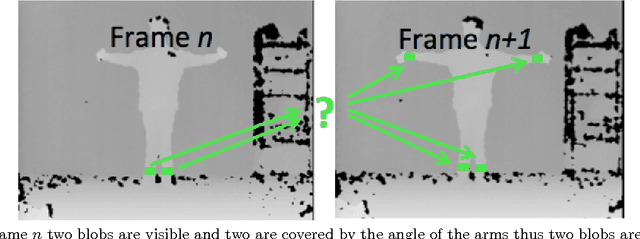

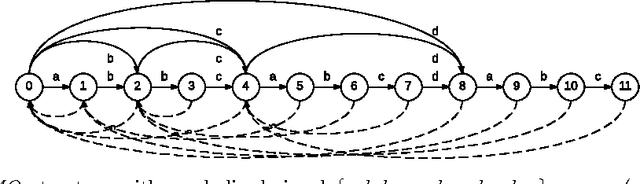

This paper describes an updated interactive performance system for floor and aerial dance that controls visual and sonic aspects of the presentation via a depth-sensing camera (MS Kinect). To detect, measure, and track free movement in space, the system performs 3-degree-of-freedom (3-DOF) tracking, both on the ground and in the air, using IR markers; a method that adds multi-target tracking is described in detail. The paper also describes an improved gesture tracking and recognition system called Action Graph (AG). Action Graph is constructed efficiently and incrementally from a single long sequence of movement features and automatically captures repeated sub-segments of the movement from start to finish, with no manual interaction needed; other advanced capabilities are discussed as well. With this new gesture model, an entire choreographed piece can be unified by dynamically tracking and recognizing gestures and sub-portions of the piece. This gives the performer the freedom to improvise on a set of recorded gestures or portions of the choreography and have the system respond dynamically to the performer within a set of related rehearsed actions, an ability that has not been seen in any other system to date.
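The abstract does not say how Action Graph is built. As an illustration only, one well-known structure that is constructed incrementally from a single sequence and exposes repeated sub-segments is the factor oracle; the sketch below uses it as a stand-in and is not claimed to be the authors' method. It assumes the movement features have already been quantized into discrete labels, and the names `build_factor_oracle` and `repeated_states` are hypothetical.

```python
def build_factor_oracle(seq):
    """Build a factor oracle online, one symbol at a time.

    Assumption: `seq` holds discrete, hashable labels (e.g. quantized
    movement features). Returns (trans, sfx), where trans[i] maps a
    symbol to a target state and sfx[i] is the suffix link of state i.
    """
    trans = [{}]   # state 0 is the start state
    sfx = [-1]     # the start state has no suffix link
    for i, sym in enumerate(seq):
        new = i + 1
        trans.append({})
        sfx.append(0)
        trans[i][sym] = new          # forward link to the new state
        k = sfx[i]
        # Walk back along suffix links, adding shortcut transitions
        # until a state that already has a transition on `sym`.
        while k > -1 and sym not in trans[k]:
            trans[k][sym] = new
            k = sfx[k]
        sfx[new] = 0 if k == -1 else trans[k][sym]
    return trans, sfx


def repeated_states(sfx):
    """States whose suffix link points to an earlier non-start state,
    i.e. positions where previously seen material recurs."""
    return [i for i, s in enumerate(sfx) if s > 0]
```

For a toy label sequence such as `list("abab")`, the suffix links of the last two states point back to the first occurrence of `a` and `ab`, which is how repetition is surfaced without manual segmentation.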
