Faster Projection-Free Augmented Lagrangian Methods via Weak Proximal Oracle

Oct 25, 2022

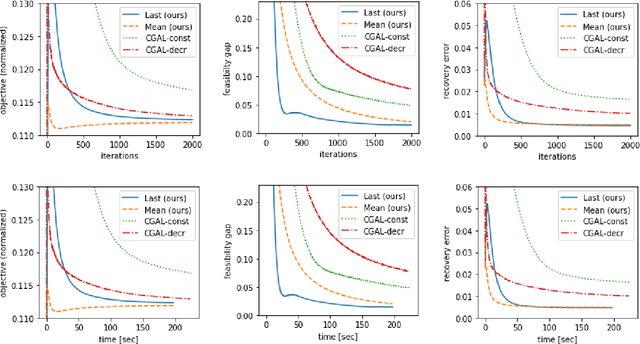

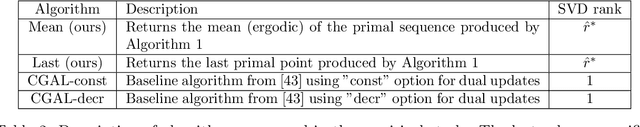

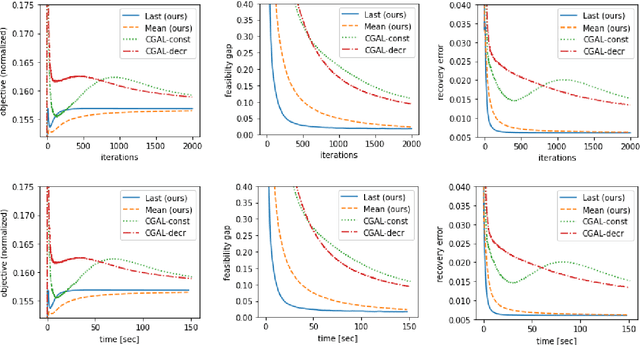

This paper considers a convex composite optimization problem with affine constraints. This class includes minimizing a smooth convex objective function over the intersection of (simple) convex sets, or regularized with multiple (simple) functions. Motivated by high-dimensional applications in which exact projection/proximal computations are not tractable, we propose a *projection-free* augmented Lagrangian-based method, in which primal updates are carried out using a *weak proximal oracle* (WPO). In an earlier work, WPO was shown to be more powerful than the standard *linear minimization oracle* (LMO) that underlies conditional gradient-based methods (also known as Frank-Wolfe methods). Moreover, WPO is computationally tractable for many high-dimensional problems of interest, including those motivated by recovery of low-rank matrices and tensors, and optimization over polytopes that admit efficient LMOs. The main result of this paper shows that, under a certain curvature assumption (which is weaker than strong convexity), our WPO-based algorithm achieves an ergodic convergence rate of $O(1/T)$ for both the objective residual and the feasibility gap. To the best of our knowledge, this result improves upon the $O(1/\sqrt{T})$ rate of existing LMO-based projection-free methods for this class of problems. Empirical experiments on a low-rank and sparse covariance matrix estimation task and on the Max Cut semidefinite relaxation demonstrate the superiority of our method over state-of-the-art LMO-based Lagrangian methods.
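To make the oracle terminology concrete, the following is a minimal sketch of the standard LMO for a nuclear-norm ball, the kind of constraint that arises in low-rank matrix recovery. It is not the paper's WPO or its algorithm; the function name `lmo_nuclear_ball`, the radius `tau`, and the random test matrix are illustrative assumptions. The point is that the LMO only needs the leading singular pair of the gradient and returns a rank-one matrix, whereas an exact projection onto the same ball generally requires a full SVD.

```python
import numpy as np

def lmo_nuclear_ball(grad, tau):
    """Return a minimizer of <grad, S> over {S : ||S||_* <= tau}.

    Hypothetical helper for illustration. A full SVD is used here for
    clarity; only the leading singular pair is actually needed.
    """
    u, s, vt = np.linalg.svd(grad, full_matrices=False)
    # The minimizer is the rank-one matrix -tau * u_1 v_1^T.
    return -tau * np.outer(u[:, 0], vt[0, :])

# Illustrative data (an assumption, not taken from the paper).
rng = np.random.default_rng(0)
G = rng.standard_normal((5, 4))
tau = 2.0

S = lmo_nuclear_ball(G, tau)
lmo_value = float(np.sum(G * S))                      # <G, S>
top_sigma = float(np.linalg.svd(G, compute_uv=False)[0])
```

Here `lmo_value` equals `-tau * top_sigma`, the smallest value a linear function with gradient `G` can take on the ball, and `S` lies on the boundary of the ball with rank one.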
