Factor Graph-Based Smoothing Without Matrix Inversion for Highly Precise Localization


Sep 22, 2020

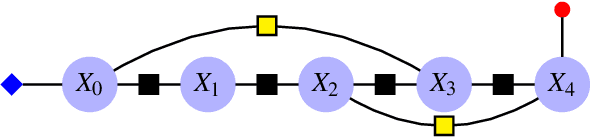

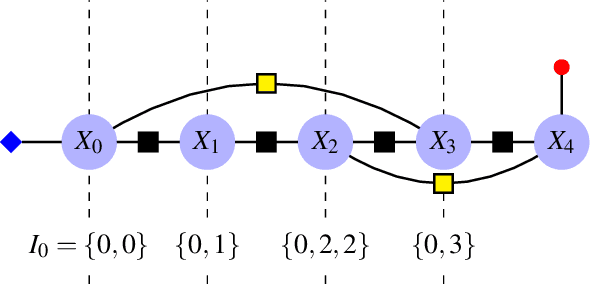

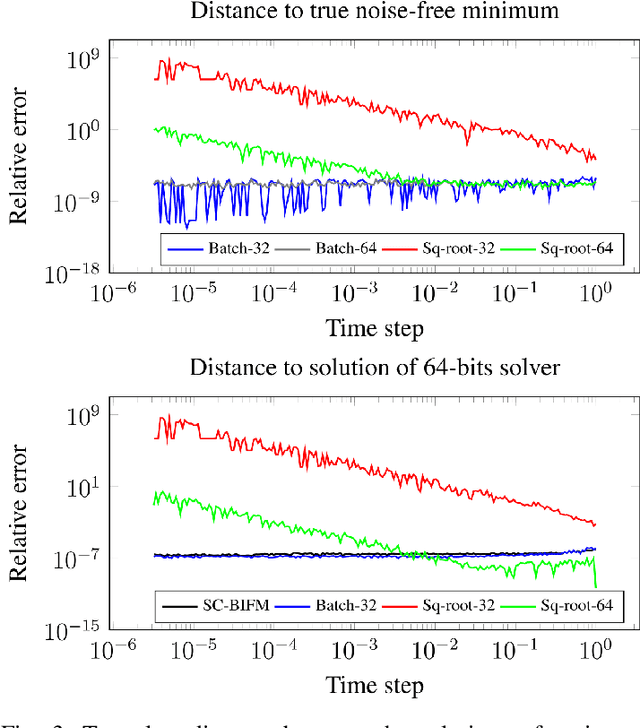

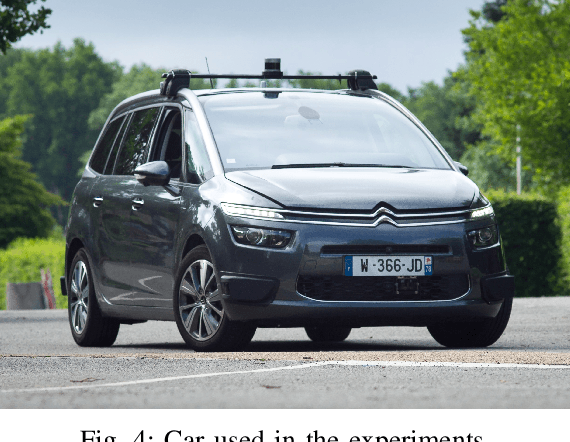

We consider the problem of localizing a manned, semi-autonomous, or autonomous vehicle in its environment using information from the vehicle's sensors, a problem known as navigation or simultaneous localization and mapping (SLAM), depending on the context. To infer knowledge from sensor measurements while drawing on a priori knowledge of the vehicle's dynamics, modern approaches solve an optimization problem to compute the most likely trajectory given all past observations, an approach known as smoothing. Improving smoothing solvers is an active field of research in the SLAM community. Most work focuses on reducing the computational load by inverting the underlying linear system while preserving its sparsity. The present paper raises an issue which, to the knowledge of the authors, has not yet been addressed: standard smoothing solvers explicitly use the inverse of the sensor noise covariance matrices. This means that the parameters reflecting the noise magnitude must be sufficiently large for the smoother to function properly. When these matrices are close to singular, which is the case with modern high-precision inertial measurement units (IMUs), numerical issues necessarily arise, especially in the 32-bit implementations demanded by most industrial aerospace applications. We discuss these issues and propose a solution that builds upon the Kalman filter to improve smoothing algorithms. We then leverage the results to devise a localization algorithm based on the fusion of IMU and vision sensors. Successful real-world experiments using a car equipped with a tactical-grade, high-performance IMU and a LiDAR illustrate the relevance of the approach to the field of autonomous vehicles.
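The numerical issue described in the abstract can be illustrated with a toy scalar example. The sketch below is not the paper's algorithm: it only contrasts, in 32-bit arithmetic, a fusion step that explicitly inverts the noise variance with a Kalman-style step that uses the variance directly. The function names and the deliberately extreme variance `1e-40` are assumptions chosen for illustration.

```python
import numpy as np

F32 = np.float32  # emulate a 32-bit implementation


def information_form_update(x_prior, p_prior, y, r):
    """Fuse a scalar prior and a direct measurement via inverse variances.

    Hypothetical helper: mirrors the weighted least-squares form, which
    needs 1/r explicitly.
    """
    x_prior, p_prior, y, r = map(F32, (x_prior, p_prior, y, r))
    with np.errstate(all="ignore"):  # silence overflow/invalid warnings
        w_prior = F32(1.0) / p_prior  # prior information
        w_meas = F32(1.0) / r         # overflows to inf when r is tiny
        return (w_prior * x_prior + w_meas * y) / (w_prior + w_meas)


def kalman_form_update(x_prior, p_prior, y, r):
    """Same fusion written with a Kalman gain, which never inverts r."""
    x_prior, p_prior, y, r = map(F32, (x_prior, p_prior, y, r))
    gain = p_prior / (p_prior + r)  # stays well defined as r -> 0
    return x_prior + gain * (y - x_prior)


if __name__ == "__main__":
    # Moderate noise: both forms agree up to rounding.
    print(information_form_update(0.0, 1.0, 2.0, 0.01))
    print(kalman_form_update(0.0, 1.0, 2.0, 0.01))
    # Near-singular noise: the information form breaks down,
    # while the Kalman form simply trusts the measurement.
    print(information_form_update(0.0, 1.0, 2.0, 1e-40))
    print(kalman_form_update(0.0, 1.0, 2.0, 1e-40))
```

With moderate noise the two forms are algebraically equivalent and agree numerically. As the variance shrinks, the inverse variance exceeds the float32 range and the information form no longer returns a finite estimate, whereas the gain form remains usable. The solution proposed in the paper is more involved, but it builds on this same property of the Kalman filter.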
