Extreme sparsity gives rise to functional specialization


Jun 04, 2021

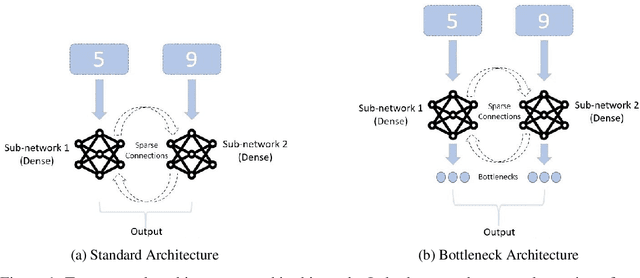

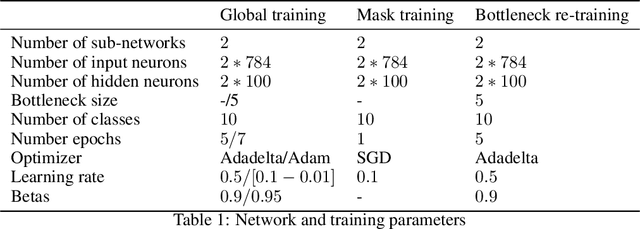

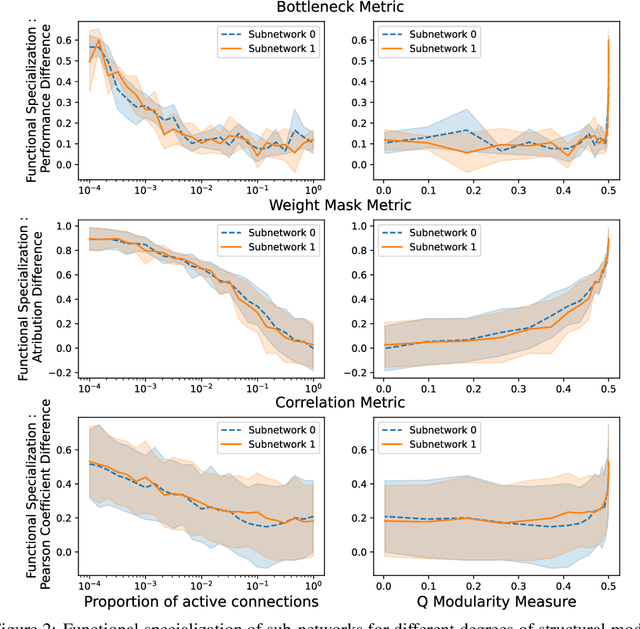

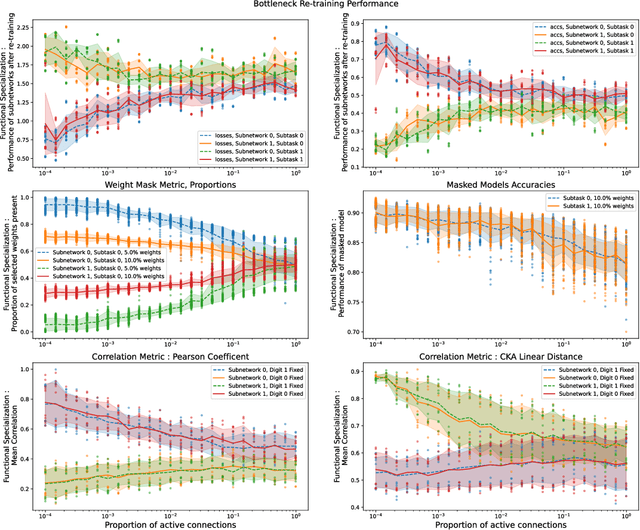

Modularity of neural networks -- both biological and artificial -- can be thought of either structurally or functionally, and the relationship between the two is an open question. We show that enforcing structural modularity via sparse connectivity between two dense sub-networks, which need to communicate to solve the task, leads to functional specialization of the sub-networks, but only at extreme levels of sparsity. With even a moderate number of interconnections, the sub-networks become functionally entangled. Defining functional specialization is itself a challenging problem without a universally agreed-upon solution. To address this, we designed three different measures of specialization (based on weight masks, retraining, and correlation) and found them to agree qualitatively. Our results have implications for both neuroscience and machine learning. For neuroscience, they show that we cannot conclude that there is functional modularity simply by observing moderate levels of structural modularity: knowing the brain's connectome is not sufficient for understanding how it breaks down into functional modules. For machine learning, using structure to promote functional modularity -- which may be important for robustness and generalization -- may require extremely narrow bottlenecks between modules.
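To make the structural setup concrete, here is a minimal sketch of a connectivity mask for two densely connected sub-networks joined by a sparse set of interconnections. It is an illustration under stated assumptions, not the paper's implementation: the function name `cross_module_mask`, the parameterization of sparsity as the fraction of removed cross-module connections, and the choice to always keep at least one interconnection are all hypothetical.

```python
import random


def cross_module_mask(n_units, sparsity, seed=0):
    """Binary mask for two modules of n_units each (hypothetical helper).

    Within-module blocks are fully connected; each cross-module block keeps
    only a fraction (1 - sparsity) of its possible connections.
    """
    rng = random.Random(seed)
    size = 2 * n_units
    n_cross = n_units * n_units

    # Assumption: keep at least one interconnection per direction so the
    # two modules can still communicate at the most extreme sparsity.
    n_keep = max(1, round((1.0 - sparsity) * n_cross))

    # Start with dense within-module blocks and empty cross-module blocks.
    mask = [
        [1 if (i < n_units) == (j < n_units) else 0 for j in range(size)]
        for i in range(size)
    ]

    # Randomly place the retained connections in each cross-module block.
    for row_off, col_off in ((0, n_units), (n_units, 0)):
        for flat in rng.sample(range(n_cross), n_keep):
            i, j = divmod(flat, n_units)
            mask[row_off + i][col_off + j] = 1

    return mask
```

In a training loop, such a mask would typically be multiplied elementwise with the weight matrix after every update, so that pruned interconnections stay at zero; sweeping `sparsity` toward 1.0 then narrows the bottleneck between the modules.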
