Extend natural neighbor: a novel classification method with self-adaptive neighborhood parameters in different stages


Dec 07, 2016

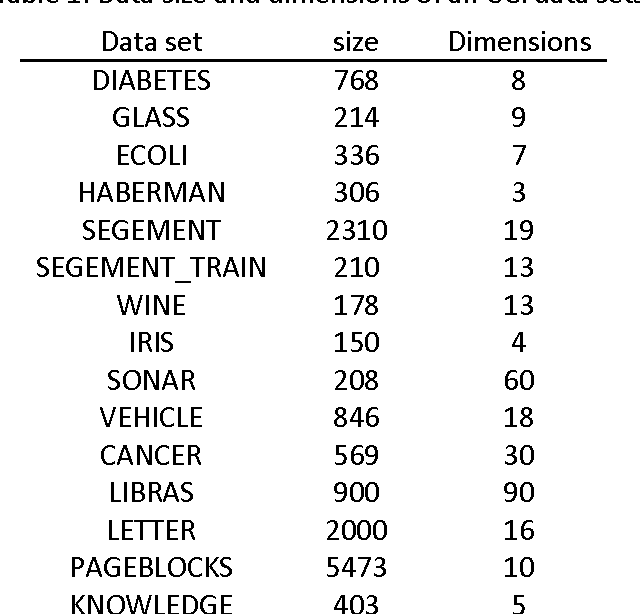

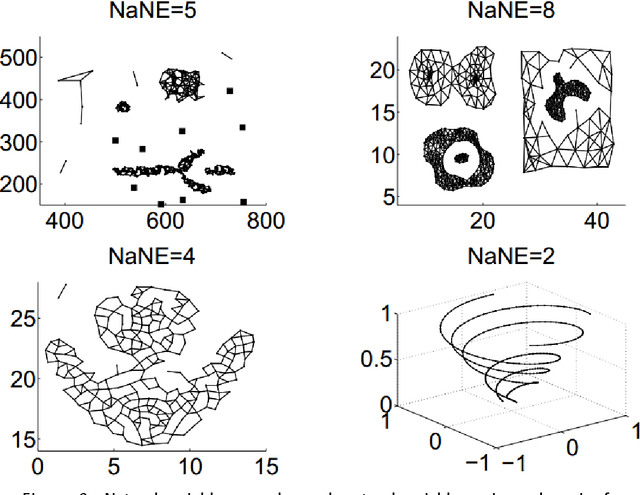

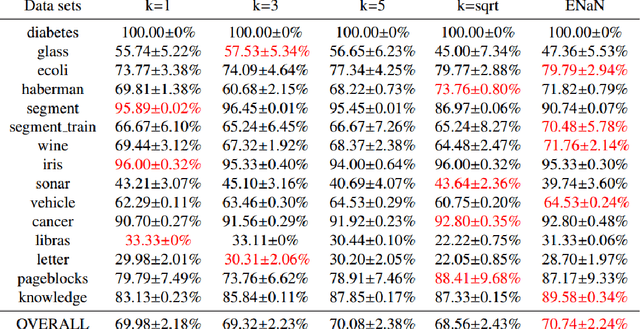

Various k-nearest neighbor (KNN) based classification methods underlie many well-established, high-performance pattern-recognition techniques, but all of them are sensitive to the choice of their parameters. Essentially, the challenge is to detect the neighborhood structure of varied data sets with no prior knowledge of the data characteristics. This article introduces a new supervised classification method, the extend natural neighbor (ENaN) method, and shows that it provides better classification results without the neighborhood parameter being chosen manually. Unlike the original KNN-based methods, which need k fixed in advance, the ENaN method predicts a different k in different stages. The ENaN method can therefore learn more from flexible neighbor information in both the training stage and the testing stage, and provide a better classification result.
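The abstract does not spell out how ENaN selects k, so the following is only a rough illustration of the general idea of deriving a neighborhood size from the data instead of fixing it by hand. It is a minimal sketch of one possible stopping rule: grow k until every point appears in some other point's k-nearest-neighbor list, or until the number of points that nobody has selected stops shrinking. The function name `adaptive_k`, the `max_k` cap, and the stopping rule itself are assumptions made for this example, not the paper's algorithm.

```python
import numpy as np

def adaptive_k(points, max_k=None):
    """Return a data-driven k (hypothetical helper, not the paper's ENaN).

    k grows one step at a time. At step k every point "votes" for its
    k-th nearest neighbor. The search stops when no point is left
    without votes, or when the number of such points stops changing.
    """
    pts = np.asarray(points, dtype=float)
    n = len(pts)
    if max_k is None:
        max_k = n - 1

    # Pairwise Euclidean distances; a point is never its own neighbor.
    dists = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)
    order = np.argsort(dists, axis=1, kind="stable")

    votes = np.zeros(n, dtype=int)   # how often each point was selected
    prev_unselected = n
    for k in range(1, max_k + 1):
        # Column k-1 holds every point's k-th nearest neighbor.
        np.add.at(votes, order[:, k - 1], 1)
        unselected = int(np.sum(votes == 0))
        if unselected == 0 or unselected == prev_unselected:
            return k
        prev_unselected = unselected
    return max_k

# Two tight pairs: every point is selected already at k = 1.
print(adaptive_k([[0.0], [1.0], [10.0], [11.0]]))
```

A rule of this kind lets dense, well-separated data settle on a small k while sparser data keep growing the neighborhood, which is the behavior a fixed, hand-picked k cannot provide.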
