Exploring Wasserstein Distance across Concept Embeddings for Ontology Matching


Jul 22, 2022

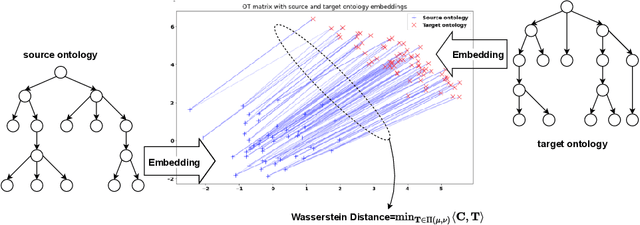

Measuring the distance between ontological elements is a fundamental component of any matching solution. String-based distance metrics, which rely on discrete symbol operations, are notorious for shallow syntactic matching. In this study, we explore the Wasserstein distance metric across ontology concept embeddings. The Wasserstein distance operates in a continuous space that can incorporate linguistic, structural, and logical information. In our exploratory study, we use a pre-trained word embedding system, fastText, to embed ontology element labels. We examine the effectiveness of the Wasserstein distance for measuring similarity between (blocks of) ontologies, discovering matchings between individual elements, and refining matchings by incorporating contextual information. Our experiments on the OAEI conference track and MSE benchmarks achieve competitive results compared to leading systems such as AML and LogMap. The results indicate a promising trajectory for applying optimal transport and the Wasserstein distance to improve embedding-based unsupervised ontology matching.
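To make the idea concrete, the following is a minimal sketch of a Wasserstein distance between two small sets of label embeddings, not the implementation used in the paper. It assumes equal-size sets with uniform weights, in which case an optimal transport plan is a one-to-one assignment, and it finds that assignment by brute force, which is only feasible for toy inputs. The 2-D vectors, the labels, and the function name `wasserstein_uniform` are hypothetical stand-ins for real fastText embeddings and a proper optimal transport solver.

```python
from itertools import permutations
from math import dist


def wasserstein_uniform(source, target):
    """Exact 1-Wasserstein distance between two equal-size point sets
    with uniform weights, using Euclidean ground cost.

    Returns (distance, matching), where matching[i] is the index of the
    target point assigned to source point i.
    """
    if len(source) != len(target):
        raise ValueError("this sketch assumes equal-size sets")
    n = len(source)
    # Pairwise ground cost between every source and target embedding.
    cost = [[dist(s, t) for t in target] for s in source]
    # With uniform weights and equal sizes, an optimal plan is a permutation,
    # so brute-force search over permutations is exact (toy sizes only).
    best = min(
        permutations(range(n)),
        key=lambda p: sum(cost[i][p[i]] for i in range(n)),
    )
    total = sum(cost[i][best[i]] for i in range(n))
    return total / n, list(best)


# Hypothetical 2-D "embeddings" for labels from two toy ontologies.
onto_a = {"Paper": (0.0, 1.0), "Author": (1.0, 0.0), "Review": (1.0, 1.0)}
onto_b = {"Writer": (0.9, 0.1), "Article": (0.1, 1.0), "Assessment": (1.0, 0.9)}

distance, matching = wasserstein_uniform(
    list(onto_a.values()), list(onto_b.values())
)

labels_a, labels_b = list(onto_a), list(onto_b)
for i, j in enumerate(matching):
    print(f"{labels_a[i]} -> {labels_b[j]}")
print(f"Wasserstein distance: {distance:.4f}")
```

The single distance value serves as a similarity score between the two label sets, while the assignment behind it suggests candidate element-level matchings. At realistic ontology sizes the brute-force search would need to be replaced by a dedicated optimal transport solver.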
