Exploring Advances in Transformers and CNN for Skin Lesion Diagnosis on Small Datasets

May 30, 2022

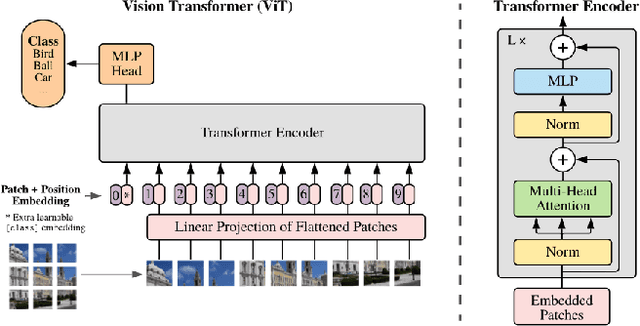

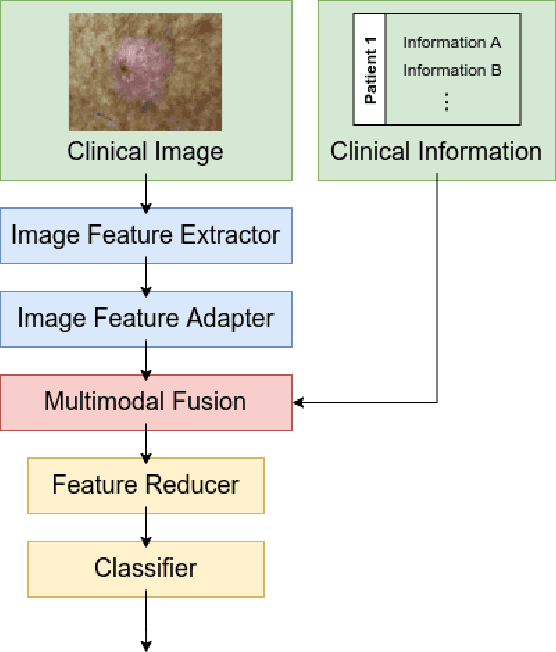

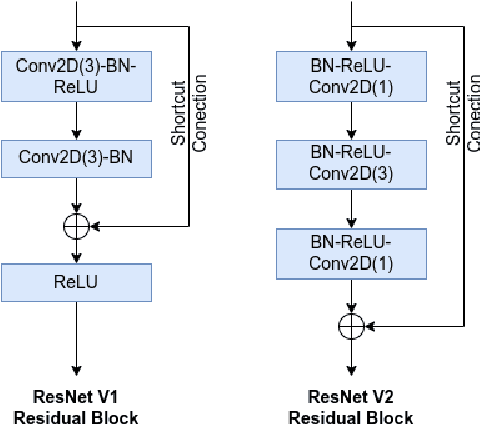

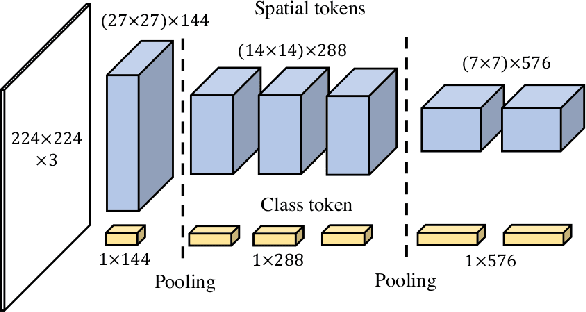

Skin cancer is one of the most common types of cancer in the world. Several computer-aided diagnosis systems have been proposed for skin lesion diagnosis, most of them based on deep convolutional neural networks. However, recent advances in computer vision, notably Transformer-based networks, have achieved state-of-the-art results in many tasks. We explore and evaluate advances in computer vision architectures, training methods, and multimodal feature fusion for the skin lesion diagnosis task. Experiments show that PiT ($0.800 \pm 0.006$), CoaT ($0.780 \pm 0.024$), and ViT ($0.771 \pm 0.018$) backbone models with MetaBlock fusion achieve state-of-the-art balanced accuracy on the PAD-UFES-20 dataset.
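
For readers unfamiliar with the metric, balanced accuracy is the mean of per-class recall, which keeps a dominant class from inflating the score on an imbalanced dataset. The sketch below is a minimal illustration, not the authors' evaluation code: the labels and fold scores are invented toy values, and reading the reported $\pm$ figures as a mean and sample standard deviation over repeated folds is an assumption.

```python
from collections import defaultdict
from statistics import mean, stdev


def balanced_accuracy(y_true, y_pred):
    """Return the mean of per-class recall over the classes in y_true."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    return mean(correct[c] / total[c] for c in total)


# Toy, invented labels: class 0 is the majority, class 1 the minority.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 0]

# Recall is 3/4 for class 0 and 1/2 for class 1, so the score is 0.625,
# lower than the plain accuracy of 4/6.
score = balanced_accuracy(y_true, y_pred)

# Hypothetical per-fold scores, summarized as mean and sample std
# (assumed convention; the abstract does not state which is used).
fold_scores = [0.5, 0.75, 1.0]
summary = (mean(fold_scores), stdev(fold_scores))
```

With these toy inputs the minority class contributes as much to the score as the majority class, which is the property that makes the metric suitable for small, imbalanced datasets.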

* 7 pages
