Evaluating the fairness of fine-tuning strategies in self-supervised learning

Paper and Code

Oct 01, 2021

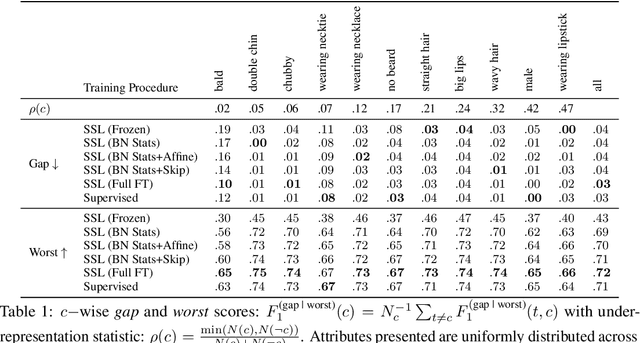

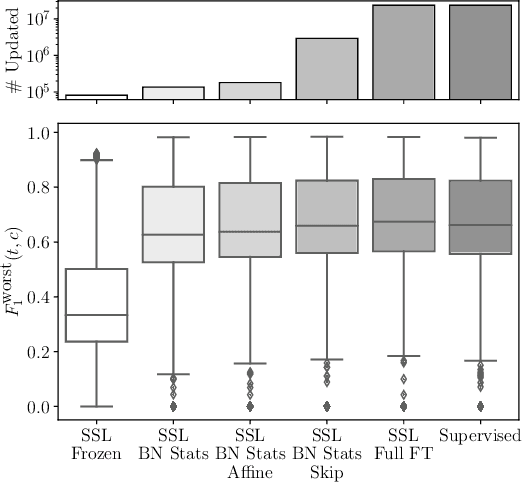

In this work we examine how fine-tuning impacts the fairness of contrastive Self-Supervised Learning (SSL) models. Our findings indicate that Batch Normalization (BN) statistics play a crucial role, and that updating only the BN statistics of a pre-trained SSL backbone improves its downstream fairness (36% worst subgroup, 25% mean subgroup gap). This procedure is competitive with supervised learning, while taking 4.4x less time to train and requiring only 0.35% as many parameters to be updated. Finally, inspired by recent work in supervised learning, we find that updating BN statistics and training residual skip connections (12.3% of the parameters) achieves parity with a fully fine-tuned model, while taking 1.33x less time to train.

* Accepted to BayLearn 2021

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge