Estimating Random Delays in Modbus Network Using Experiments and General Linear Regression Neural Networks with Genetic Algorithm Smoothing


Sep 21, 2015

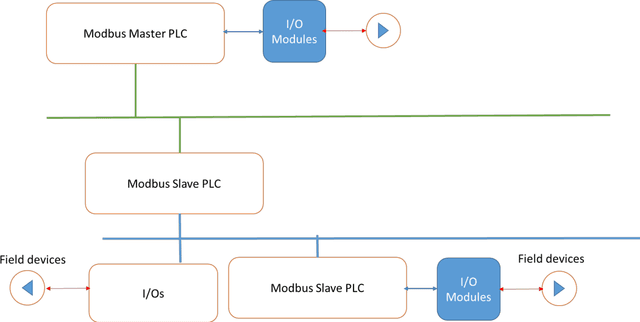

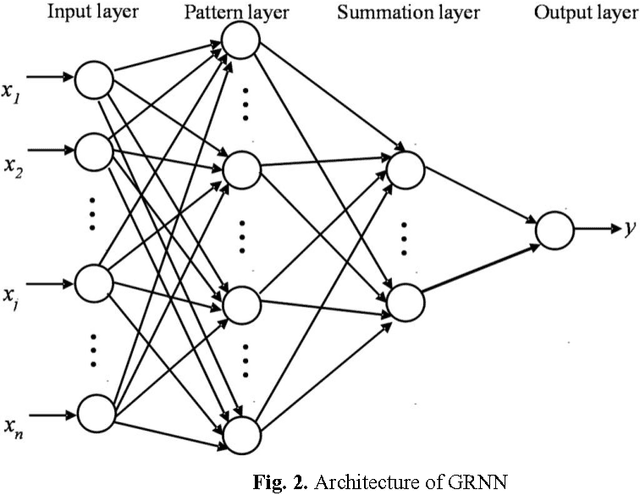

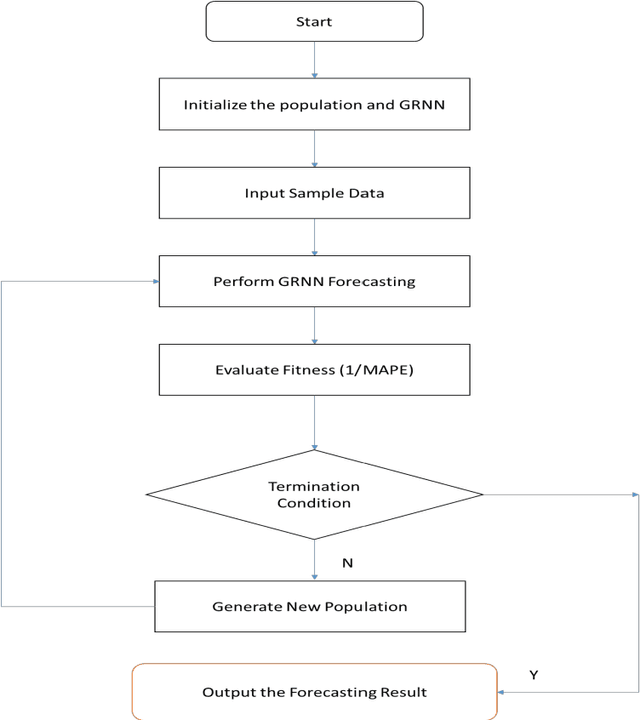

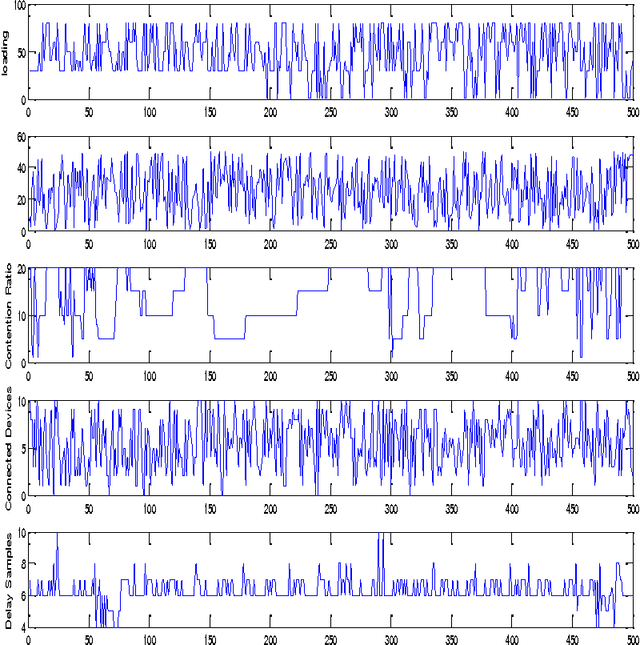

Time-varying delays adversely affect the performance of networked control systems (NCS) and, in the worst case, can destabilize the entire system. Modelling network delays is therefore important for designing NCS. However, modelling time-varying delays is challenging because they depend on multiple parameters such as length, contention, connected devices, protocol employed, and channel loading. Further, these parameters are inherently random, and the delays vary non-linearly with respect to time, which makes estimating random delays difficult. This investigation presents a methodology to model delays in NCS using experiments and a general regression neural network (GRNN), chosen for its ability to capture non-linear relationships. A genetic algorithm is used to compute the optimal smoothing parameter, with the objective of minimizing the mean absolute percentage error (MAPE). Our results illustrate that the resulting GRNN is able to predict the delays with less than 3% error. The proposed delay model gives a framework to design compensation schemes for NCS subjected to time-varying delays.
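The idea of tuning a GRNN smoothing parameter with a genetic algorithm to minimize MAPE can be illustrated with a minimal sketch. This is not the authors' implementation: the function names (`grnn_predict`, `mape`, `ga_optimize_sigma`), the GA operators (tournament selection, arithmetic crossover, Gaussian mutation, elitism), the search bounds, and the synthetic "load versus delay" data are all assumptions made for illustration. The GRNN is written in its standard Gaussian-kernel form, where each prediction is a weighted average of the training targets.

```python
import numpy as np

def grnn_predict(x_train, y_train, x_query, sigma):
    """GRNN estimate: Gaussian-kernel weighted average of the training targets."""
    # Squared Euclidean distance between every query point and training point.
    d2 = ((x_query[:, None, :] - x_train[None, :, :]) ** 2).sum(axis=2)
    w = np.exp(-d2 / (2.0 * sigma ** 2))
    # The small constant guards against division by zero if all weights underflow.
    return (w @ y_train) / (w.sum(axis=1) + 1e-12)

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (y_true must be non-zero)."""
    return 100.0 * np.mean(np.abs((y_true - y_pred) / y_true))

def ga_optimize_sigma(cost, bounds=(0.01, 2.0), pop_size=20, generations=30, seed=0):
    """Hypothetical real-coded GA that searches for the sigma minimizing `cost`."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    pop = rng.uniform(lo, hi, pop_size)
    best_sigma, best_cost = None, np.inf
    for _ in range(generations):
        costs = np.array([cost(s) for s in pop])
        i = costs.argmin()
        if costs[i] < best_cost:
            best_sigma, best_cost = pop[i], costs[i]
        # Tournament selection: the better of two random individuals becomes a parent.
        a = rng.integers(0, pop_size, pop_size)
        b = rng.integers(0, pop_size, pop_size)
        parents = np.where(costs[a] < costs[b], pop[a], pop[b])
        # Arithmetic crossover with a randomly shuffled partner.
        alpha = rng.uniform(0.0, 1.0, pop_size)
        children = alpha * parents + (1.0 - alpha) * rng.permutation(parents)
        # Gaussian mutation, then clip back into the search bounds.
        children += rng.normal(0.0, 0.05 * (hi - lo), pop_size)
        pop = np.clip(children, lo, hi)
        pop[0] = best_sigma  # elitism: carry the best individual forward
    return float(best_sigma), float(best_cost)

# Synthetic stand-in for measured delays (assumed data, not from the paper):
# a single input (e.g. normalized channel load) and a noisy non-linear delay.
rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, (200, 1))
y = 5.0 + 3.0 * np.sin(2.0 * np.pi * x[:, 0]) + rng.normal(0.0, 0.1, 200)
x_tr, y_tr, x_va, y_va = x[:150], y[:150], x[150:], y[150:]

sigma, err = ga_optimize_sigma(
    lambda s: mape(y_va, grnn_predict(x_tr, y_tr, x_va, s))
)
print(f"selected sigma = {sigma:.4f}, validation MAPE = {err:.2f}%")
```

The cost is evaluated on a held-out validation split rather than on the training data, because a GRNN fitted and scored on the same points would drive the smoothing parameter toward zero and simply memorize the samples.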
