Endmember Extraction Algorithms Fusing for Hyperspectral Unmixing

Feb 06, 2024

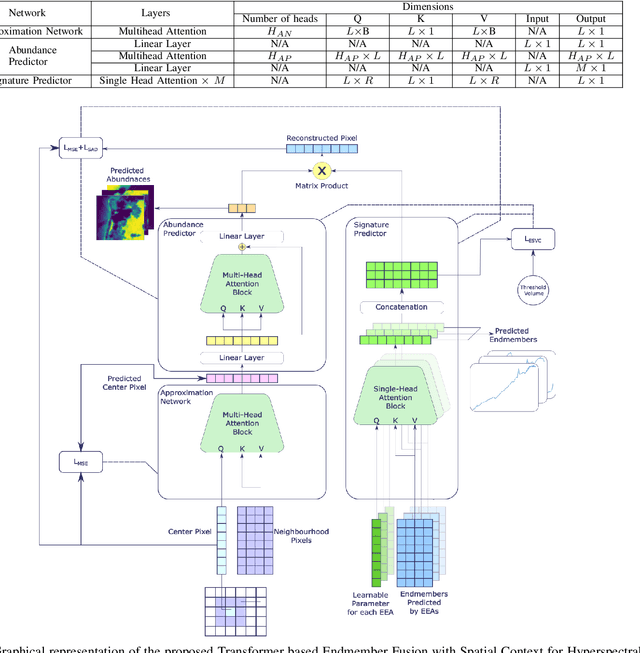

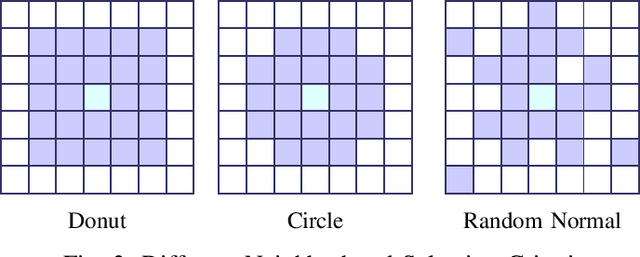

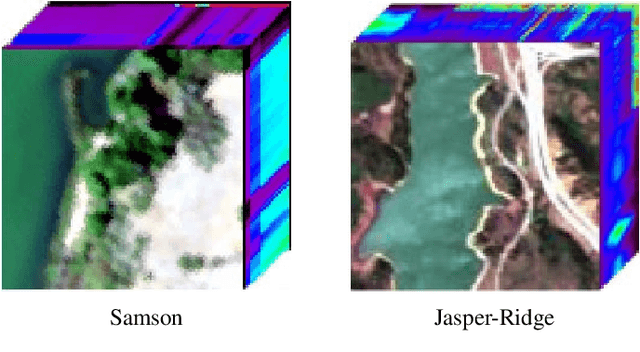

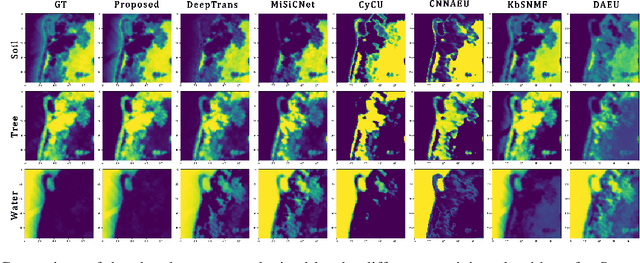

In recent years, transformer-based deep learning networks have gained popularity in hyperspectral (HS) unmixing applications due to their superior performance. The attention mechanism within transformers provides input-dependent weighting and enhances contextual awareness during training. Drawing inspiration from this, we propose a novel attention-based HS unmixing algorithm called Fusion. The algorithm fuses endmember signatures obtained from different endmember extraction algorithms, overcoming a limitation of classical HS unmixing approaches, which rely on a single Endmember Extraction Algorithm (EEA). The Fusion network incorporates an Approximation Network (AN), which introduces contextual awareness into abundance prediction by considering neighborhood pixels. Unlike Convolutional Neural Networks (CNNs), which are constrained by specific kernel shapes, the Fusion network allows an arbitrary configuration of the neighborhood. We compared the Fusion algorithm with state-of-the-art algorithms on two popular datasets. On both datasets, Fusion achieved the lowest Root Mean Square Error (RMSE) for abundance predictions and competitive Spectral Angle Distance (SAD) for the signatures associated with each endmember.
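The two evaluation metrics named above can be illustrated with a minimal sketch. It assumes the standard textbook definitions: RMSE as the square root of the mean squared difference between reference and predicted abundances, and SAD as the angle in radians between a reference and an estimated endmember signature. The function names `rmse` and `sad` and the flat-list inputs are illustrative choices, not taken from the paper's code.

```python
import math


def rmse(true_abund, pred_abund):
    """Root mean square error between two equal-length sequences of abundances."""
    if len(true_abund) != len(pred_abund) or len(true_abund) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_error = sum((t - p) ** 2 for t, p in zip(true_abund, pred_abund))
    return math.sqrt(squared_error / len(true_abund))


def sad(ref_signature, est_signature):
    """Spectral angle (radians) between two spectral signatures of equal length."""
    if len(ref_signature) != len(est_signature):
        raise ValueError("signatures must have the same number of bands")
    dot = sum(r * e for r, e in zip(ref_signature, est_signature))
    norm = (math.sqrt(sum(r * r for r in ref_signature))
            * math.sqrt(sum(e * e for e in est_signature)))
    if norm == 0:
        raise ValueError("signatures must be non-zero")
    # Clamp to [-1, 1] to guard against floating-point round-off before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.acos(cosine)
```

Under these definitions SAD depends only on the shape of a signature and not on its overall scale, so a uniformly brighter or darker estimate of the same spectrum scores an angle of approximately zero. RMSE, by contrast, is sensitive to the absolute values of the predicted abundances.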
