Effective General-Domain Data Inclusion for the Machine Translation Task by Vanilla Transformers

Sep 28, 2022

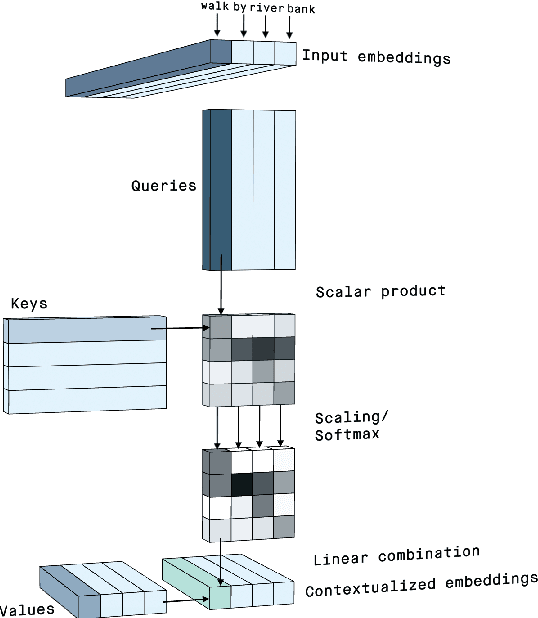

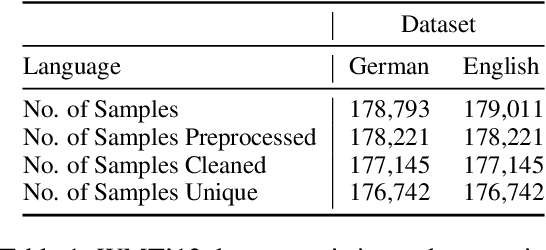

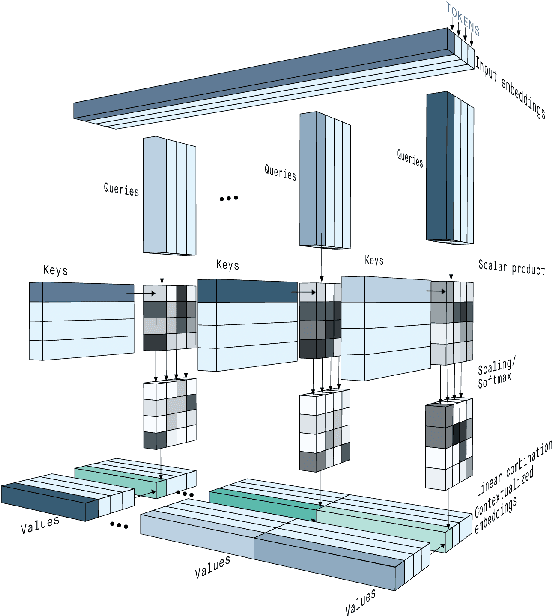

One of the vital breakthroughs in the history of machine translation is the development of the Transformer model. It is revolutionary not only for various translation tasks but also for a majority of other NLP tasks. In this paper, we aim at a Transformer-based system that translates a source sentence in German into its target counterpart in English. We perform the experiments on the news commentary German-English parallel sentences from the WMT'13 dataset. In addition, we investigate whether including additional general-domain training data from the IWSLT'16 dataset improves the performance of the Transformer model. We find that including the IWSLT'16 dataset in training yields a gain of 2 BLEU points on the test set of the WMT'13 dataset. A qualitative analysis examines how the use of general-domain data helps improve the quality of the produced translations.
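To make the reported gain concrete, the following is a minimal sketch of how a corpus-level BLEU score can be computed, so that a baseline system and a system trained with additional data can be compared on the same test set. It is not the evaluation script used in the paper: the function names `ngrams` and `corpus_bleu`, the whitespace tokenization, the single reference per sentence, and the absence of smoothing are all assumptions made for illustration.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of order n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Corpus-level BLEU on a 0-100 scale.

    Assumptions (illustrative, not taken from the paper): whitespace
    tokenization, exactly one reference per hypothesis, no smoothing.
    """
    matches = [0] * max_n  # clipped n-gram matches per order
    totals = [0] * max_n   # hypothesis n-gram counts per order
    hyp_len = ref_len = 0

    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clip each hypothesis n-gram count by its count in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())

    # Without smoothing, any order with zero matches gives a score of zero.
    if hyp_len == 0 or min(matches) == 0:
        return 0.0

    # Geometric mean of the modified n-gram precisions.
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty punishes hypotheses shorter than the references.
    brevity_penalty = 1.0 if hyp_len >= ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity_penalty * math.exp(log_precision)


if __name__ == "__main__":
    # Hypothetical sentences, not drawn from WMT'13 or IWSLT'16.
    references = ["the government announced new measures on monday"]
    baseline = ["the government announced measures on the monday"]
    augmented = ["the government announced new measures on monday"]
    print("baseline BLEU: ", corpus_bleu(baseline, references))
    print("augmented BLEU:", corpus_bleu(augmented, references))
```

Under this definition, a gain of 2 BLEU points means the score of the system trained with the additional data is 2 higher on the 0-100 scale than the baseline score on the same test set. Published scores usually depend on the tokenization and reference setup, so numbers from this sketch are not directly comparable to those in the paper.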
