Differential Evolution-based Neural Network Training Incorporating a Centroid-based Strategy and Dynamic Opposition-based Learning


Jun 29, 2021

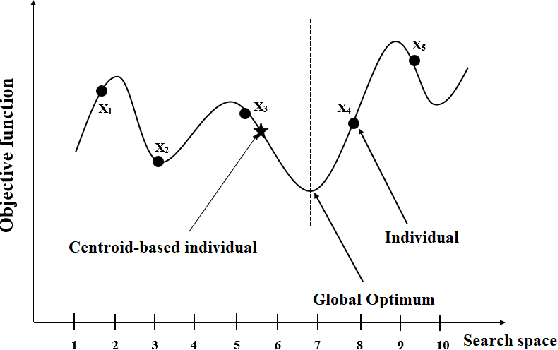

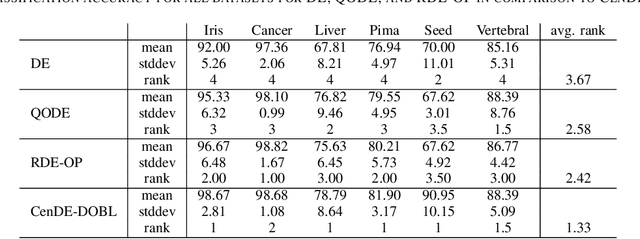

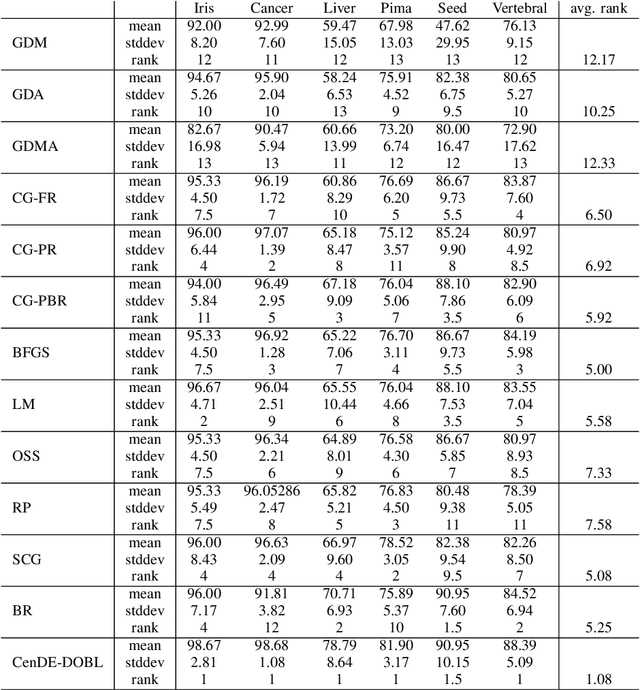

Training multi-layer neural networks (MLNNs), a challenging task, involves finding appropriate weights and biases. MLNN training is important since the performance of MLNNs depends mainly on these network parameters. However, conventional algorithms such as gradient-based methods, while extensively used for MLNN training, suffer from drawbacks such as a tendency to get stuck in local optima. Population-based metaheuristic algorithms can be used to overcome these problems. In this paper, we propose a novel MLNN training algorithm, CenDE-DOBL, that is based on differential evolution (DE), a centroid-based strategy (Cen-S), and dynamic opposition-based learning (DOBL). The Cen-S approach employs the centroid of the best individuals as a member of the population, while the other members are updated using standard crossover and mutation operators. This improves exploitation, since the new member is derived from the best individuals, while the employed DOBL strategy, which uses the opposite of an individual, enhances exploration. Our extensive experiments compare CenDE-DOBL to 26 conventional and population-based algorithms and confirm that it provides excellent MLNN training performance.
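The abstract describes the three ingredients only at a high level, so the following is a minimal pure-Python sketch under stated assumptions, not the authors' implementation. It trains a hypothetical 2-4-1 sigmoid MLP on XOR by encoding all weights and biases in one flat vector and minimising the mean squared error. DE/rand/1/bin stands in for the "standard crossover and mutation operators". The centroid of the `n_best` lowest-error individuals replaces the worst member when it is better; this replacement rule is our assumption. DOBL is approximated by an opposition step computed against the population's current per-dimension bounds and applied with probability `jump_rate`, which may differ from the paper's exact DOBL formula. All names (`cende_dobl_sketch`, `n_best`, `jump_rate`, the XOR data) are hypothetical.

```python
import math
import random

# Hypothetical toy task (XOR); not a dataset from the paper.
X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
Y = [0.0, 1.0, 1.0, 0.0]
N_IN, N_HID = 2, 4
# Flat vector layout: W1 (N_IN*N_HID, row-major), b1 (N_HID), w2 (N_HID), b2 (1).
DIM = N_IN * N_HID + 2 * N_HID + 1


def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clip to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))


def mse(vec):
    """Decode a flat parameter vector into the MLP and return its training MSE."""
    w1 = vec[:N_IN * N_HID]
    b1 = vec[N_IN * N_HID:N_IN * N_HID + N_HID]
    w2 = vec[N_IN * N_HID + N_HID:N_IN * N_HID + 2 * N_HID]
    b2 = vec[-1]
    err = 0.0
    for x, y in zip(X, Y):
        hidden = [sigmoid(sum(x[i] * w1[i * N_HID + h] for i in range(N_IN)) + b1[h])
                  for h in range(N_HID)]
        out = sigmoid(sum(a * w for a, w in zip(hidden, w2)) + b2)
        err += (out - y) ** 2
    return err / len(X)


def centroid_of_best(pop, fit, n_best):
    """Assumed Cen-S: per-dimension mean of the n_best lowest-error individuals."""
    best = sorted(range(len(pop)), key=lambda k: fit[k])[:n_best]
    return [sum(pop[k][d] for k in best) / n_best for d in range(len(pop[0]))]


def opposite(pop):
    """Assumed DOBL stand-in: opposite point w.r.t. the population's current bounds."""
    dim = len(pop[0])
    lo = [min(p[d] for p in pop) for d in range(dim)]
    hi = [max(p[d] for p in pop) for d in range(dim)]
    return [[lo[d] + hi[d] - p[d] for d in range(dim)] for p in pop]


def cende_dobl_sketch(pop_size=20, gens=100, F=0.5, CR=0.9, n_best=3,
                      jump_rate=0.3, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in range(pop_size)]
    fit = [mse(p) for p in pop]
    history = [min(fit)]
    for _ in range(gens):
        # DE/rand/1/bin with greedy one-to-one selection.
        for i in range(pop_size):
            r1, r2, r3 = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(DIM)  # guarantees at least one mutant gene
            trial = [pop[r1][d] + F * (pop[r2][d] - pop[r3][d])
                     if (rng.random() < CR or d == j_rand) else pop[i][d]
                     for d in range(DIM)]
            f_trial = mse(trial)
            if f_trial <= fit[i]:
                pop[i], fit[i] = trial, f_trial
        # Centroid of the best individuals replaces the worst member if it is better.
        c = centroid_of_best(pop, fit, n_best)
        f_c = mse(c)
        worst = max(range(pop_size), key=lambda k: fit[k])
        if f_c < fit[worst]:
            pop[worst], fit[worst] = c, f_c
        # Opposition step: keep the best pop_size of the population plus its opposites.
        if rng.random() < jump_rate:
            union = list(zip(fit, pop)) + [(mse(o), o) for o in opposite(pop)]
            union.sort(key=lambda t: t[0])
            fit = [f for f, _ in union[:pop_size]]
            pop = [p for _, p in union[:pop_size]]
        history.append(min(fit))
    best = min(range(pop_size), key=lambda k: fit[k])
    return pop[best], fit[best], history
```

For example, `cende_dobl_sketch(gens=100)` returns the best weight vector, its MSE, and the best MSE per generation. Because every step (greedy DE selection, conditional centroid replacement, and truncation of the union with the opposite population) keeps the current best, the recorded best error never increases across generations.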
