Designing generalisation evaluation function through human-machine dialogue

Apr 19, 2012

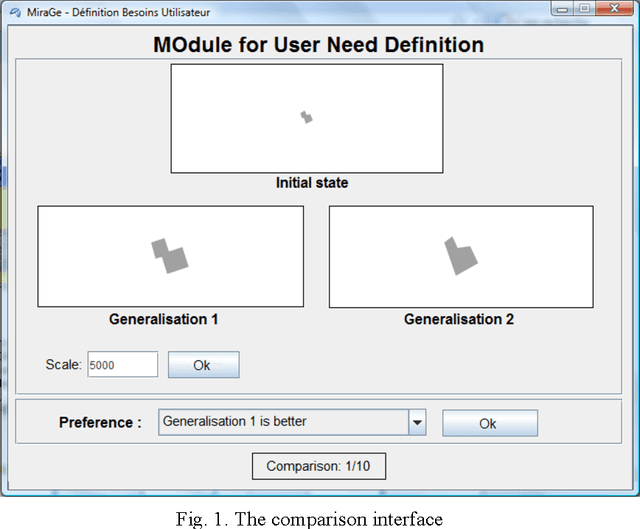

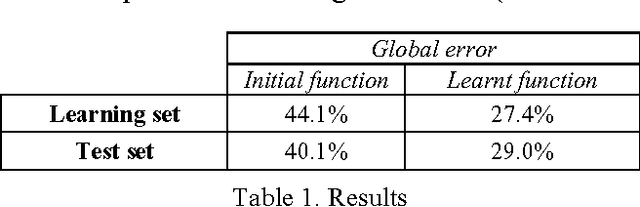

Automated generalisation has improved considerably over the last few years. However, the automatic evaluation of generalised data still deserves more study: many automated generalisation systems rely on an evaluation function to assess generalisation outcomes automatically. In this paper, we propose a new approach dedicated to the design of such a function. It allows an imperfectly defined evaluation function to be revised through a human-machine dialogue. The user expresses preferences to the system by comparing generalisation outcomes, and machine learning techniques are then used to improve the evaluation function. An experiment carried out on buildings shows that our approach significantly improves generalisation evaluation functions defined by users.
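To make the idea of revising an evaluation function from user comparisons more concrete, here is a minimal sketch. The abstract does not say which learning technique or function form the authors use, so everything below is an assumption for illustration: the evaluation function is taken to be a weighted sum of constraint-satisfaction measures, and the weights are adjusted with a simple perceptron-style update whenever the function disagrees with a user preference. The names `evaluate` and `revise_weights` are hypothetical.

```python
from typing import List, Sequence, Tuple

# Each generalisation outcome is described by a vector of measures in [0, 1]
# (e.g. one satisfaction score per constraint). This representation is an
# assumption; the source does not specify it.
Outcome = Sequence[float]


def evaluate(weights: Sequence[float], measures: Outcome) -> float:
    """Assumed evaluation function: weighted sum of measures (higher is better)."""
    return sum(w * m for w, m in zip(weights, measures))


def revise_weights(
    weights: Sequence[float],
    comparisons: List[Tuple[Outcome, Outcome]],
    learning_rate: float = 0.1,
    epochs: int = 50,
) -> List[float]:
    """Adjust weights so the function agrees with user preferences.

    Each comparison is a (preferred, rejected) pair supplied by the user.
    Perceptron-style rule (an illustrative choice, not the paper's method):
    when the current function does not rank `preferred` strictly above
    `rejected`, shift the weights towards the difference between the two.
    """
    w = list(weights)
    for _ in range(epochs):
        changed = False
        for preferred, rejected in comparisons:
            if evaluate(w, preferred) <= evaluate(w, rejected):
                for i in range(len(w)):
                    w[i] += learning_rate * (preferred[i] - rejected[i])
                changed = True
        if not changed:
            # Every comparison is now ranked as the user ranked it.
            break
    return w


# Hypothetical example: the initial function only looks at the first measure,
# while the user consistently prefers outcomes that score well on the second.
initial_weights = [1.0, 0.0]
user_comparisons = [
    ((0.2, 0.9), (0.8, 0.1)),
    ((0.3, 0.8), (0.9, 0.2)),
]
revised_weights = revise_weights(initial_weights, user_comparisons)
```

In this sketch the user never edits weights directly; they only state which of two outcomes they prefer, which mirrors the dialogue described above. A real system would likely need a richer function form and a way to handle inconsistent preferences.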
