Designing Explanations for Group Recommender Systems

Feb 24, 2021

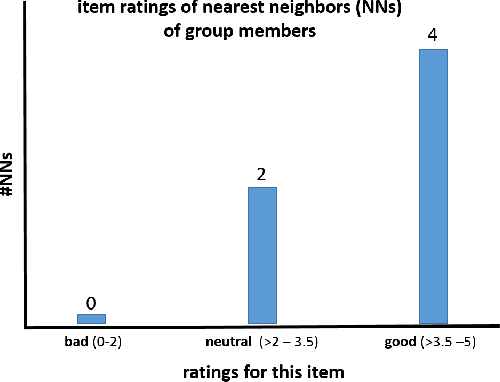

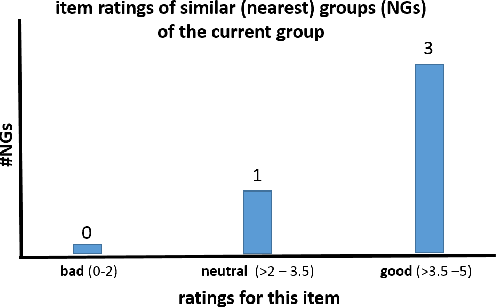

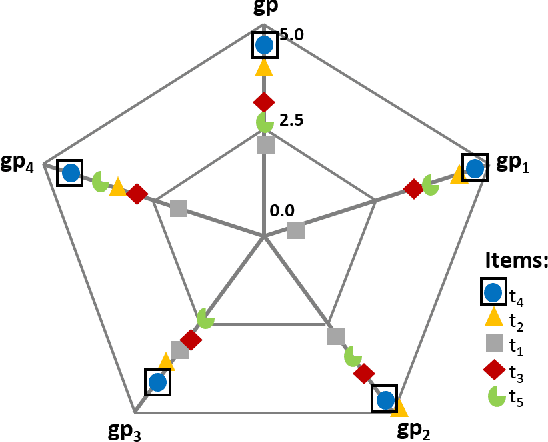

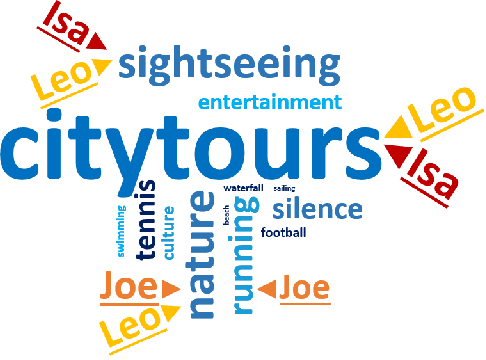

Explanations are used in recommender systems for various reasons. Users have to be supported in making (high-quality) decisions more quickly. Developers of recommender systems want to convince users to purchase specific items. Users should better understand how the recommender system works and why a specific item has been recommended. Users should also develop a more in-depth understanding of the item domain. Consequently, explanations are designed to achieve specific *goals* such as increasing the transparency of a recommendation or increasing a user's trust in the recommender system. In this paper, we provide an overview of existing research related to explanations in recommender systems, and specifically discuss aspects relevant to group recommendation scenarios. In this context, we present different ways of explaining and visualizing recommendations determined on the basis of preference aggregation strategies.
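
To make the idea of explaining an aggregation-based group recommendation more concrete, the following is a minimal sketch, not the paper's implementation. It assumes two commonly described aggregation strategies (average and least misery); the ratings, member names, item names, and the functions `average`, `least_misery`, `recommend`, and `explain` are all hypothetical and chosen only for illustration.

```python
from statistics import mean

# Hypothetical ratings (scale 1-5) given by three group members to three items.
ratings = {
    "hotel_a": {"ann": 5, "bob": 5, "cem": 2},
    "hotel_b": {"ann": 3, "bob": 4, "cem": 4},
    "hotel_c": {"ann": 2, "bob": 3, "cem": 3},
}


def average(scores):
    """Aggregate by the mean of all individual ratings."""
    return mean(scores.values())


def least_misery(scores):
    """Aggregate by the lowest individual rating."""
    return min(scores.values())


def recommend(ratings, strategy):
    """Return the item with the highest aggregated group score."""
    return max(ratings, key=lambda item: strategy(ratings[item]))


def explain(item, ratings, strategy):
    """Build a textual explanation that names the strategy used (assumed wording)."""
    score = strategy(ratings[item])
    if strategy is least_misery:
        # Identify the least satisfied member to make the explanation concrete.
        member = min(ratings[item], key=ratings[item].get)
        return (f"{item} is recommended because its lowest individual rating "
                f"({score}, from {member}) is higher than that of any other item.")
    return (f"{item} is recommended because it has the highest average "
            f"rating in the group ({score:.2f}).")


for strategy in (average, least_misery):
    item = recommend(ratings, strategy)
    print(explain(item, ratings, strategy))
```

With these assumed ratings, the two strategies select different items, which illustrates why an explanation for a group may need to state which aggregation strategy produced the recommendation.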
