Depth Separations in Neural Networks: What is Actually Being Separated?

Paper and Code

May 26, 2019

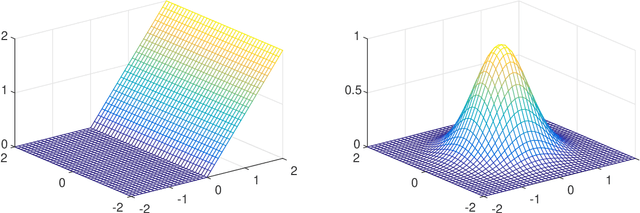

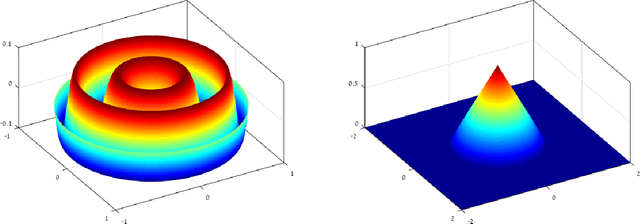

Existing depth separation results for constant-depth networks essentially show that certain radial functions in $\mathbb{R}^d$, which can be easily approximated with depth $3$ networks, cannot be approximated by depth $2$ networks, even up to constant accuracy, unless their size is exponential in $d$. However, the functions used to demonstrate this are rapidly oscillating, with a Lipschitz parameter scaling polynomially with the dimension $d$ (or equivalently, by scaling the function, the hardness result applies to $\mathcal{O}(1)$-Lipschitz functions only when the target accuracy $\epsilon$ is at most $\text{poly}(1/d)$). In this paper, we study whether such depth separations might still hold in the natural setting of $\mathcal{O}(1)$-Lipschitz radial functions, when $\epsilon$ does not scale with $d$. Perhaps surprisingly, we show that the answer is negative: In contrast to the intuition suggested by previous work, it \emph{is} possible to approximate $\mathcal{O}(1)$-Lipschitz radial functions with depth $2$, size $\text{poly}(d)$ networks, for every constant $\epsilon$. We complement it by showing that approximating such functions is also possible with depth $2$, size $\text{poly}(1/\epsilon)$ networks, for every constant $d$. Finally, we show that it is not possible to have polynomial dependence in both $d,1/\epsilon$ simultaneously. Overall, our results indicate that in order to show depth separations for expressing $\mathcal{O}(1)$-Lipschitz functions with constant accuracy -- if at all possible -- one would need fundamentally different techniques than existing ones in the literature.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge