Deep-Learning-Based Device Fingerprinting for Increased LoRa-IoT Security: Sensitivity to Network Deployment Changes

Aug 31, 2022

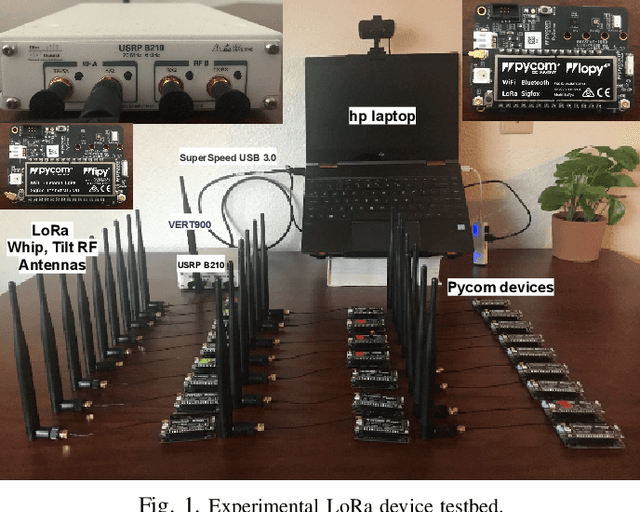

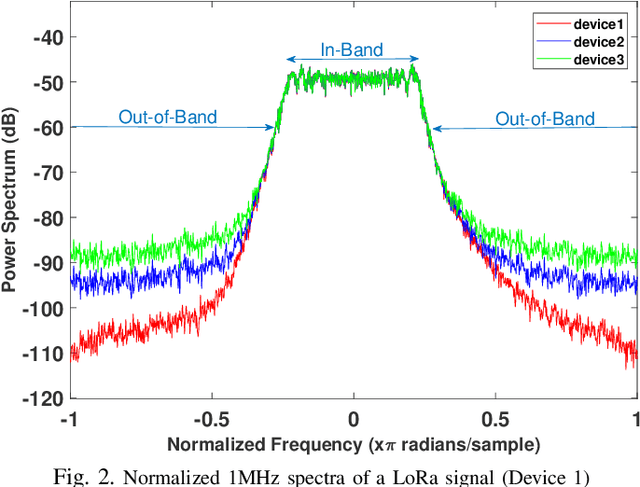

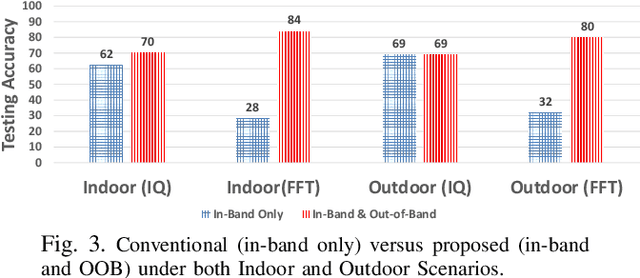

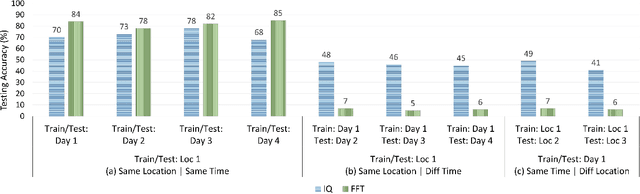

Deep-learning-based device fingerprinting has recently been recognized as a key enabler for automated network access authentication. What distinguishes it from conventional cryptographic solutions is its robustness to impersonation attacks, which stems from the inherent difficulty of replicating physical hardware features. Although device fingerprinting has shown promising performance, its sensitivity to changes in the network operating environment remains a major limitation. This paper presents an experimental framework that aims to study and overcome the sensitivity of LoRa-enabled device fingerprinting to such changes. We begin by describing the RF datasets we collected using our LoRa-enabled wireless device testbed. We then propose a new fingerprinting technique that exploits out-of-band distortion information caused by hardware impairments to increase fingerprinting accuracy. Finally, we experimentally study and analyze the sensitivity of LoRa RF fingerprinting to various network setting changes. Our results show that fingerprinting does relatively well when the learning models are trained and tested under the same settings. When trained and tested under different settings with IQ data as input, however, these models exhibit moderate sensitivity to channel condition changes and severe sensitivity to protocol configuration and receiver hardware changes. When FFT data is used as input, they perform poorly under any change.
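
The abstract contrasts two input representations, raw IQ data and FFT data. As a rough illustration of that distinction only, the sketch below builds both from the same window of complex baseband samples. It is not the authors' preprocessing: the function names, the 16-sample window, and the synthetic tone are all hypothetical, and a naive DFT stands in for whatever FFT routine a real pipeline would use.

```python
import cmath
import math


def iq_representation(samples):
    """Split complex samples into two real-valued rows: in-phase (I) and quadrature (Q)."""
    return [[s.real for s in samples], [s.imag for s in samples]]


def fft_representation(samples):
    """Naive O(N^2) DFT of the same window, returned as real and imaginary rows.

    A real pipeline would normally call an FFT library; the plain DFT keeps
    this sketch dependency-free.
    """
    n = len(samples)
    spectrum = [
        sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]
    return [[c.real for c in spectrum], [c.imag for c in spectrum]]


# Hypothetical stand-in for a captured window: one complex tone at DFT bin 3.
N = 16
window = [cmath.exp(2j * math.pi * 3 * t / N) for t in range(N)]

iq = iq_representation(window)    # time-domain input: 2 rows of N values
fft = fft_representation(window)  # frequency-domain input: 2 rows of N values

# The tone's energy concentrates in a single frequency bin.
peak_bin = max(range(N), key=lambda k: math.hypot(fft[0][k], fft[1][k]))
print(peak_bin)
```

Both representations carry the same information about the window and have the same shape, so either could be fed to the same kind of model. Which one generalizes better across deployment changes is the empirical question the paper examines.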