Deep Incremental Model Based Reinforcement Learning: A One-Step Lookback Approach for Continuous Robotics Control


Mar 03, 2024

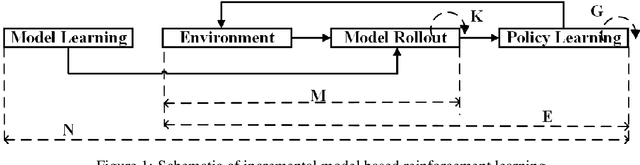

Model-based reinforcement learning (MBRL) uses an available or learned model to improve the data efficiency of reinforcement learning. This work proposes a one-step lookback approach that jointly learns a latent-space model and a policy to achieve sample-efficient continuous robotic control, using control-theoretic knowledge to reduce the difficulty of model learning. Specifically, one-step backward data is used to construct an incremental evolution model, an alternative structured representation of the robot evolution model in the MBRL field. The incremental evolution model predicts robot movement accurately with low sample complexity, because its formulation reduces model learning to a parametric matrix learning problem, which is especially favourable for high-dimensional robotics applications. Imagined data generated by the learned incremental evolution model supplements the training data to improve sample efficiency. Comparative numerical simulations on benchmark continuous robotics control problems validate the efficiency of the proposed one-step lookback approach.
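
To make the idea of "model learning as a parametric matrix learning problem" concrete, here is a minimal sketch in plain Python. The abstract does not give the model's exact form, so the code assumes a common incremental-dynamics structure: the next state increment equals the previous increment plus a gain applied to the change in control, `dx[k+1] = dx[k] + g * du[k]`. That structure, the restriction to a single scalar control input, and the names `fit_increment_gain` and `predict_next` are all illustrative assumptions and not the paper's actual formulation, which operates in a learned latent space.

```python
import random

def predict_next(x, x_prev, u, u_prev, g):
    """One-step prediction under the ASSUMED incremental model:
    x[k+1] = x[k] + (x[k] - x[k-1]) + g * (u[k] - u[k-1])."""
    return [xi + (xi - xpi) + gi * (u - u_prev)
            for xi, xpi, gi in zip(x, x_prev, g)]

def fit_increment_gain(states, controls):
    """Least-squares estimate of the gain vector g (hypothetical helper).
    states: list of state vectors x[0..T]; controls: list of scalars u[0..T-1]."""
    n = len(states[0])
    num = [0.0] * n
    den = 0.0
    for k in range(1, len(controls)):
        du = controls[k] - controls[k - 1]          # change in control
        for i in range(n):
            dx_next = states[k + 1][i] - states[k][i]
            dx_prev = states[k][i] - states[k - 1][i]  # one-step lookback data
            num[i] += (dx_next - dx_prev) * du
        den += du * du
    return [v / den for v in num]

# Synthetic data generated by the assumed model itself (not a robot simulator).
random.seed(0)
true_g = [0.5, -0.2]
controls = [random.uniform(-1.0, 1.0) for _ in range(50)]
states = [[0.0, 0.0], [0.1, 0.0]]
for k in range(1, len(controls)):
    states.append(predict_next(states[k], states[k - 1],
                               controls[k], controls[k - 1], true_g))

g_hat = fit_increment_gain(states, controls)
print("estimated gain:", g_hat)
```

Under this assumption the only unknown is the gain, so the estimate comes from a closed-form least-squares fit over consecutive transitions, with no need to fit a full nonlinear dynamics network. With several control inputs the gain becomes a matrix and the same fit becomes an ordinary linear least-squares problem.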
