Deep Algorithmic Question Answering: Towards a Compositionally Hybrid AI for Algorithmic Reasoning

Sep 29, 2021

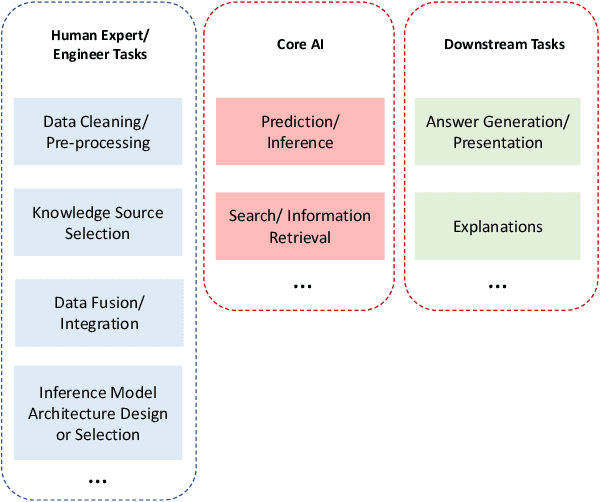

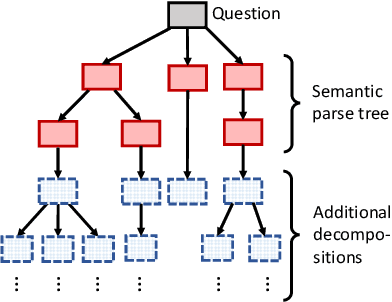

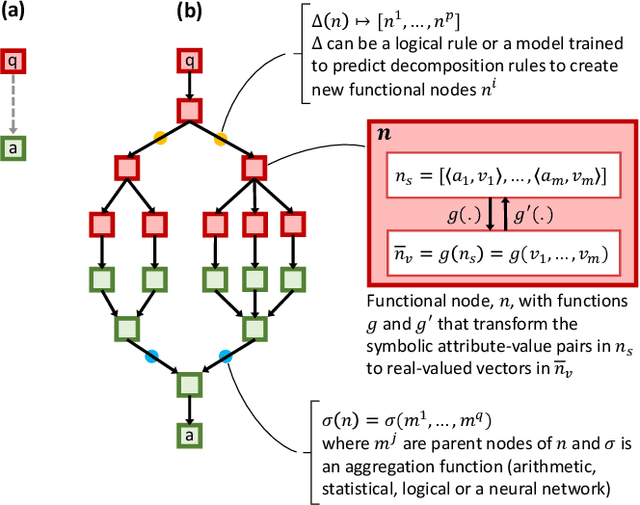

An important aspect of artificial intelligence (AI) is the ability to reason in a step-by-step "algorithmic" manner that can be inspected and verified for correctness. This is especially important in the domain of question answering (QA). We argue that the challenge of algorithmic reasoning in QA can be effectively tackled with a "systems" approach to AI, which features a hybrid use of symbolic and sub-symbolic methods, including deep neural networks. Additionally, we argue that while neural network models with end-to-end training pipelines perform well in narrow applications such as image classification and language modelling, they cannot, on their own, successfully perform algorithmic reasoning, especially if the task spans multiple domains. We discuss a few notable exceptions and point out how they are still limited when the QA problem is widened to include other intelligence-requiring tasks. Deep learning, and machine learning in general, nevertheless play important roles as components in the reasoning process. We propose an approach to algorithmic reasoning for QA, Deep Algorithmic Question Answering (DAQA), based on three desirable properties that such an AI system should possess: interpretability, generalizability, and robustness. We conclude that these properties are best achieved with a combination of hybrid and compositional AI.
