Dealing with the database variability problem in learning from medical data: an ensemble-based approach using convolutional neural networks and a case of study applied to automatic sleep scoring


Jun 16, 2019

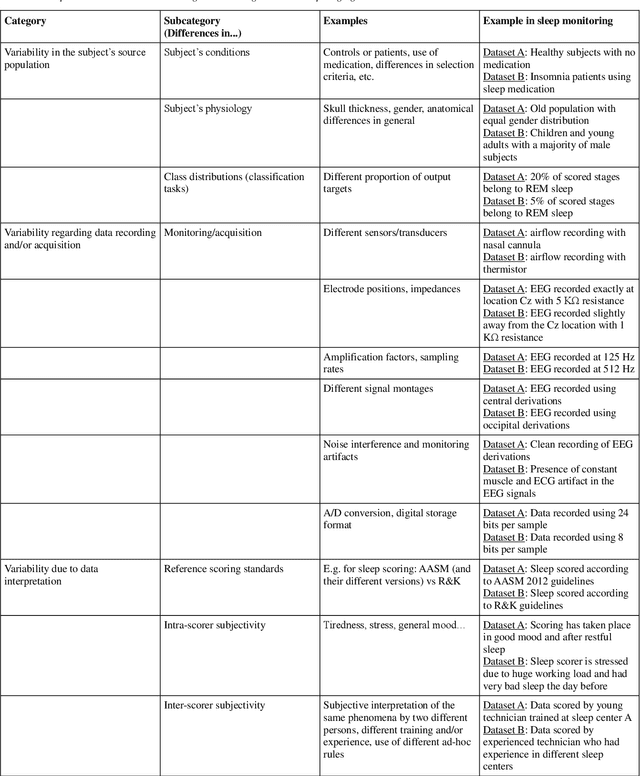

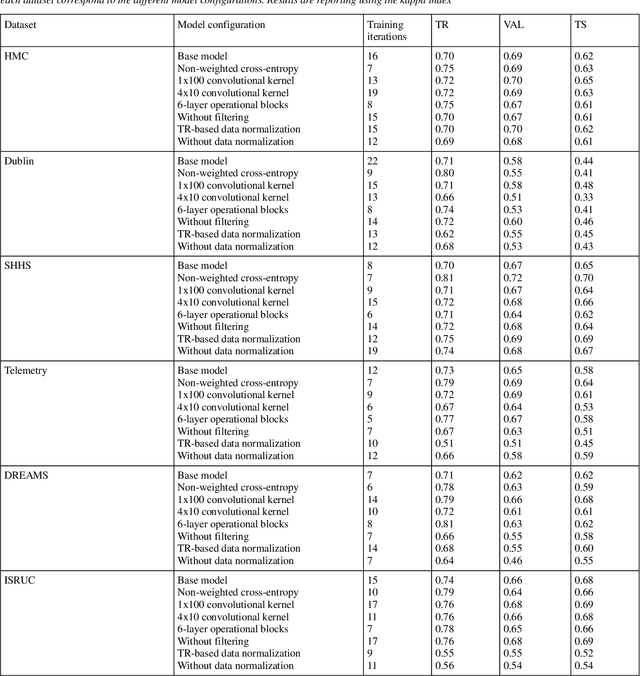

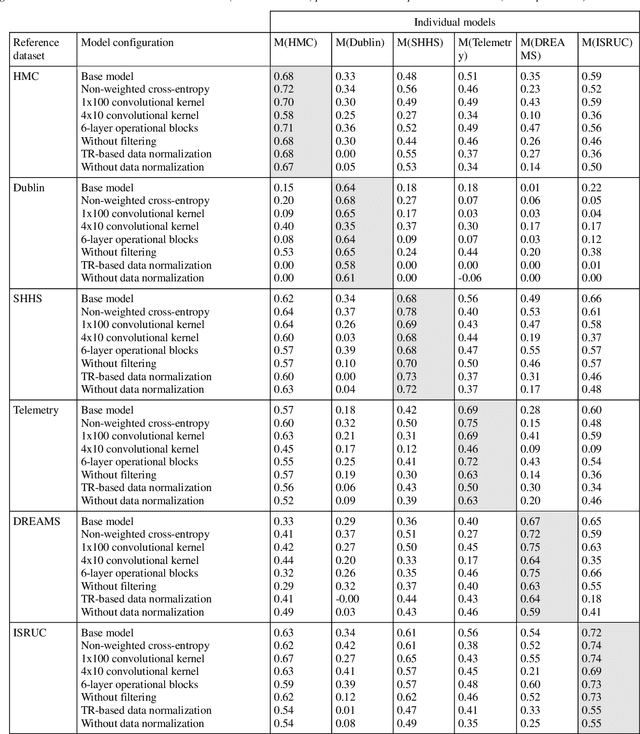

In this work we examine the problems associated with developing machine learning models that generalize robustly in common-task, multiple-database scenarios. We refer to this as the "database variability problem" and focus on a specific medical domain (sleep staging in sleep medicine) to show that a model's estimated local generalization capabilities do not translate trivially to independent external databases. We analyze some of the scalability problems that arise when data from multiple databases are used to train a single learning model. We then introduce a novel approach based on an ensemble of local models and show its advantages in terms of inter-database generalization performance and data scalability. We further analyze different model configurations and data pre-processing techniques to evaluate their effects on overall generalization performance. For this purpose we carry out experiments involving several sleep databases, evaluating different machine learning models based on convolutional neural networks.
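
To make the idea of an ensemble of local models concrete, the following is a minimal sketch and not the authors' implementation. It assumes that each local model is trained on a single database and that the ensemble combines their per-epoch predictions by majority vote; the abstract does not state the combination rule, so the voting scheme is an assumption. The names `LocalModel`, `make_threshold_model`, and `ensemble_predict` are hypothetical, and simple threshold classifiers stand in for the convolutional neural networks.

```python
from collections import Counter
from typing import Callable, List, Sequence

# Hypothetical type: a "local model" maps the features of one epoch to a label.
# In the paper each local model would be a CNN trained on one database.
LocalModel = Callable[[Sequence[float]], str]


def make_threshold_model(threshold: float) -> LocalModel:
    """Toy stand-in for a model trained on a single database (assumption)."""
    def model(epoch: Sequence[float]) -> str:
        mean = sum(epoch) / len(epoch)
        return "Wake" if mean > threshold else "Sleep"
    return model


def ensemble_predict(models: List[LocalModel], epoch: Sequence[float]) -> str:
    """Combine local predictions by majority vote (assumed combination rule).

    Ties are resolved in favor of the label that was voted first.
    """
    votes = [model(epoch) for model in models]
    return Counter(votes).most_common(1)[0][0]


# Three toy "local models", one per hypothetical source database.
models = [make_threshold_model(t) for t in (0.2, 0.5, 0.8)]
print(ensemble_predict(models, [0.6, 0.7, 0.5]))
```

Under this assumed scheme, adding a new database only requires training one more local model and appending it to the list, which is one way to read the data scalability advantage the abstract mentions.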
