Cross-Modal Distillation in Industrial Anomaly Detection: Exploring Efficient Multi-Modal IAD

Paper and Code

May 22, 2024

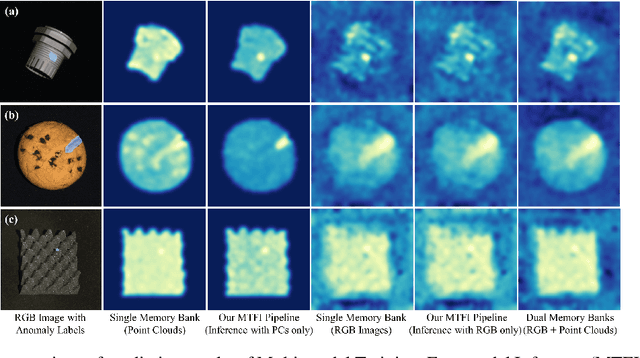

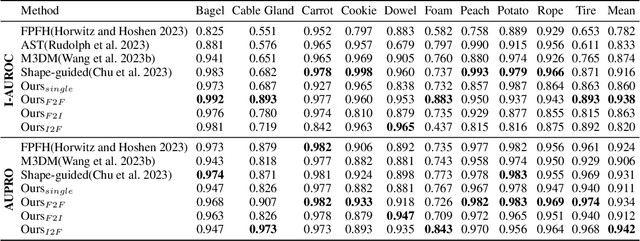

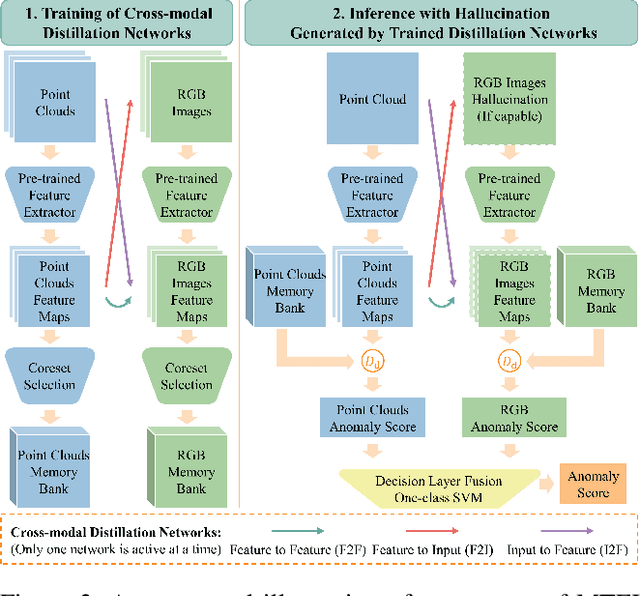

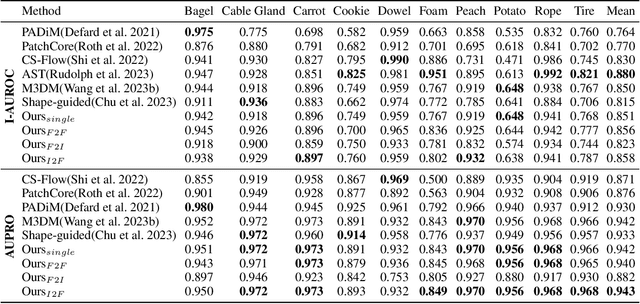

Recent studies of multi-modal Industrial Anomaly Detection (IAD) based on point clouds and RGB images indicate the importance of exploiting the redundancy and complementarity among modalities for accurate classification and segmentation. However, achieving multi-modal IAD on practical production lines remains a work in progress: it requires weighing the costs and benefits of introducing new modalities while ensuring compatibility with current processes. Combining fast in-line inspection with high-resolution, time-consuming near-line characterization techniques to enhance detection accuracy fits well into existing quality control processes, but only a portion of the samples can be tested with expensive near-line methods. Thus, the model must be able to leverage multi-modal training and handle incomplete modalities during inference. One solution is to generate cross-modal hallucinations that transfer knowledge among modalities, compensating for the missing modality. In this paper, we propose CMDIAD, a Cross-Modal Distillation framework for IAD, to demonstrate the feasibility of a Multi-modal Training, Few-modal Inference pipeline. Moreover, we investigate the reasons behind the asymmetric performance improvement when using point clouds or RGB images as the main modality for inference. This lays the foundation for our future multi-modal dataset construction for efficient IAD in manufacturing scenarios.
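
The idea of multi-modal training followed by few-modal inference can be illustrated with a toy sketch. This is not the CMDIAD architecture: it assumes that each sample is already summarized by a small feature vector per modality, and it swaps in a plain linear map fitted by gradient descent on a mean squared error as the "hallucination" network. The function names `fit_hallucination_map`, `hallucinate`, and `fuse` are hypothetical and chosen only for illustration.

```python
def fit_hallucination_map(rgb_feats, pc_feats, lr=0.2, epochs=300):
    """Training stage (both modalities available).

    Fits a linear map W so that W applied to an RGB feature approximates
    the paired point-cloud feature, by minimizing the mean squared error
    with batch gradient descent. A linear map is an assumption of this toy.
    """
    d_in, d_out = len(rgb_feats[0]), len(pc_feats[0])
    n = len(rgb_feats)
    W = [[0.0] * d_in for _ in range(d_out)]
    for _ in range(epochs):
        grad = [[0.0] * d_in for _ in range(d_out)]
        for x, y in zip(rgb_feats, pc_feats):
            pred = [sum(w * xi for w, xi in zip(row, x)) for row in W]
            for j in range(d_out):
                err = pred[j] - y[j]
                for i in range(d_in):
                    grad[j][i] += 2.0 * err * x[i] / n
        for j in range(d_out):
            for i in range(d_in):
                W[j][i] -= lr * grad[j][i]
    return W


def hallucinate(W, rgb_feat):
    """Inference stage: predict the missing point-cloud feature from RGB only."""
    return [sum(w * xi for w, xi in zip(row, rgb_feat)) for row in W]


def fuse(rgb_feat, pc_feat):
    """Concatenate the real and the (possibly hallucinated) features."""
    return list(rgb_feat) + list(pc_feat)
```

At inference time only the RGB feature is measured; `fuse(rgb, hallucinate(W, rgb))` then yields a vector with the same layout as a true multi-modal feature, so a downstream anomaly scorer trained on fused features could be reused unchanged.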
