Cross-Domain Separable Translation Network for Multimodal Image Change Detection

Jul 23, 2024

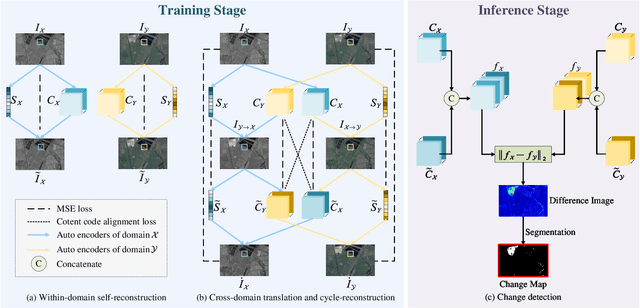

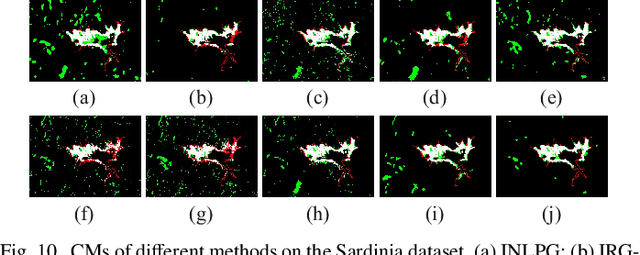

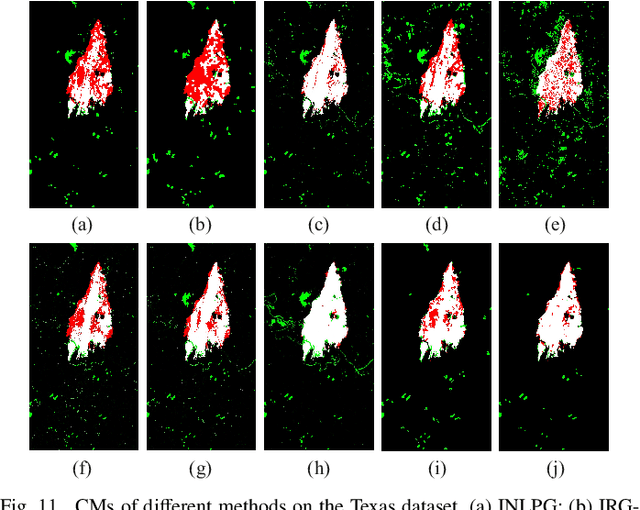

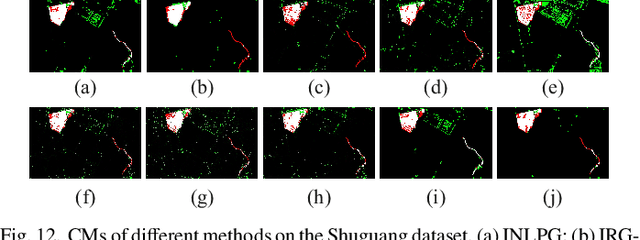

In the remote sensing community, multimodal change detection (MCD) is particularly important because it can track changes across different imaging conditions and sensor types, which makes it applicable to a wide range of real-world scenarios. This paper addresses the main challenge of MCD: images from different sensors differ in style and in the statistical characteristics of geospatial objects, so they cannot be compared directly. Traditional MCD methods often struggle with these variations, leading to inaccurate and unreliable results. To overcome these limitations, a novel unsupervised cross-domain separable translation network (CSTN) is proposed, which integrates within-domain self-reconstruction with a cross-domain image translation and cycle-reconstruction workflow under change detection constraints. The model is optimized on the image translation and MCD tasks simultaneously, which ensures that the features learned from multimodal images are comparable. Specifically, a simple yet efficient dual-branch convolutional architecture is employed to separate the content and style information of multimodal images. This process generates a style-independent, content-comparable feature space, which is crucial for accurate change detection even in the presence of significant sensor variations. Extensive experimental results demonstrate the effectiveness of the proposed method, showing remarkable improvements over state-of-the-art approaches in terms of accuracy and efficacy for MCD. The implementation of our method will be publicly available at https://github.com/OMEGA-RS/CSTN.
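The workflow described in the abstract (content/style separation, self-reconstruction, cross-domain translation, cycle reconstruction, and comparison in content space) can be illustrated with a toy sketch. This is not the authors' network: the learned dual-branch convolutional encoders are replaced here by simple global statistics, where "style" is assumed to be the mean and standard deviation of an image and "content" is the normalized pixel values. All function names (`encode`, `decode`, `change_map`) and the toy data are hypothetical.

```python
from statistics import mean, pstdev

def encode(image):
    """Toy stand-in for the two branches: returns (content, style).

    Assumption: style = (mean, std) of the image, content = normalized pixels.
    The paper learns both with convolutional branches instead.
    """
    mu = mean(image)
    sigma = pstdev(image) or 1.0  # fall back to 1.0 for a constant image
    content = [(p - mu) / sigma for p in image]
    return content, (mu, sigma)

def decode(content, style):
    """Toy decoder: re-apply a style (mean, std) to a content code."""
    mu, sigma = style
    return [c * sigma + mu for c in content]

def change_map(content_a, content_b):
    """Per-pixel absolute difference in the style-independent content space."""
    return [abs(a - b) for a, b in zip(content_a, content_b)]

# Toy data: one scene seen by two "sensors" with different gain and offset.
scene_before = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
scene_after = list(scene_before)
scene_after[3] = 5.0  # a single changed pixel

image_x = [10.0 + 2.0 * s for s in scene_before]        # sensor X, time 1
image_y_same = [100.0 + 0.5 * s for s in scene_before]  # sensor Y, no change
image_y_changed = [100.0 + 0.5 * s for s in scene_after]  # sensor Y, time 2

content_x, style_x = encode(image_x)
content_y_same, style_y = encode(image_y_same)
content_y_changed, _ = encode(image_y_changed)

# Within-domain self-reconstruction: X -> (content, style) -> X
self_recon = decode(content_x, style_x)

# Cross-domain translation: content of X rendered in the style of Y
translated = decode(content_x, style_y)

# Cycle reconstruction: translate back to the style of X
content_t, _ = encode(translated)
cycle_recon = decode(content_t, style_x)

# Comparison in content space
map_same = change_map(content_x, content_y_same)
map_changed = change_map(content_x, content_y_changed)
changed_pixel = map_changed.index(max(map_changed))
```

In this toy setting, the content codes of the two unchanged acquisitions coincide despite the different sensor gain and offset, so their change map is close to zero, while the altered pixel gives the largest response in `map_changed`. Global statistics are only a convenient illustration of why a style-independent space makes images comparable; they are far weaker than learned features.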
