Conformal Methods for Quantifying Uncertainty in Spatiotemporal Data: A Survey


Sep 08, 2022

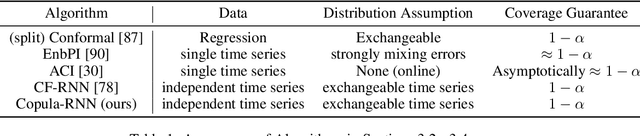

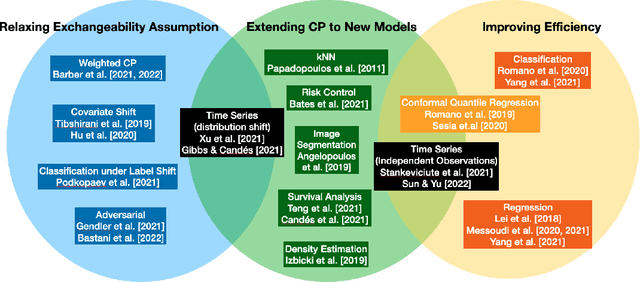

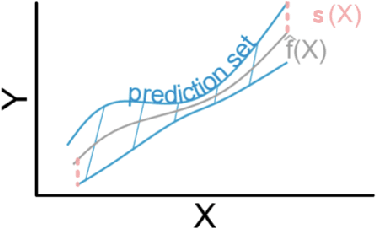

Machine learning methods are increasingly used in high-risk settings such as healthcare, transportation, and finance. In these settings, it is important that a model produce calibrated uncertainty estimates that reflect its own confidence and help avoid failures. In this paper we survey recent work on uncertainty quantification (UQ) for deep learning, focusing on the distribution-free conformal prediction method because of its mathematical properties and wide applicability. We cover the theoretical guarantees of conformal methods, introduce techniques that improve calibration and efficiency for UQ on spatiotemporal data, and discuss the role of UQ in safe decision making.
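
As a concrete illustration of the distribution-free idea, the following is a minimal sketch of split conformal prediction for regression. It is a standard textbook construction, not necessarily the formulation used in the survey. The names `conformal_quantile`, `split_conformal_interval`, and the toy `predict` model are hypothetical and introduced only for this example. Under the assumption that calibration and test points are exchangeable, the resulting interval covers the true label with probability at least 1 - alpha; time-ordered or spatially correlated data may violate that assumption.

```python
import math
import random


def conformal_quantile(scores, alpha):
    """Return the ceil((n + 1) * (1 - alpha))-th smallest calibration score."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: the interval is unbounded.
        return math.inf
    return sorted(scores)[k - 1]


def split_conformal_interval(predict, calib_x, calib_y, x_new, alpha=0.1):
    """Build a prediction interval around predict(x_new).

    Nonconformity score (an assumption of this sketch): absolute residual
    on a held-out calibration set that was not used to fit the model.
    """
    scores = [abs(y - predict(x)) for x, y in zip(calib_x, calib_y)]
    q = conformal_quantile(scores, alpha)
    center = predict(x_new)
    return center - q, center + q


# Toy usage with a hypothetical pre-trained model and synthetic calibration data.
rng = random.Random(0)


def predict(x):
    return 2.0 * x


calib_x = [rng.uniform(0.0, 1.0) for _ in range(500)]
calib_y = [2.0 * x + rng.gauss(0.0, 0.1) for x in calib_x]
lower, upper = split_conformal_interval(predict, calib_x, calib_y, 0.5, alpha=0.1)
```

The interval width here is the same for every input, because the score ignores how uncertainty varies across inputs; scores that adapt to the input are one route to narrower (more efficient) intervals.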
