Computationally Efficient Feature Significance and Importance for Machine Learning Models

May 23, 2019

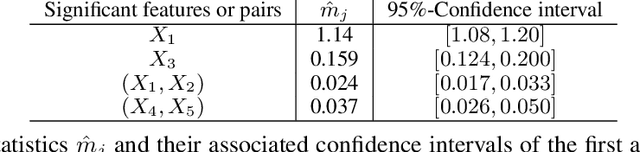

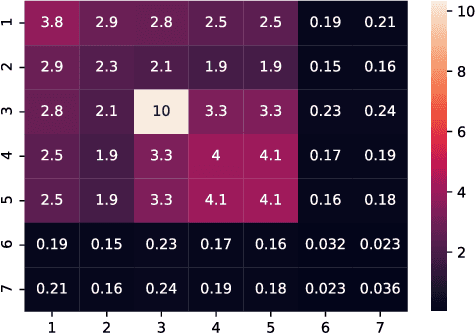

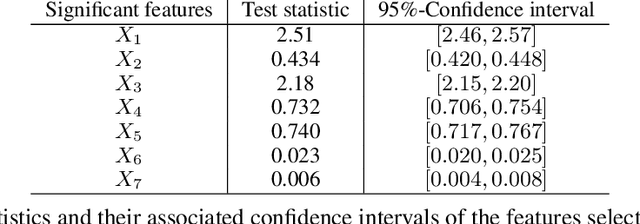

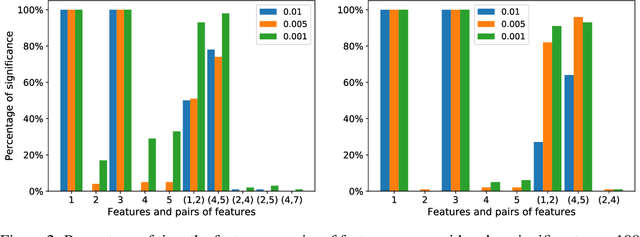

We develop a simple and computationally efficient significance test for the features of a machine learning model. Our forward-selection approach applies to any model specification, learning task and variable type. The test is non-asymptotic, straightforward to implement, and does not require model refitting. It identifies the statistically significant features as well as feature interactions of any order in a hierarchical manner, and generates a model-free notion of feature importance. Numerical results illustrate its performance.
