Class-Specific Variational Auto-Encoder for Content-Based Image Retrieval


Apr 23, 2023

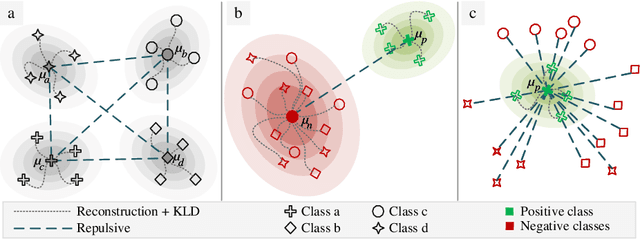

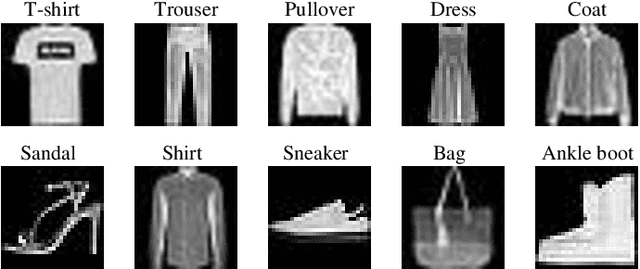

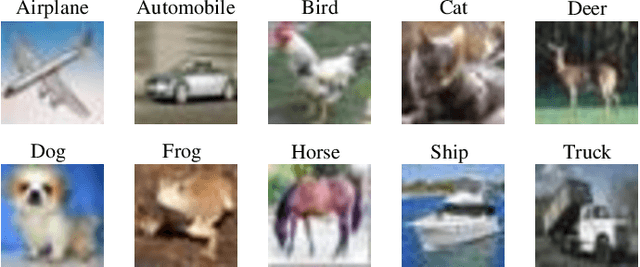

Discriminative representations obtained by supervised deep learning methods have shown promising results on diverse Content-Based Image Retrieval (CBIR) problems. However, existing methods that exploit labels during training try to discriminate between all available classes, which is not ideal when the retrieval problem focuses on a single class of interest. In this paper, we propose a regularized loss for Variational Auto-Encoders (VAEs) that forces the model to focus on a given class of interest. As a result, the model learns to discriminate the data belonging to the class of interest from all other data, making the learnt latent space of the VAE suitable for class-specific retrieval tasks. The proposed Class-Specific Variational Auto-Encoder (CS-VAE) is evaluated on three public datasets and one custom dataset, and its performance is compared with that of three related VAE-based methods. Experimental results show that the proposed method outperforms the competing methods in both in-domain and out-of-domain retrieval problems.
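The abstract does not state the form of the regularized loss, so the following is only a minimal sketch of the general idea, not the CS-VAE loss itself. It combines the standard VAE terms (reconstruction error and KL divergence to a unit Gaussian) with a hypothetical regularizer that pulls latent means of the class of interest toward the origin and pushes all other samples at least `margin` away. The function names, the `margin` and `weight` parameters, and the regularizer are all assumptions made for illustration.

```python
import numpy as np

def kl_to_standard_normal(mu, logvar):
    # Per-sample KL( N(mu, diag(exp(logvar))) || N(0, I) ).
    return 0.5 * np.sum(np.exp(logvar) + mu ** 2 - 1.0 - logvar, axis=1)

def class_specific_vae_loss(x, x_recon, mu, logvar, is_target,
                            margin=3.0, weight=1.0):
    """Illustrative loss only; the regularizer below is an assumption."""
    # Standard VAE terms: squared reconstruction error plus KL divergence.
    recon = np.sum((x - x_recon) ** 2, axis=1)
    kl = kl_to_standard_normal(mu, logvar)
    # Hypothetical class-specific term: samples of the class of interest are
    # pulled toward the origin of the latent space, all other samples are
    # pushed at least `margin` away from it.
    dist = np.linalg.norm(mu, axis=1)
    reg = np.where(is_target, dist ** 2, np.maximum(0.0, margin - dist) ** 2)
    return float(np.mean(recon + kl + weight * reg))

def retrieve(query_mu, gallery_mu, k=5):
    # Rank gallery items by Euclidean distance to the query in latent space
    # and return the indices of the k nearest ones.
    d = np.linalg.norm(gallery_mu - query_mu, axis=1)
    return np.argsort(d)[:k]
```

Under these assumptions, retrieval for the class of interest reduces to a nearest-neighbour search over encoder means, for example `retrieve(query_mu, gallery_mu, k=10)`, where `query_mu` and `gallery_mu` would come from a trained encoder.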
