Breccia and basalt classification of thin sections of Apollo rocks with deep learning


Oct 28, 2024

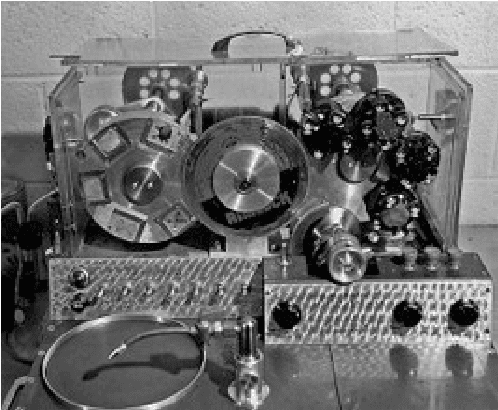

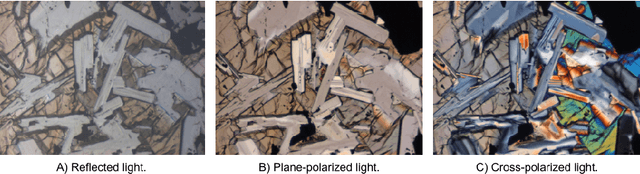

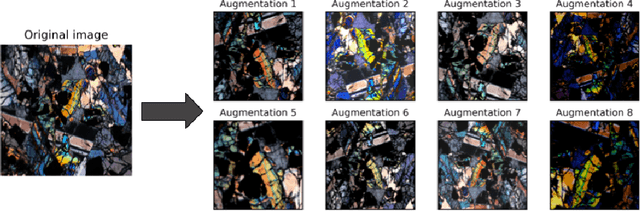

Human exploration of the Moon is expected to resume in the next decade, for the first time since the Apollo programme. One of the major objectives of returning to the Moon is to continue retrieving geological samples, with a focus on collecting high-quality specimens to maximize scientific return. Tools that help astronauts make informed decisions during sample collection can increase the scientific value of future lunar missions. A lunar rock classifier is one such tool: it could give astronauts the information needed to analyze lunar rock samples and so improve in-situ assessment of a sample's value. Towards demonstrating the value of such a tool, we introduce a framework for classifying rock types in thin sections of lunar rocks. We leverage the large collection of petrographic thin-section images from the Apollo missions, captured under plane-polarized light (PPL), cross-polarized light (XPL), and reflected light at varying magnifications. Machine learning methods, including contrastive learning, are applied to these images to extract meaningful features. The contrastive learning approach fine-tunes a pre-trained Inception-ResNet-v2 network with the SimCLR loss function, so that the network extracts discriminative features from the thin-section images of Apollo rocks. A simple binary classifier, trained using transfer learning from the fine-tuned Inception-ResNet-v2, achieves 98.44\% ($\pm$1.47) accuracy in separating breccias from basalts.
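To make the contrastive objective concrete, the following is a minimal sketch of the NT-Xent loss used by SimCLR, written in plain Python for readability. It is not the authors' implementation: the function names, the temperature value of 0.5, and the toy two-dimensional embeddings are assumptions for illustration. In practice the embeddings would come from the Inception-ResNet-v2 encoder applied to two augmented views of each thin-section image.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def nt_xent_loss(z_a, z_b, temperature=0.5):
    """NT-Xent loss over N positive pairs (z_a[i], z_b[i]).

    z_a and z_b are lists of embedding vectors for two augmented views
    of the same N images. The temperature is an assumed value.
    """
    n = len(z_a)
    z = [l2_normalize(v) for v in list(z_a) + list(z_b)]
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)  # index of the other view of the same image
        sims = [sum(p * q for p, q in zip(z[i], z[k])) / temperature
                for k in range(2 * n)]
        # Denominator runs over every embedding except the anchor itself.
        others = [sims[k] for k in range(2 * n) if k != i]
        m = max(others)  # subtract the max for a stable log-sum-exp
        log_denom = m + math.log(sum(math.exp(s - m) for s in others))
        total += log_denom - sims[pos]
    return total / (2 * n)

# Toy check: matching views should give a lower loss than swapped views.
views_a = [[1.0, 0.0], [0.0, 1.0]]
aligned = nt_xent_loss(views_a, [[1.0, 0.0], [0.0, 1.0]])
mismatched = nt_xent_loss(views_a, [[0.0, 1.0], [1.0, 0.0]])
print(aligned < mismatched)
```

Minimizing this loss pulls the two views of the same image together and pushes views of different images apart, which is what allows a simple classifier on top of the encoder to separate rock types.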
