Better Image Segmentation by Exploiting Dense Semantic Predictions


Jun 05, 2016

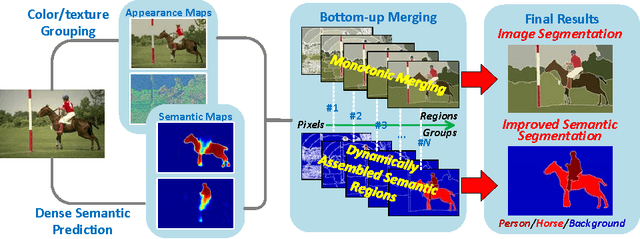

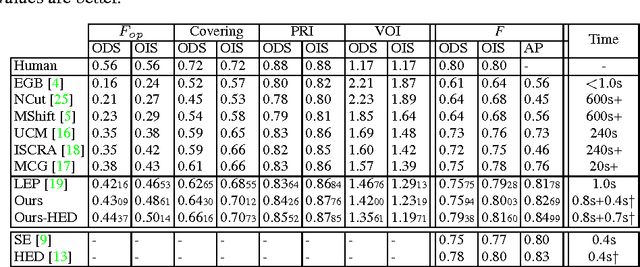

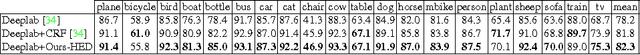

It is well accepted that image segmentation can benefit from multilevel cues. This paper focuses on using FCNN-based dense semantic predictions in bottom-up image segmentation, arguing that semantic cues should be taken into account from the very beginning. This lets us avoid, as far as possible, merging regions that are similar in appearance but belong to distinct semantic categories, which addresses the semantic inefficiency problem. We also propose a straightforward way to use contour cues to suppress noise in the multilevel cues, thereby improving segmentation robustness. Evaluation on BSDS500 shows that we obtain competitive region and boundary performance. Furthermore, since every individual region can be assigned an appropriate semantic label during the computation, we can also extract adjusted semantic segmentations. Experiments on Pascal VOC 2012 show that these improve on the original semantic segmentations derived directly from the dense predictions.
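To make the core idea concrete, here is a minimal sketch of bottom-up region merging in which a semantic term is added to the merge cost from the very first step. This is not the paper's algorithm. The function names `merge_cost` and `greedy_merge`, the Euclidean appearance distance, the total-variation distance between per-region class-probability vectors (standing in for averaged FCNN predictions), the weight `lam`, and the fixed `threshold` are all illustrative assumptions. The contour-based noise suppression described above is not modeled.

```python
import numpy as np


def merge_cost(app_a, app_b, sem_a, sem_b, lam=1.0):
    """Hypothetical merge cost: appearance distance plus a weighted semantic distance."""
    # Appearance term: Euclidean distance between mean appearance features (assumption).
    d_app = float(np.linalg.norm(app_a - app_b))
    # Semantic term: total-variation distance between class-probability vectors (assumption).
    d_sem = 0.5 * float(np.abs(sem_a - sem_b).sum())
    return d_app + lam * d_sem


def greedy_merge(sizes, app, sem, edges, threshold, lam=1.0):
    """Greedily merge the cheapest adjacent pair until every cost exceeds `threshold`.

    sizes: pixel count per initial region; app: (n, d) appearance features;
    sem: (n, k) class probabilities; edges: (i, j) adjacency pairs.
    Returns (root label per initial region, {root: argmax semantic class}).
    """
    n = len(sizes)
    parent = list(range(n))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    sizes = list(sizes)
    app = np.array(app, dtype=float)
    sem = np.array(sem, dtype=float)

    while True:
        # Adjacent pairs of current (merged) regions, ignoring self-pairs.
        pairs = {tuple(sorted((find(a), find(b)))) for a, b in edges}
        pairs = {p for p in pairs if p[0] != p[1]}
        if not pairs:
            break
        cost, (a, b) = min(
            (merge_cost(app[p[0]], app[p[1]], sem[p[0]], sem[p[1]], lam), p)
            for p in pairs
        )
        if cost > threshold:
            break
        # Merge b into a, updating features as size-weighted means.
        na, nb = sizes[a], sizes[b]
        app[a] = (na * app[a] + nb * app[b]) / (na + nb)
        sem[a] = (na * sem[a] + nb * sem[b]) / (na + nb)
        sizes[a] = na + nb
        parent[b] = a

    labels = [find(i) for i in range(n)]
    # Each surviving region gets a semantic label from its averaged prediction.
    classes = {r: int(np.argmax(sem[r])) for r in set(labels)}
    return labels, classes
```

In a toy chain of three regions with nearly identical appearance, where the third region is predicted as a different class, the semantic term keeps it separate. Setting `lam=0.0` (appearance only) merges all three. Because each merged region carries an averaged class-probability vector, reading off its argmax gives a per-region semantic label, loosely mirroring the abstract's point that adjusted semantic segmentations fall out of the same computation.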
