AniWho : A Quick and Accurate Way to Classify Anime Character Faces in Images

Aug 24, 2022

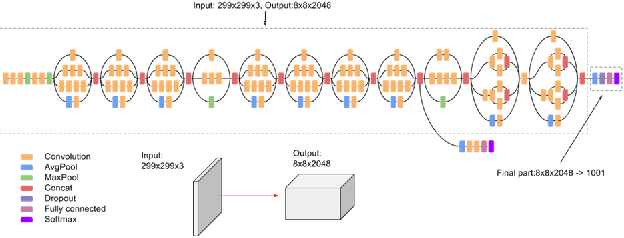

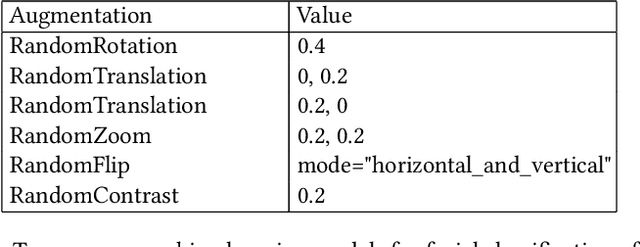

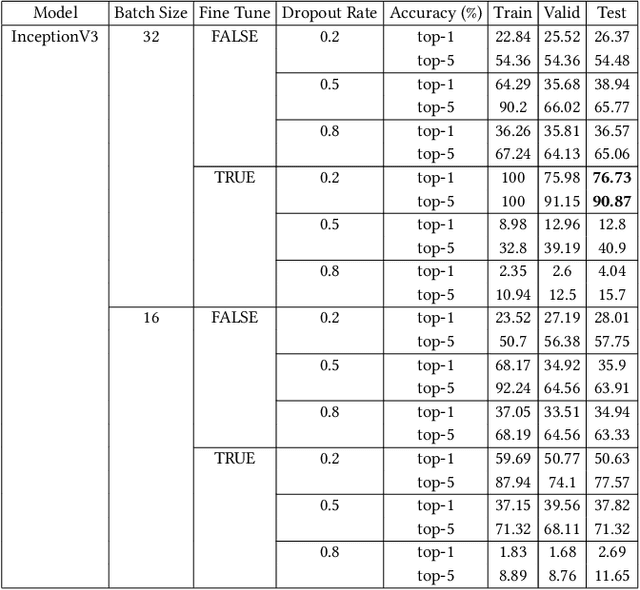

This paper compares several available models, including InceptionV3, InceptionResNetV2, MobileNetV2, and EfficientNet-B7, applied through transfer learning to classify Japanese animation-style character faces. EfficientNet-B7 achieves the highest top-1 accuracy at 85.08%, followed by MobileNetV2, which is slightly less accurate but offers much lower inference time and requires fewer parameters. The paper also evaluates a few-shot learning framework, specifically Prototypical Networks, which produces decent results and can serve as an alternative to traditional transfer learning methods.
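To make the Prototypical Networks idea mentioned above concrete, here is a minimal sketch of its classification step in plain Python. It assumes face images have already been mapped to embedding vectors by some encoder. The encoder is not shown, and the toy 2-D embeddings, the labels `character_a` and `character_b`, and the function names are all hypothetical illustrations, not the paper's implementation. Each class prototype is the mean of its support embeddings, and a query is assigned to the class whose prototype is nearest in squared Euclidean distance.

```python
import math
from collections import defaultdict


def class_prototypes(support_embeddings, support_labels):
    """Average the support embeddings of each class into one prototype vector."""
    grouped = defaultdict(list)
    for vec, label in zip(support_embeddings, support_labels):
        grouped[label].append(vec)
    return {
        label: [sum(dim) / len(vecs) for dim in zip(*vecs)]
        for label, vecs in grouped.items()
    }


def squared_euclidean(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query, prototypes):
    """Return (predicted_label, probabilities) using a softmax over negative distances."""
    labels = list(prototypes)
    logits = [-squared_euclidean(query, prototypes[label]) for label in labels]
    top = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    probs = {label: e / total for label, e in zip(labels, exps)}
    return max(probs, key=probs.get), probs


# Hypothetical toy embeddings: two support examples per character.
support = [[0.0, 0.0], [0.2, 0.0], [1.0, 1.0], [1.0, 0.8]]
labels = ["character_a", "character_a", "character_b", "character_b"]

prototypes = class_prototypes(support, labels)
predicted, probs = classify([0.9, 0.9], prototypes)
print(predicted, probs)
```

Because a new character only needs a handful of support embeddings to form a prototype, this style of classifier can add classes without retraining a final layer, which is one reason it is a plausible alternative to conventional transfer learning.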

* 8 pages, 12 figures, 7 tables
