An Ensemble Approach Towards Adversarial Robustness

Jun 10, 2021

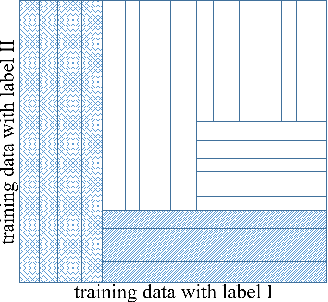

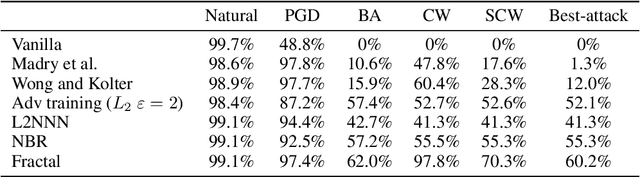

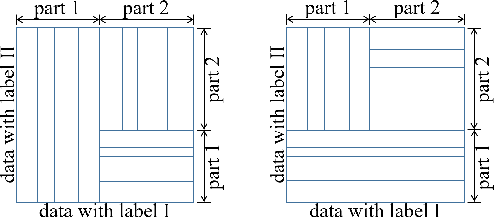

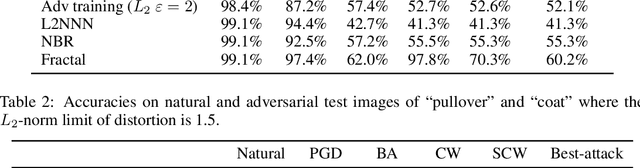

Adversarial robustness is known to come at a cost to natural accuracy. To improve this trade-off, this paper proposes an ensemble approach that divides a complex robust-classification task into simpler subtasks. Specifically, fractal divide derives multiple training sets from the training data, and fractal aggregation combines the inference outputs of the classifiers trained on those sets. The resulting ensemble classifiers have a unique property: robustness is ensured for an input if certain don't-care conditions are met. The new techniques are evaluated on MNIST and Fashion-MNIST without adversarial training. The MNIST classifier has 99% natural accuracy, with 70% measured robustness and 36.9% provable robustness within an L2 distance of 2. The Fashion-MNIST classifier has 90% natural accuracy, with 54.5% measured robustness and 28.2% provable robustness within an L2 distance of 1.5. Both results are new state of the art, and we also present new state-of-the-art binary results on challenging label pairs.
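
The abstract does not spell out how fractal divide and fractal aggregation work, so the sketch below is not the paper's method. It only illustrates the general divide-and-aggregate pattern with a plain one-vs-one decomposition: the training data is split into one binary subset per label pair, a toy nearest-centroid classifier is trained on each subset, and the outputs are combined by majority vote. All function names, the centroid classifier, and the toy 2D data are assumptions made for illustration.

```python
from collections import Counter
from itertools import combinations


def divide_by_label_pairs(samples, labels):
    """Derive one binary training set per unordered label pair (illustrative only)."""
    subsets = {}
    for a, b in combinations(sorted(set(labels)), 2):
        subsets[(a, b)] = [(x, y) for x, y in zip(samples, labels) if y in (a, b)]
    return subsets


def train_centroid_classifier(pairs):
    """Toy binary classifier: store the mean point of each label in the subset."""
    sums, counts = {}, Counter()
    for x, y in pairs:
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] += 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict_binary(centroids, x):
    """Return the label whose centroid is closest to x in squared L2 distance."""
    def sq_dist(c):
        return sum((xi - ci) ** 2 for xi, ci in zip(x, c))
    return min(centroids, key=lambda y: sq_dist(centroids[y]))


def aggregate(classifiers, x):
    """Combine the pairwise predictions by majority vote (hypothetical aggregation rule)."""
    votes = Counter(predict_binary(c, x) for c in classifiers.values())
    return votes.most_common(1)[0][0]


# Hypothetical toy data: three well-separated classes in 2D.
samples = [(0.0, 0.0), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9), (0.0, 5.0), (0.1, 5.2)]
labels = [0, 0, 1, 1, 2, 2]

classifiers = {
    pair: train_centroid_classifier(subset)
    for pair, subset in divide_by_label_pairs(samples, labels).items()
}
prediction = aggregate(classifiers, (4.8, 5.3))
```

Each pairwise classifier faces a simpler problem than the full multi-class task, which is the intuition the abstract appeals to. The robustness guarantees and don't-care conditions described in the paper are not modeled here.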
