Airfoil Shape Optimization using Deep Q-Network

Paper and Code

Nov 29, 2022

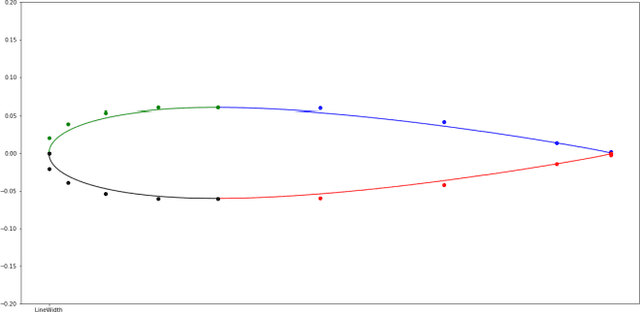

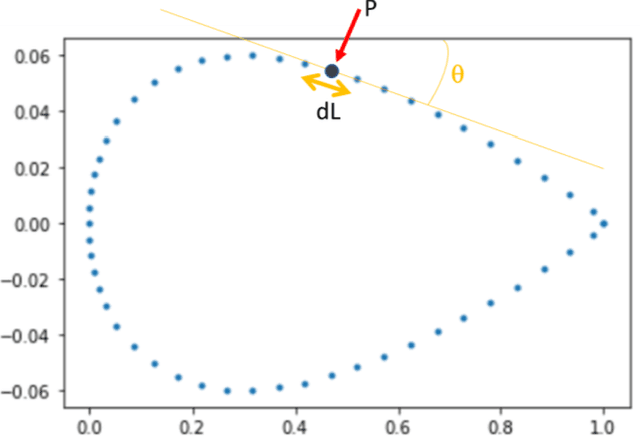

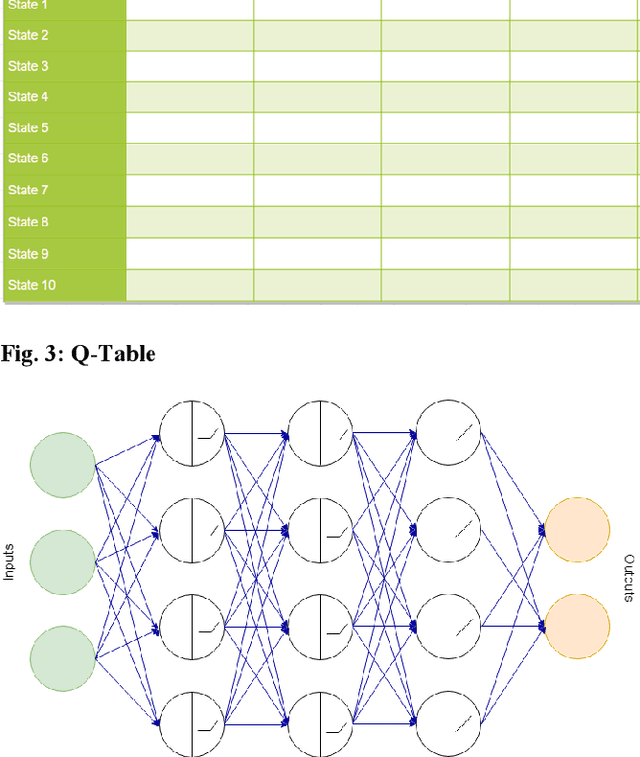

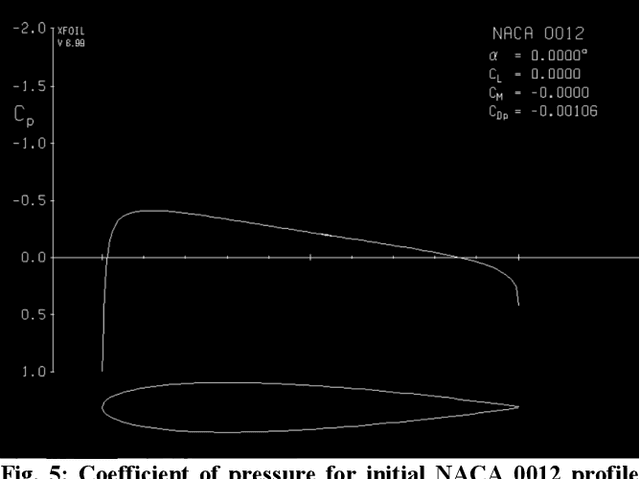

The feasibility of using reinforcement learning for airfoil shape optimization is explored. A Deep Q-Network (DQN) is applied to a Markov decision process to find the optimal shape by learning the best changes to the initial shape for achieving the required goal. The airfoil profile is generated from Bezier control points to reduce the number of control variables. Changes in the position of the control points are restricted to the direction normal to the chord line to reduce the complexity of the optimization. The process is designed as a search for an episode of changes applied to each control point of a profile. The DQN essentially learns the best episode of changes by updating the temporal difference of the Bellman optimality equation. The drag and lift coefficients are calculated from the distribution of the pressure coefficient along the profile, computed using the XFoil potential flow solver. These coefficients are used to assign a reward to every change during the learning process, where the ultimate aim is to maximize the cumulative reward of an episode.
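The ideas in the abstract can be illustrated with a minimal sketch. It is not the authors' code. It assumes the chord line lies along the x-axis, so a move normal to the chord changes only the y-coordinate of a control point. The function names (`bezier_curve`, `apply_action`, `td_target`), the discount factor, and the sample control points are all hypothetical. The XFoil evaluation that would supply the reward is omitted.

```python
from math import comb

import numpy as np


def bezier_curve(control_points, n_samples=50):
    """Evaluate a Bezier curve from (n+1, 2) control points via Bernstein polynomials."""
    pts = np.asarray(control_points, dtype=float)
    n = len(pts) - 1
    t = np.linspace(0.0, 1.0, n_samples)
    # Bernstein basis: B_{i,n}(t) = C(n, i) * t^i * (1 - t)^(n - i)
    basis = np.stack(
        [comb(n, i) * t**i * (1.0 - t) ** (n - i) for i in range(n + 1)], axis=1
    )
    return basis @ pts  # shape (n_samples, 2)


def apply_action(control_points, index, delta):
    """Return a copy with one control point shifted normal to the chord.

    Assumption: the chord is the x-axis, so only the y-coordinate changes.
    """
    moved = np.array(control_points, dtype=float)
    moved[index, 1] += delta
    return moved


def td_target(reward, next_q_values, gamma=0.99, done=False):
    """One-step target from the Bellman optimality equation:
    r + gamma * max_a' Q(s', a'), or just r at the end of an episode."""
    if done:
        return float(reward)
    return float(reward) + gamma * float(np.max(next_q_values))


# Hypothetical upper-surface control points (leading edge to trailing edge).
upper = np.array([[0.0, 0.0], [0.0, 0.08], [0.4, 0.12], [1.0, 0.0]])
perturbed = apply_action(upper, index=2, delta=0.01)
curve = bezier_curve(perturbed)
```

In a DQN, the temporal-difference error is the gap between `td_target(...)` and the network's current estimate of Q(s, a), and training reduces that gap. In this setting the reward passed to `td_target` would come from the lift and drag coefficients that XFoil returns for the perturbed profile.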
