AggSS: An Aggregated Self-Supervised Approach for Class-Incremental Learning


Aug 08, 2024

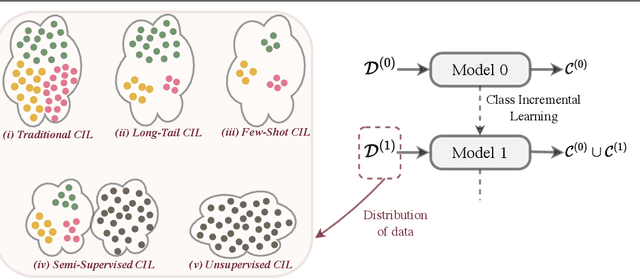

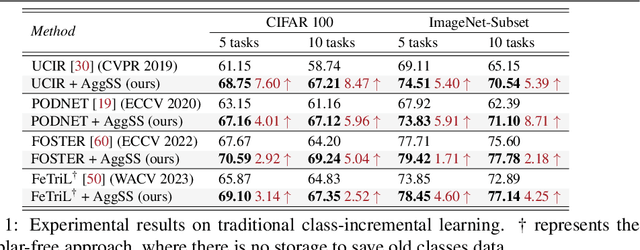

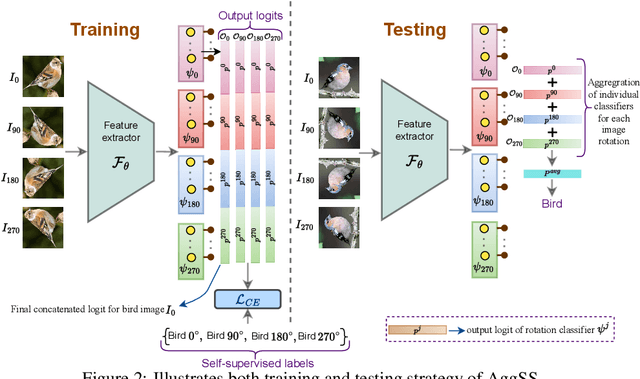

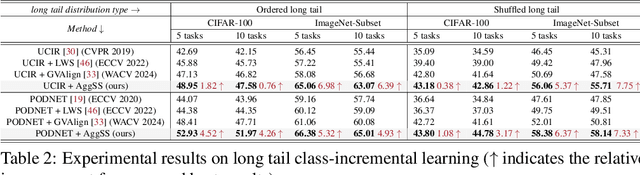

This paper investigates the impact of self-supervised learning, specifically image rotations, on various class-incremental learning paradigms. During training, each image under each predefined rotation is treated as a new class. At inference, the predictions for all rotations of an image are aggregated into the final prediction, a strategy we term Aggregated Self-Supervision (AggSS). We observe that the deep neural network's attention shifts towards intrinsic object features as it learns through the AggSS strategy, and that this more robust feature learning significantly enhances class-incremental learning. AggSS is a plug-and-play module that can be incorporated into any class-incremental learning framework to improve its performance. Extensive experiments on the standard incremental learning datasets CIFAR-100 and ImageNet-Subset demonstrate that AggSS plays a significant role in improving performance across these paradigms.
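The rotation-as-class idea and the inference-time aggregation can be illustrated with a minimal NumPy sketch. This is one plausible reading of the abstract, not the authors' implementation. The four rotations (0°, 90°, 180°, 270°), the joint label layout `class * 4 + rotation`, averaging as the aggregation rule, and the names `expand_with_rotations`, `aggregate_predictions`, and `predict_fn` are all assumptions made for illustration.

```python
import numpy as np

NUM_ROTATIONS = 4  # assumed: 0, 90, 180, 270 degrees


def expand_with_rotations(images, labels):
    """Turn each (image, label) pair into NUM_ROTATIONS training pairs.

    images: array of shape (N, H, W, C) with H == W
    labels: integer array of shape (N,)
    Assumed joint label layout: label * NUM_ROTATIONS + rotation_index.
    """
    rotated = [np.rot90(images, k=k, axes=(1, 2)) for k in range(NUM_ROTATIONS)]
    joint = [labels * NUM_ROTATIONS + k for k in range(NUM_ROTATIONS)]
    return np.concatenate(rotated), np.concatenate(joint)


def aggregate_predictions(predict_fn, images, num_classes):
    """Average, over rotations, the logits of the matching (class, rotation) outputs.

    predict_fn is a hypothetical model wrapper that maps a batch of images
    to logits of shape (N, num_classes * NUM_ROTATIONS).
    Returns per-class scores of shape (N, num_classes).
    """
    scores = np.zeros((images.shape[0], num_classes))
    for k in range(NUM_ROTATIONS):
        logits = predict_fn(np.rot90(images, k=k, axes=(1, 2)))
        per_class = logits.reshape(-1, num_classes, NUM_ROTATIONS)
        # For the k-th rotated view, keep only the outputs for rotation k.
        scores += per_class[:, :, k]
    return scores / NUM_ROTATIONS
```

The final class would then be `aggregate_predictions(...).argmax(axis=1)`. In a class-incremental setting, `num_classes` would grow as new tasks arrive. How the expanded head interacts with a particular incremental method is not specified in the abstract and is left out of this sketch.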
