Age and gender bias in pedestrian detection algorithms

Jun 25, 2019

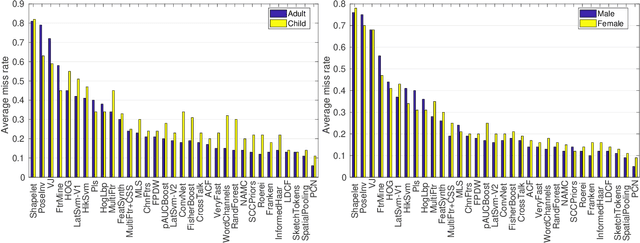

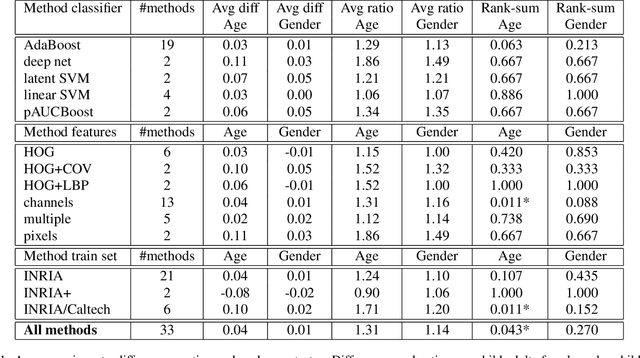

Pedestrian detection algorithms are important components of mobile robots, such as autonomous vehicles, and bear directly on human safety. Performance disparities in these algorithms could translate into disparate impact in the form of biased accident outcomes. To assess whether such concerns are warranted, we characterize the age and gender bias in the performance of state-of-the-art pedestrian detection algorithms. Our analysis is based on the INRIA Person Dataset extended with child, adult, male and female labels. We show that all 24 of the top-performing methods of the Caltech Pedestrian Detection Benchmark have higher miss rates on children than on adults. The difference is significant, and we analyse how it varies with the classifier, features and training data used by the methods. Algorithms were also gender-biased on average, but the performance differences were not significant. We discuss the source of the bias, the ethical implications, possible technical solutions and barriers.
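As a rough illustration of the comparison described above, the following minimal sketch computes a plain miss rate (missed ground-truth pedestrians divided by all ground-truth pedestrians) separately for each demographic label. It is not the paper's evaluation code: the function name `miss_rate_by_group`, the input format and the toy data are all hypothetical, and it assumes a single fixed detector operating point rather than a benchmark-style summary across false-positive rates.

```python
from collections import defaultdict


def miss_rate_by_group(annotations):
    """Return {group: miss rate} for (group_label, detected) pairs.

    Each pair describes one ground-truth pedestrian: its label
    (e.g. "child" or "adult") and whether the detector found it.
    The input format is an assumption made for this sketch.
    """
    totals = defaultdict(int)
    misses = defaultdict(int)
    for group, detected in annotations:
        totals[group] += 1
        if not detected:
            misses[group] += 1
    # Miss rate = missed pedestrians / all pedestrians in the group.
    return {group: misses[group] / totals[group] for group in totals}


# Hypothetical toy data, not results from the paper.
toy_annotations = [
    ("child", False),
    ("child", True),
    ("adult", True),
    ("adult", True),
    ("adult", False),
    ("adult", True),
]

rates = miss_rate_by_group(toy_annotations)
for group, rate in sorted(rates.items()):
    print(f"{group}: miss rate = {rate:.2f}")
```

A gap between the per-group rates on real labelled data would only suggest a disparity; whether it is significant would still need a statistical test of the kind the abstract refers to.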
