Accelerating the Development of Multimodal, Integrative-AI Systems with Platform for Situated Intelligence


Oct 12, 2020

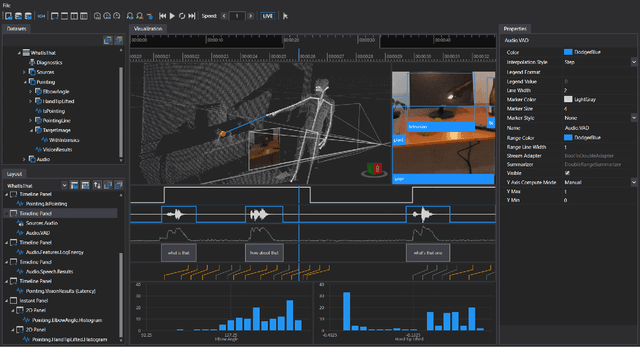

We describe Platform for Situated Intelligence, an open-source framework for building multimodal, integrative-AI systems. The framework provides infrastructure, tools, and components that enable and accelerate the development of applications that process multimodal streams of data and in which timing is critical. It is particularly well-suited for developing physically situated interactive systems that perceive and reason about their surroundings in order to better interact with people, such as social robots, virtual assistants, and smart meeting rooms. In this paper, we provide a brief, high-level overview of the framework and its main affordances, and discuss its implications for human-robot interaction (HRI).
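To make "multimodal streams in which timing is critical" more concrete, here is a minimal sketch of one recurring task: aligning two streams by time. It is not the framework's API (Platform for Situated Intelligence is a .NET framework). It assumes, hypothetically, that every message carries the time in seconds at which its underlying event was captured, and that each stream is sorted by that time. It then pairs each message of a primary stream with the nearest message of a secondary stream within a tolerance window. The names `Message`, `nearest`, and `fuse` are illustrative only.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import Any, List, Optional, Tuple


@dataclass(frozen=True)
class Message:
    # Hypothetical message type: a payload stamped with the time (seconds)
    # at which the underlying event was captured.
    time: float
    data: Any


def nearest(stream: List[Message], times: List[float], t: float,
            tolerance: float) -> Optional[Message]:
    """Return the message closest in time to t, or None if none lies within
    `tolerance`. Assumes `stream` is sorted by time and `times` mirrors it."""
    i = bisect_left(times, t)
    # Only the neighbors on either side of the insertion point can be nearest.
    candidates = [stream[j] for j in (i - 1, i) if 0 <= j < len(stream)]
    if not candidates:
        return None
    # On a tie, min() keeps the first candidate, i.e. the earlier message.
    best = min(candidates, key=lambda m: abs(m.time - t))
    return best if abs(best.time - t) <= tolerance else None


def fuse(primary: List[Message], secondary: List[Message],
         tolerance: float) -> List[Tuple[float, Any, Any]]:
    """Pair each primary message with the nearest secondary message in time;
    primary messages with no match inside the tolerance are dropped."""
    times = [m.time for m in secondary]  # computed once for binary search
    fused = []
    for m in primary:
        match = nearest(secondary, times, m.time, tolerance)
        if match is not None:
            fused.append((m.time, m.data, match.data))
    return fused


# Usage: video frames at roughly 30 Hz fused with sparser audio chunks.
video = [Message(0.000, "frame0"), Message(0.033, "frame1"),
         Message(0.066, "frame2"), Message(0.100, "frame3")]
audio = [Message(0.010, "chunk0"), Message(0.050, "chunk1")]
result = fuse(video, audio, tolerance=0.02)
print(result)
```

In this example `frame3` is dropped because no audio chunk lies within 20 ms of it. The tolerance trades completeness against temporal misalignment. The sketch also ignores concerns a real situated system must handle, such as messages arriving late or out of order.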

* 5 pages, 1 figure. Submitted to the 2020 AAAI Fall Symposium: Trust and Explainability in Artificial Intelligence for Human-Robot Interaction
