A Survey on Understanding, Visualizations, and Explanation of Deep Neural Networks

Feb 02, 2021

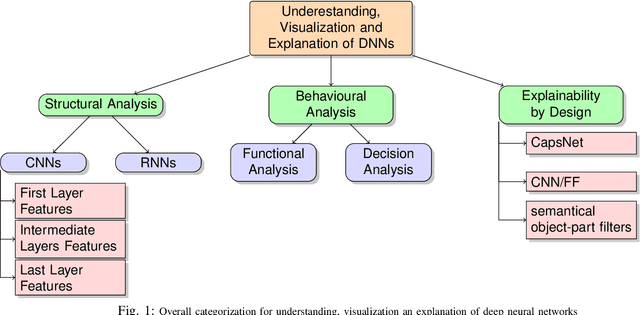

Recent advances in machine learning and signal processing have produced a surge of interest in Deep Neural Networks (DNNs), owing to their unprecedented performance and high accuracy on a range of challenging problems of significant engineering importance. However, when such deep learning architectures are used to make critical decisions, such as those involving human lives (e.g., in control systems and medical applications), it is of paramount importance to understand, trust, and, in a word, "explain" the reasoning behind the models' decisions. In many applications, artificial neural networks (including DNNs) are treated as black-box systems that do not provide sufficient clues about their internal processing. Although some recent efforts have sought to explain the behavior and decisions of deep networks, the explainable artificial intelligence (XAI) domain, which aims to reason about the behavior and decisions of DNNs, is still in its infancy. The aim of this paper is to provide a comprehensive overview of the Understanding, Visualization, and Explanation of the internal and overall behavior of DNNs.
