A Sentiment Analysis of Medical Text Based on Deep Learning

Paper and Code

Apr 16, 2024

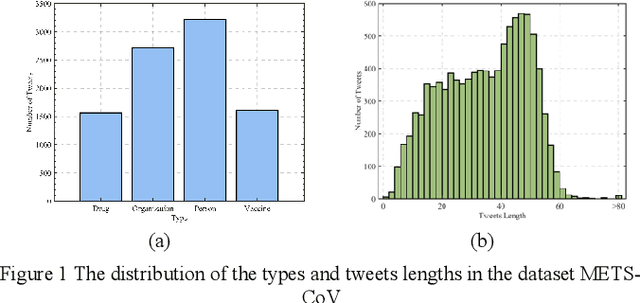

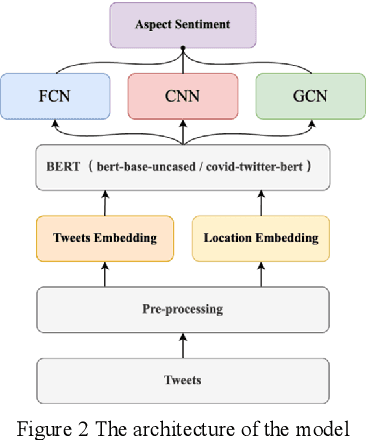

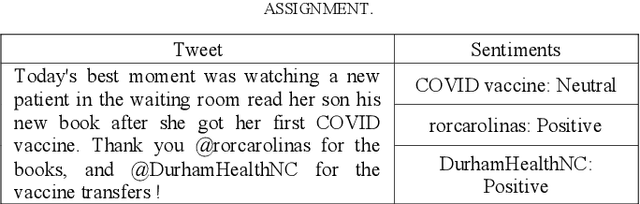

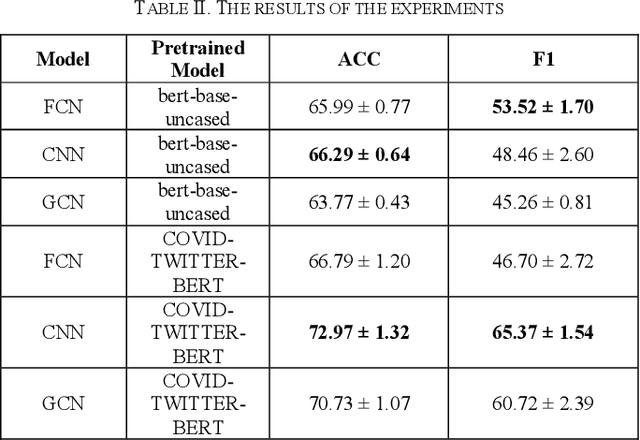

The field of natural language processing (NLP) has made significant progress with the rapid development of deep learning technologies. One of the research directions in text sentiment analysis is sentiment analysis of medical texts, which holds great potential for application in clinical diagnosis. However, the medical field currently lacks sufficient text datasets, and the effectiveness of sentiment analysis is greatly impacted by different model design approaches, which presents challenges. Therefore, this paper focuses on the medical domain, using bidirectional encoder representations from transformers (BERT) as the basic pre-trained model and experimenting with modules such as convolutional neural network (CNN), fully connected network (FCN), and graph convolutional networks (GCN) at the output layer. Experiments and analyses were conducted on the METS-CoV dataset to explore the training performance after integrating different deep learning networks. The results indicate that CNN models outperform other networks when trained on smaller medical text datasets in combination with pre-trained models like BERT. This study highlights the significance of model selection in achieving effective sentiment analysis in the medical domain and provides a reference for future research to develop more efficient model architectures.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge