A Secure Federated Learning Framework for Residential Short Term Load Forecasting

Paper and Code

Sep 29, 2022

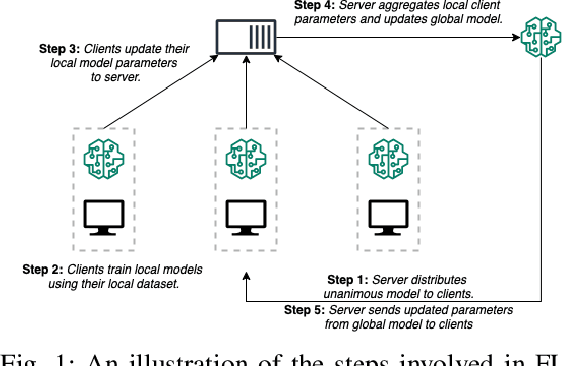

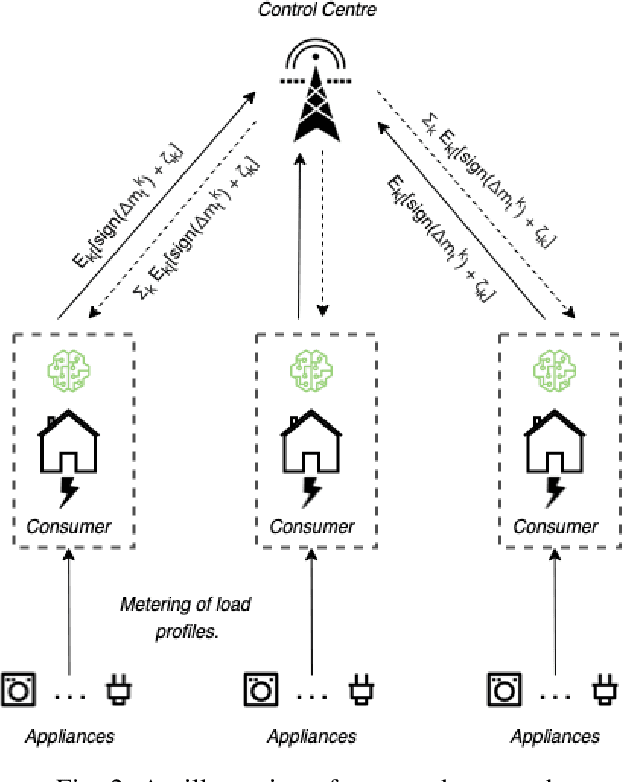

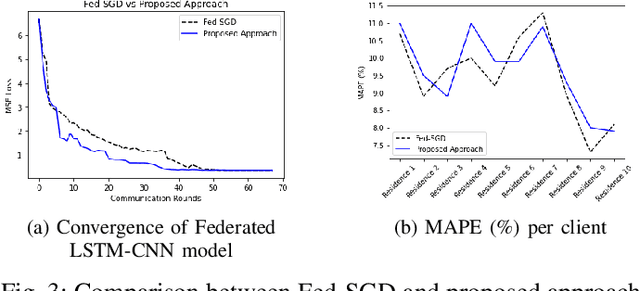

Smart meter measurements, though critical for accurate demand forecasting, face several drawbacks including consumers' privacy, data breach issues, to name a few. Recent literature has explored Federated Learning (FL) as a promising privacy-preserving machine learning alternative which enables collaborative learning of a model without exposing private raw data for short term load forecasting. Despite its virtue, standard FL is still vulnerable to an intractable cyber threat known as Byzantine attack carried out by faulty and/or malicious clients. Therefore, to improve the robustness of federated short-term load forecasting against Byzantine threats, we develop a state-of-the-art differentially private secured FL-based framework that ensures the privacy of the individual smart meter's data while protect the security of FL models and architecture. Our proposed framework leverages the idea of gradient quantization through the Sign Stochastic Gradient Descent (SignSGD) algorithm, where the clients only transmit the `sign' of the gradient to the control centre after local model training. As we highlight through our experiments involving benchmark neural networks with a set of Byzantine attack models, our proposed approach mitigates such threats quite effectively and thus outperforms conventional Fed-SGD models.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge