A Reinforcement Learning Approach to Health Aware Control Strategy

Paper and Code

Oct 19, 2020

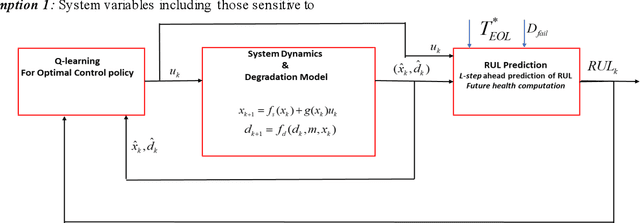

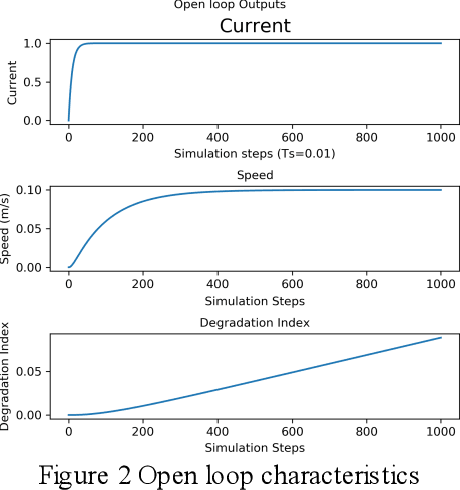

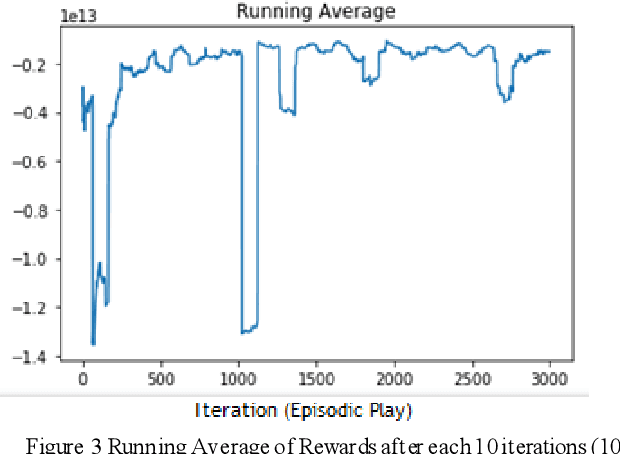

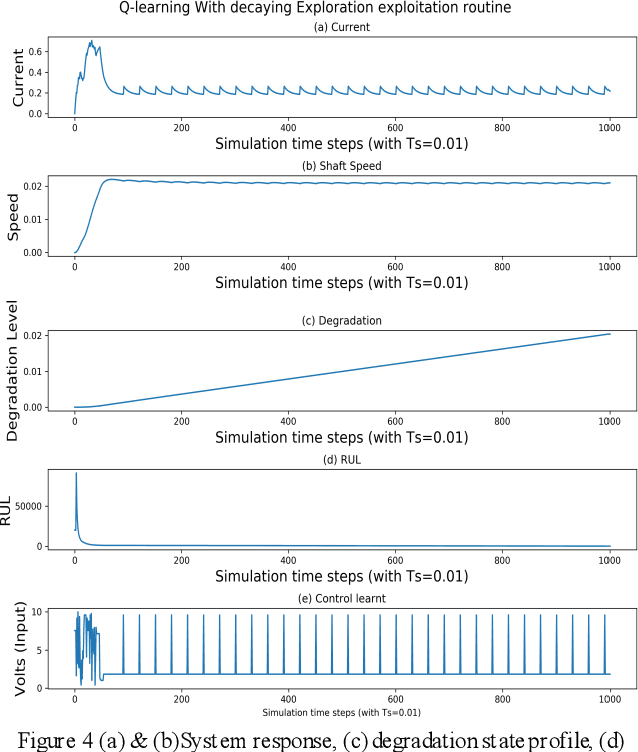

Health-aware control (HAC) has emerged as a domain in which control synthesis is based on failure prognostics of a system or component, or on Remaining Useful Life (RUL) predictions for critical components. Because mathematical dynamic (transition) models of RUL are rarely available, it is difficult to incorporate RUL information into the control paradigm. This paper presents a novel framework for health-aware control in which a reinforcement learning based approach is used to learn an optimal control policy in the face of component degradation by integrating global system transition data (generated by an analytical model that mimics the real system) with RUL predictions. The RUL prediction generated at each step is tracked to a desired RUL value. This tracking objective is integrated within a cost function that is maximized to learn the optimal control. The proposed method is studied using a simulation of a DC motor with shaft wear.
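The idea of tracking a predicted RUL to a desired value inside a learning objective can be illustrated with a minimal sketch. Everything below is an assumption made for illustration and is not taken from the paper: the toy wear model, the constants, the helper names (`predict_rul`, `reward`, `step`, `train`, `greedy_actions`), and the use of tabular Q-learning, since the abstract does not name the specific reinforcement learning algorithm. The reward is the negative squared RUL tracking error, so maximizing it drives the predicted RUL toward the target.

```python
import random
from collections import defaultdict

# Hypothetical toy setup: every constant here is an illustrative assumption,
# not a value from the paper.
SPEEDS = [0.5, 1.0, 1.5]   # candidate motor speed commands (the actions)
N_STEPS = 20               # episode length
WEAR_COEFF = 0.01          # shaft wear per step grows with speed squared
WEAR_LIMIT = 1.0           # wear level treated as failure
RUL_TARGET_0 = 100.0       # desired RUL at t = 0; it drops by one per step


def predict_rul(wear, speed):
    """Crude RUL estimate: steps left until WEAR_LIMIT at the current wear rate."""
    rate = WEAR_COEFF * speed ** 2
    return max(WEAR_LIMIT - wear, 0.0) / rate


def reward(rul, rul_target):
    """Negative squared tracking error: largest when RUL equals the target."""
    return -(rul - rul_target) ** 2


def step(t, wear, action):
    """Stand-in for the analytical model: apply a speed, return new wear and reward."""
    speed = SPEEDS[action]
    wear = wear + WEAR_COEFF * speed ** 2
    rul = predict_rul(wear, speed)
    return wear, reward(rul, RUL_TARGET_0 - (t + 1))


def state_of(t, wear):
    """Discretize (time, wear) into a tabular state."""
    return (t, min(int(wear * 20), 19))


def train(episodes=2000, alpha=0.1, gamma=0.95, eps=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = defaultdict(lambda: [0.0] * len(SPEEDS))
    for _ in range(episodes):
        wear = 0.0
        for t in range(N_STEPS):
            s = state_of(t, wear)
            if rng.random() < eps:
                a = rng.randrange(len(SPEEDS))
            else:
                a = max(range(len(SPEEDS)), key=lambda i: q[s][i])
            wear, r = step(t, wear, a)
            done = t == N_STEPS - 1
            target = r if done else r + gamma * max(q[state_of(t + 1, wear)])
            q[s][a] += alpha * (target - q[s][a])
    return q


def greedy_actions(q):
    """Roll out the greedy policy and return the chosen speed at each step."""
    wear, actions = 0.0, []
    for t in range(N_STEPS):
        s = state_of(t, wear)
        a = max(range(len(SPEEDS)), key=lambda i: q[s][i])
        actions.append(SPEEDS[a])
        wear, _ = step(t, wear, a)
    return actions
```

Calling `greedy_actions(train())` returns the speed command chosen at each step by the learned policy. In this sketch the `step` function plays the role of the analytical model that generates transition data; a real study would replace it with a DC motor and shaft wear simulation and a proper RUL predictor.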
