A Multiobjective Reinforcement Learning Framework for Microgrid Energy Management


Jul 17, 2023

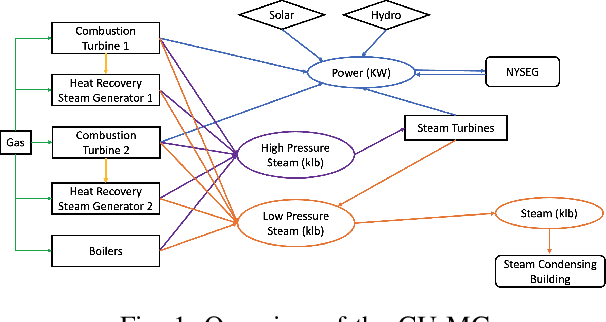

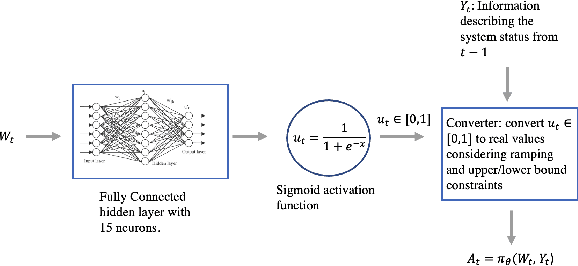

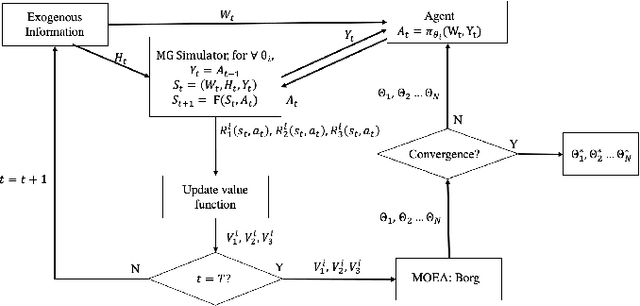

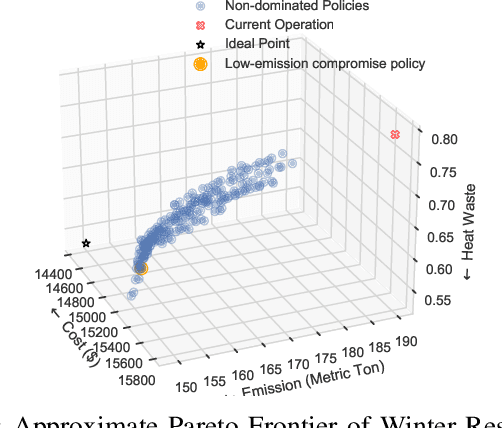

The emergence of microgrids (MGs) has provided a promising solution for decarbonizing and decentralizing the power grid, helping to mitigate the challenges posed by climate change. However, MG operations often involve multiple objectives that represent the interests of different stakeholders, which can lead to complex conflicts. To tackle this issue, we propose a novel multi-objective reinforcement learning framework that explores the high-dimensional objective space and uncovers the tradeoffs between conflicting objectives. The framework leverages exogenous information and capitalizes on the data-driven nature of reinforcement learning, enabling the training of a parametric policy without long-term forecasts or knowledge of the underlying uncertainty distribution. The trained policies exhibit diverse, adaptive, and coordinated behaviors and provide interpretable insights into the dynamics of their information use. To evaluate its effectiveness, we apply the framework to the Cornell University MG (CU-MG), a combined heat and power MG. The results demonstrate performance improvements in all objectives considered compared to status quo operations, and the framework offers more flexibility in navigating complex operational tradeoffs.
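To make the idea of "tradeoffs between conflicting objectives" concrete, the following is a minimal sketch of non-dominated (Pareto) filtering over candidate policies. It is not the framework proposed in the paper. The function name `pareto_front`, the two-objective setup, and the numbers are all illustrative assumptions, and every objective is assumed to be minimized.

```python
import numpy as np

def pareto_front(costs: np.ndarray) -> np.ndarray:
    """Return a boolean mask marking the non-dominated rows of `costs`.

    Each row holds the objective values of one candidate policy.
    Assumption: all objectives are to be minimized.
    """
    n = costs.shape[0]
    mask = np.ones(n, dtype=bool)
    for i in range(n):
        # Row j dominates row i if it is no worse in every objective
        # and strictly better in at least one.
        dominates_i = np.all(costs <= costs[i], axis=1) & np.any(costs < costs[i], axis=1)
        if dominates_i.any():
            mask[i] = False
    return mask

# Hypothetical objective vectors (e.g. operating cost, emissions);
# the values are made up for illustration and are not results from the paper.
candidates = np.array([
    [1.0, 5.0],
    [2.0, 3.0],
    [3.0, 4.0],  # dominated by [2.0, 3.0]
    [4.0, 1.0],
])
front_mask = pareto_front(candidates)
print(candidates[front_mask])
```

The rows that survive the filter are the ones for which improving one objective requires giving up another, which is the kind of tradeoff set an operator would choose from.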
