A Computer Vision System for Attention Mapping in SLAM based 3D Models


May 06, 2013

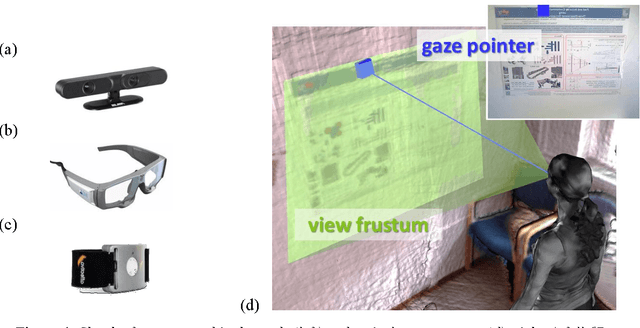

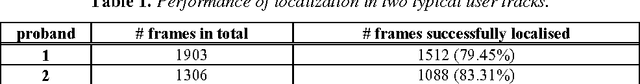

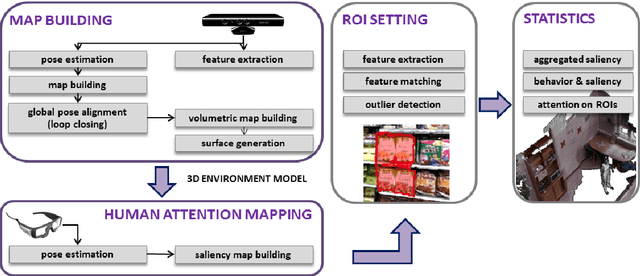

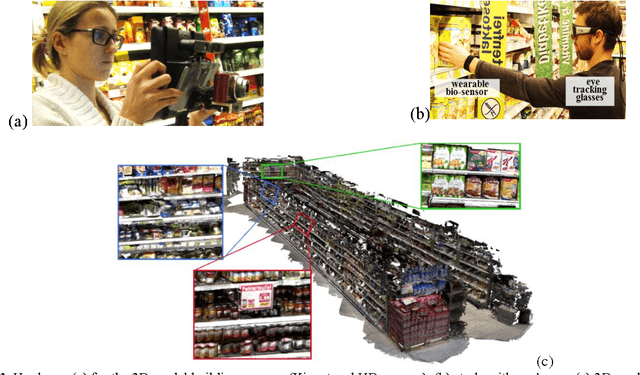

The study of human factors in interaction studies has been relevant to usability engineering and ergonomics for decades. Today, with the advent of wearable eye tracking and Google Glass, monitoring of human factors will soon become ubiquitous. This work describes a computer vision system that enables pervasive mapping and monitoring of human attention. The key contribution is that our methodology enables full 3D recovery of the gaze pointer, the human view frustum, and associated human-centred measurements directly in an automatically computed 3D model in real time. We apply RGB-D SLAM and descriptor matching methodologies for 3D modelling, localization, and fully automated annotation of regions of interest (ROIs) within the acquired 3D model. This innovative methodology will open new avenues for attention studies in real-world environments, bringing new potential to automated processing for human factors technologies.
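To make the idea of recovering a 3D gaze pointer concrete, the following is a minimal sketch, not the authors' implementation. It assumes a standard pinhole camera model, a depth value at the gaze pixel taken from an RGB-D frame, and a camera-to-world pose (rotation and translation) of the kind a SLAM system estimates. All function names, parameter names, and numeric values are hypothetical and chosen only for illustration.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def backproject_gaze(u: float, v: float, depth: float,
                     fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Back-project gaze pixel (u, v) with known depth into the camera frame.

    Assumes a pinhole model with focal lengths (fx, fy) and principal
    point (cx, cy); depth is measured along the optical axis.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def camera_to_world(p_cam: Sequence[float],
                    rotation: Sequence[Sequence[float]],
                    translation: Sequence[float]) -> Vec3:
    """Apply p_world = R * p_cam + t for a 3x3 row-major rotation R."""
    return tuple(
        sum(rotation[i][j] * p_cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def gaze_point_in_model(u: float, v: float, depth: float,
                        intrinsics: Tuple[float, float, float, float],
                        rotation: Sequence[Sequence[float]],
                        translation: Sequence[float]) -> Vec3:
    """Map a 2D gaze point to a 3D point in the model (world) frame."""
    fx, fy, cx, cy = intrinsics
    p_cam = backproject_gaze(u, v, depth, fx, fy, cx, cy)
    return camera_to_world(p_cam, rotation, translation)


if __name__ == "__main__":
    # Hypothetical intrinsics and pose, for illustration only.
    intrinsics = (500.0, 500.0, 320.0, 240.0)
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(gaze_point_in_model(420.0, 240.0, 2.0, intrinsics,
                              identity, (1.0, 0.0, 0.0)))
```

In this sketch the 3D gaze point is obtained per frame from a single depth sample. A practical system would also need to handle missing depth readings and the calibration between the eye tracker and the scene camera, neither of which is modelled here.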
