A Comprehensive Comparison between Neural Style Transfer and Universal Style Transfer


Jun 03, 2018

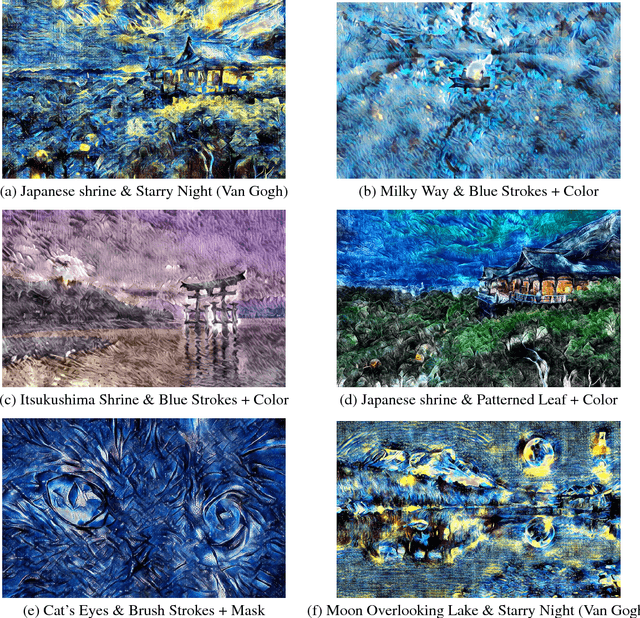

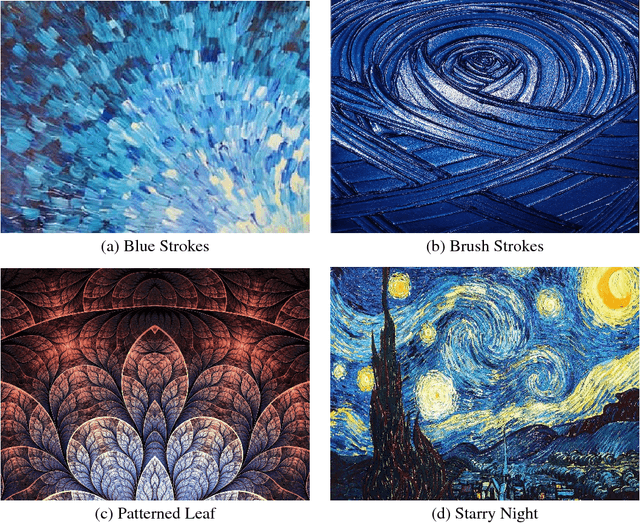

Style transfer aims to transfer arbitrary visual styles to content images. We explore algorithms adapted from two papers that tackle style transfer and its two recurring difficulties: generalizing to unseen styles and compromised visual quality. The majority of the improvements focus on optimizing the algorithm for real-time style transfer while adapting to new styles with considerably fewer resources and constraints. The two approaches we explore are neural style transfer with improvements and universal style transfer. We compare these strategies on how well they produce visually appealing images, and we also compare the images they produce and discuss how the results can be qualitatively measured.

* 9 pages, 4 figures
